Boston Dynamics' boring new video is its most important yet

The new Atlas humanoid robot doesn't do parkour but has capabilities that can create real value and pave the way for applications in unpredictable environments.

Boston Dynamics’ Atlas humanoid robot has long been known for pre-programmed feats of parkour and dance. A new demonstration, however, shows the humanoid performing a complex, long-horizon manipulation task in a simulated workshop.

Compared to the earlier viral videos of Boston Dynamics’ humanoid robots, this one is very boring. But there is a lot of depth to what the robot has achieved. Instead of executing a rigid script, the robot grasps, moves, and places parts based on a learned policy.

This capability is powered by a new class of machine learning systems called Large Behavior Models (LBMs), a joint effort between Boston Dynamics and the Toyota Research Institute (TRI) that moves the robot from a pre-programmed acrobat to an apprentice that learns from observation.

The power of learning by watching

The core innovation, as detailed by Boston Dynamics, is a single, generalist policy that can guide the robot through a multi-step task based on a high-level language prompt. This approach replaces discrete, hand-coded instructions for each action.

A crucial capability of this learned policy is its ability to react intelligently when things go wrong. If the robot drops a part or a container lid unexpectedly closes, the system can adapt and recover. This is not a pre-programmed contingency plan; the policy learns these recovery behaviors from seeing examples of them in its training data.

This method fundamentally changes how new skills are taught. Instead of requiring engineers with advanced degrees to write complex control algorithms, a new behavior can be programmed by simply demonstrating it. The researchers found the training process is the same whether the task is stacking rigid blocks or folding a t-shirt.

Sample-efficient training

This data-driven approach dramatically accelerates robot training. Foundational research from TRI shows that LBMs, when pretrained on a large and diverse dataset, can learn new, unseen tasks with roughly one-third to one-fifth of the task-specific data that would otherwise be needed. This significantly reduces the time, cost, and engineering effort required to deploy a robot for a new application.

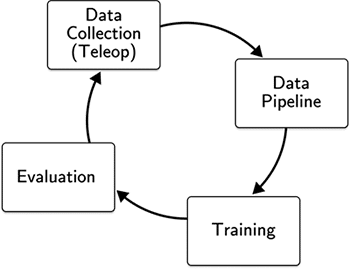

The teams created an efficient "data flywheel" to achieve this. Human operators use a custom VR teleoperation system to provide high-quality demonstrations. This data is fed into a machine learning pipeline to train the policy, which is then evaluated in a high-fidelity simulator before being deployed on the physical robot. This iterative loop allows for rapid and continuous improvement.
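The loop described above can be sketched in a few lines of Python. This is only an illustration of the collect, train, evaluate, deploy cycle: the `Flywheel` class, the stub functions, and the 0.8 deployment threshold are all hypothetical and are not taken from Boston Dynamics' or TRI's pipeline.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Flywheel:
    """Hypothetical container for one collect -> train -> evaluate -> deploy loop."""
    collect: Callable[[], List[dict]]           # returns new teleoperated demonstrations
    train: Callable[[List[dict]], dict]         # returns a policy trained on all data so far
    evaluate_in_sim: Callable[[dict], float]    # returns a success rate in [0, 1]
    deploy_threshold: float = 0.8               # assumed gate, not from the source
    dataset: List[dict] = field(default_factory=list)

    def step(self):
        """Run one iteration and report whether the policy passed the simulation gate."""
        self.dataset.extend(self.collect())
        policy = self.train(self.dataset)
        score = self.evaluate_in_sim(policy)
        return policy, score, score >= self.deploy_threshold


# Toy stand-ins: each round adds 10 demonstrations, and the simulated
# success rate grows with the amount of data (purely illustrative).
flywheel = Flywheel(
    collect=lambda: [{"task": "demo"}] * 10,
    train=lambda data: {"n_demos": len(data)},
    evaluate_in_sim=lambda policy: min(1.0, policy["n_demos"] / 50),
)

rounds = 0
deployed = False
while not deployed:
    _, score, deployed = flywheel.step()
    rounds += 1
    print(f"round {rounds}: sim success rate {score:.2f}, deploy={deployed}")
```

In this toy setup the policy only reaches the physical robot once it clears the simulation gate, which mirrors the ordering the teams describe.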

An additional benefit emerged at inference time: the learned policies can be executed 1.5 to 2 times faster than the original human demonstrations without a significant drop in performance. The teams' experiments show that for some tasks, the policies can execute movements faster than human teleoperation allows.
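One simple way to picture this kind of speed-up is to compress the timestamps of a predicted trajectory and resample it at the controller rate. The sketch below does that with linear interpolation. It is an assumption about how such a speed-up could be implemented, not a description of the teams' method, and the function names and toy trajectory are hypothetical.

```python
def speed_up(trajectory, factor):
    """Compress waypoint timestamps by `factor`, leaving joint targets unchanged."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    t0 = trajectory[0][0]
    return [(t0 + (t - t0) / factor, q) for t, q in trajectory]


def resample(trajectory, rate_hz):
    """Linearly interpolate (time, joints) waypoints onto a fixed-rate time grid."""
    dt = 1.0 / rate_hz
    t_start, t_end = trajectory[0][0], trajectory[-1][0]
    n_steps = int(round((t_end - t_start) * rate_hz))
    out, j = [], 0
    for k in range(n_steps + 1):
        t = min(t_start + k * dt, t_end)
        # Advance to the segment that contains time t.
        while j + 1 < len(trajectory) - 1 and trajectory[j + 1][0] < t:
            j += 1
        (ta, qa), (tb, qb) = trajectory[j], trajectory[j + 1]
        w = 0.0 if tb == ta else (t - ta) / (tb - ta)
        out.append((t, [a + w * (b - a) for a, b in zip(qa, qb)]))
    return out


# Toy one-joint trajectory lasting 3 seconds (illustrative values).
demo = [(0.0, [0.0]), (1.0, [0.5]), (3.0, [1.0])]

original_grid = resample(demo, rate_hz=30)
fast_grid = resample(speed_up(demo, factor=1.5), rate_hz=30)
print(len(original_grid), len(fast_grid))
```

The faster version visits the same joint targets in fewer control ticks. On real hardware, velocity and torque limits would cap how far the timing can be compressed.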

Under the hood: the large behavior model (LBM)

LBMs operate on a principle similar to the large language models (LLMs) that power modern text-based AI. While LLMs are pretrained on vast amounts of text from the internet to understand language, LBMs are pretrained on thousands of hours of physical robot interaction data to understand how to operate in the physical world.

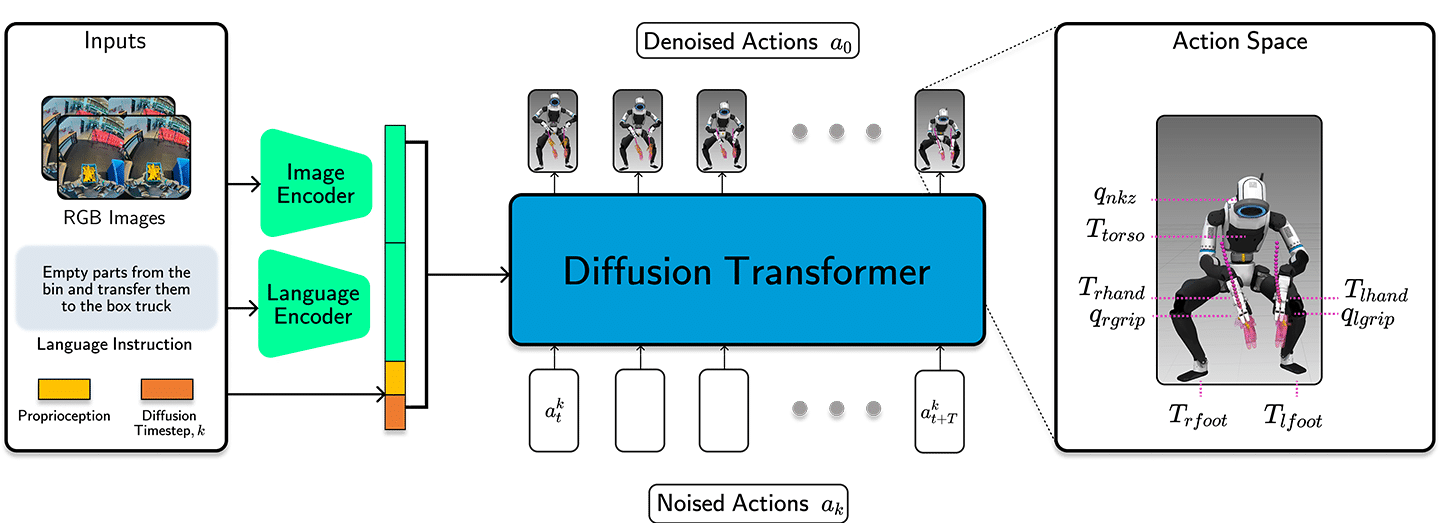

The specific architecture used for Atlas is a 450-million-parameter diffusion transformer. This model processes multiple streams of input data, including images from the robot’s stereo cameras, proprioceptive data about its own body position, and a high-level language prompt describing the task. It then outputs a continuous stream of actions at 30 Hz to control all 50 of Atlas’s degrees of freedom. Research from TRI confirmed that AI scaling laws apply here: performance smoothly and consistently improves as more high-quality pretraining data is added to the model.
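To make the inputs and outputs concrete, here is a minimal interface sketch: an observation bundles camera images, proprioception, and a prompt, and a policy maps it to a 50-dimensional action at 30 Hz. Only the 30 Hz rate and the 50 degrees of freedom come from the article. The class, the function names, and the stand-in policy are hypothetical, and the diffusion transformer itself is not modeled.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Sequence

NUM_DOF = 50           # degrees of freedom, from the article
CONTROL_RATE_HZ = 30   # action rate, from the article


@dataclass
class Observation:
    images: Sequence[bytes]       # placeholder for stereo camera frames
    proprioception: List[float]   # e.g. current joint positions
    prompt: str                   # high-level language instruction


Policy = Callable[[Observation], List[float]]


def hold_position_policy(obs: Observation) -> List[float]:
    """Stand-in for the learned model: command the current joint positions."""
    return list(obs.proprioception)


def run_control_loop(policy: Policy, get_observation, send_action, steps,
                     rate_hz=CONTROL_RATE_HZ, sleep=time.sleep,
                     clock=time.monotonic):
    """Query the policy once per tick and forward its action to the robot."""
    period = 1.0 / rate_hz
    next_tick = clock()
    for _ in range(steps):
        action = policy(get_observation())
        if len(action) != NUM_DOF:
            raise ValueError(f"expected {NUM_DOF} values, got {len(action)}")
        send_action(action)
        next_tick += period
        sleep(max(0.0, next_tick - clock()))  # wait out the rest of the tick


# Toy usage with a fake robot that reports all joints at zero.
sent = []
run_control_loop(
    hold_position_policy,
    get_observation=lambda: Observation([b"", b""], [0.0] * NUM_DOF, "sort the parts"),
    send_action=sent.append,
    steps=3,
)
print(len(sent), len(sent[0]))
```

Passing `sleep` and `clock` as parameters keeps the loop testable without real hardware or real delays.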

The foundation model revolution in robotics

The development of the Atlas LBM is a key data point in a broader industry-wide shift. For years, robotics development faced a fundamental challenge: robots were effective specialists but poor generalists. A model trained for a specific robot, task, and environment would often fail if any of those variables changed, forcing researchers to start from scratch.

A new paradigm, inspired by the success of LLMs, proposes that combining data from many diverse robots can create a generalist model superior to any one specialist. This is the central idea behind the Open X-Embodiment project, a collaboration between Google DeepMind and 33 other research labs that created a massive dataset from 22 different robot types.

The results supported the hypothesis. A general model called RT-1-X, trained on this diverse dataset, achieved a 50% higher success rate across various tasks than specialist models. This is strong evidence that robots can learn more effectively by sharing data across different physical forms, or embodiments.

The Atlas LBM is a direct product of this revolution. The TRI team trained its models on a mixture of data that included not only demonstrations from Atlas but also curated data from the public Open X-Embodiment dataset. This cross-embodiment training is a key source of the model's robust, generalizable performance.
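A common way to train on a mixture like this is to draw each training example from one of several datasets according to fixed sampling weights. The sketch below shows that idea. The dataset contents and the 70/30 weighting are invented for illustration, since the article does not state the actual mixture ratios.

```python
import random
from collections import Counter


def sample_batch(sources, weights, batch_size, rng):
    """Draw a batch by first picking a source by weight, then an episode from it."""
    names = list(sources)
    picks = rng.choices(names, weights=[weights[n] for n in names], k=batch_size)
    return [(name, rng.choice(sources[name])) for name in picks]


# Hypothetical episode IDs standing in for real demonstration data.
sources = {
    "atlas_teleop": [f"atlas_{i}" for i in range(100)],
    "open_x_embodiment": [f"oxe_{i}" for i in range(1000)],
}
weights = {"atlas_teleop": 0.7, "open_x_embodiment": 0.3}  # assumed ratio

rng = random.Random(0)  # fixed seed for reproducibility
batch = sample_batch(sources, weights, batch_size=1000, rng=rng)
counts = Counter(name for name, _ in batch)
print(counts)
```

Weighting by source rather than by dataset size keeps a small, high-value dataset from being swamped by a much larger public one.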

At the same time, the knowledge captured from the large text corpora used to train language models lets robotics models reason jointly about visual inputs and text prompts, something previous approaches could not do. This allows the models to plan complex tasks and dynamically update their action plans as the environment changes.

The road ahead is paved with shared data

Both Boston Dynamics and TRI state that this work is just a baseline. The immediate next step is to scale the data flywheel to increase the throughput, quality, and diversity of demonstration data. Their findings suggest that performance will continue to improve as the dataset grows.

Future research directions include incorporating more varied data sources, such as egocentric video of humans performing tasks, using reinforcement learning to further refine learned policies, and deploying more advanced vision-language-action (VLA) models for more complex, open-ended reasoning.

The era of programming robots in isolation is giving way to a new model of collective learning. The Atlas LBM demonstrates that the future of robotics depends on creating systems that can learn from the shared experiences of others, regardless of their physical form. The new Atlas videos might not be as exciting as the old ones, but they show progress toward humanoid robots that can deliver value in real-world applications.