Contextual document embeddings turbocharge RAG pipelines

How do you improve the embedding quality of encoder models in your RAG pipeline? By making them aware of similar documents and the context of the corpus.

One of the key challenges of RAG pipelines is choosing the right document for the input query. The traditional embedding-based approach to encoding and retrieving documents has an important shortcoming: It is not sensitive to the types and distributions of documents in the knowledge corpus.

Say you have a knowledge corpus composed of documents that share a similar structure and content, with nuanced differences. The classic embedding approach, in which each document is encoded independently and stored in the vector database, struggles to capture the specific features that distinguish each document. As a result, the quality of the documents your RAG pipeline retrieves will suffer. In fact, when the corpus documents are specialized and share many common features, classic search methods such as BM25 tend to outperform embedding-based search.
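To make the BM25 comparison concrete, here is a minimal Okapi BM25 scorer in plain Python. It assumes whitespace tokenization and the common defaults `k1=1.5` and `b=0.75`, and the toy lease documents are made up for illustration. Note how the IDF term down-weights words that appear in every document. That is a corpus-level signal, which an embedding computed for each document in isolation never sees.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each tokenized doc against a tokenized query with Okapi BM25."""
    n = len(docs)
    avgdl = sum(len(d) for d in docs) / n
    # Document frequency: how many docs contain each term (a corpus-level statistic).
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:
            if term not in tf:
                continue
            # Terms that are rare across the corpus get a higher IDF weight.
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            freq = tf[term]
            # Saturate term frequency and normalize by document length.
            norm = freq + k1 * (1 - b + b * len(d) / avgdl)
            score += idf * freq * (k1 + 1) / norm
        scores.append(score)
    return scores


# Hypothetical near-duplicate documents that differ in a single detail.
docs = [
    "lease agreement tenant pays rent monthly deposit refundable".split(),
    "lease agreement tenant pays rent monthly deposit nonrefundable".split(),
    "lease agreement tenant pays rent weekly deposit refundable".split(),
]
query = "lease weekly rent".split()
scores = bm25_scores(query, docs)
best = max(range(len(docs)), key=scores.__getitem__)
```

Because "lease" and "rent" occur in every document, they contribute almost nothing, and the distinguishing word "weekly" decides the ranking.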

Contextual Document Embeddings (CDE), a technique proposed by researchers at Cornell University, addresses this problem by making each document embedding aware of the rest of the corpus, and especially of similar documents.

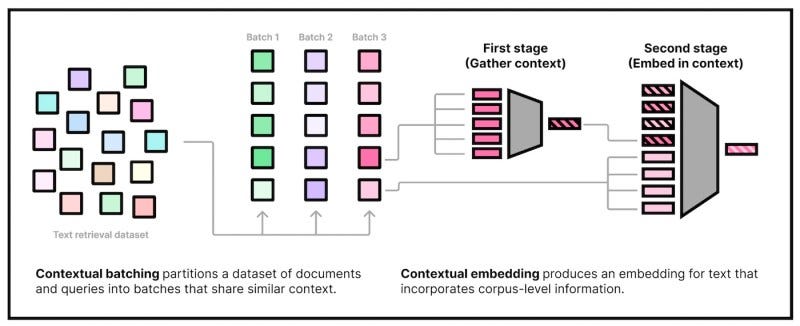

CDE makes two fundamental changes to embedding models to make them context-aware.

First, the training phase is modified so that the training examples are clustered based on their content. At each step of contrastive learning, the batch is drawn from a single cluster, so the positive and negative examples the model sees are all similar to one another. This forces the model to learn the nuances that separate the documents instead of relying on the coarse patterns that every document in the cluster shares.
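Here is a minimal sketch of the cluster-based batching idea. It assumes toy 2-D vectors in place of real encoder outputs, plain k-means as the clustering step, and a standard in-batch InfoNCE loss. The function names are hypothetical and this is not the paper's training code. It only shows why negatives drawn from the same cluster make the contrastive task harder.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def kmeans(points, k, iters=10):
    """Plain k-means; returns a cluster id for each point."""
    centroids = [list(p) for p in points[:k]]  # deterministic init: first k points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign each point to its nearest centroid (squared Euclidean distance).
        labels = [
            min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])))
            for p in points
        ]
        # Move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(xs) / len(members) for xs in zip(*members)]
    return labels


def cluster_batches(pairs, k, batch_size):
    """Group (query, doc) pairs so that every batch comes from a single cluster."""
    labels = kmeans([doc for _, doc in pairs], k)
    batches = []
    for c in range(k):
        members = [pair for pair, lab in zip(pairs, labels) if lab == c]
        for i in range(0, len(members), batch_size):
            batches.append(members[i:i + batch_size])
    return batches


def in_batch_contrastive_loss(batch, temperature=0.1):
    """InfoNCE: each query's positive is its own doc; other docs in the batch are negatives."""
    total = 0.0
    for i, (q, _) in enumerate(batch):
        logits = [dot(q, d) / temperature for _, d in batch]
        m = max(logits)
        log_z = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_z - logits[i]
    return total / len(batch)


# Toy data: two topics; each query equals its document for simplicity.
pairs = [
    ((1.0, 0.2), (1.0, 0.2)),    # topic A
    ((0.2, 1.0), (0.2, 1.0)),    # topic B
    ((1.0, -0.2), (1.0, -0.2)),  # topic A
    ((-0.2, 1.0), (-0.2, 1.0)),  # topic B
]
batches = cluster_batches(pairs, k=2, batch_size=2)
clustered_loss = in_batch_contrastive_loss(batches[0])       # same-topic negatives
mixed_loss = in_batch_contrastive_loss([pairs[0], pairs[1]])  # cross-topic negatives
```

The same-cluster batch produces a much larger loss than the mixed batch. Cross-topic negatives are trivially easy to reject, so only the clustered batch gives the model a signal about the fine-grained differences.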

The second change is a modification to the architecture of the embedding model. The researchers augment the encoder with a mechanism that gives it access to a sample of documents from the corpus during the embedding process. This allows the encoder to take the context of the corpus into account when generating a document's embedding.
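The two-stage data flow can be sketched as follows, under heavy assumptions. Documents are already toy vectors, the hypothetical `first_stage` only normalizes them, and the hypothetical `second_stage` mean-centers a document against the context embeddings. Mean-centering is a stand-in chosen for illustration, not CDE's mechanism. In the paper, the second stage is a transformer that receives the first-stage context embeddings as extra inputs alongside the document's tokens. The sketch only illustrates how conditioning on the corpus can separate documents that independent embeddings treat as near-duplicates.

```python
import math


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else list(v)


def first_stage(doc_vec):
    """Hypothetical stand-in for the first-stage encoder: L2-normalizes a precomputed vector."""
    return normalize(doc_vec)


def second_stage(doc_vec, context_embeddings):
    """Hypothetical stand-in for the context-aware encoder.

    Subtracts the mean of the context embeddings, which removes the
    component that all sampled corpus documents have in common.
    """
    dim = len(doc_vec)
    mean = [sum(e[i] for e in context_embeddings) / len(context_embeddings) for i in range(dim)]
    return normalize([x - m for x, m in zip(first_stage(doc_vec), mean)])


def cosine(u, v):
    return sum(a * b for a, b in zip(normalize(u), normalize(v)))


# Toy corpus: three documents dominated by a shared component.
doc_a = [5.0, 5.0, 1.0]
doc_b = [5.0, 5.0, -1.0]
doc_c = [5.0, 5.0, 0.0]
context = [first_stage(d) for d in (doc_a, doc_b, doc_c)]

independent_sim = cosine(doc_a, doc_b)
contextual_sim = cosine(second_stage(doc_a, context), second_stage(doc_b, context))
```

Encoded independently, `doc_a` and `doc_b` look almost identical. Once the shared corpus component is factored out, they point in nearly opposite directions. The output vector also has the same dimension as the input.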

The size of the embedding doesn’t change, so when using CDE, you don’t need to change your storage infrastructure.

The researchers have released a small version of CDE, and their experiments show that it outperforms classic bi-encoders of equal size on many retrieval tasks. CDE is especially effective when your knowledge corpus is very different from the training data of your embedding model. You can think of it as a lightweight alternative to fine-tuning an embedding model for your application.

Hopefully, the researchers will obtain the resources to test CDE at scale and also apply it to other modalities in the future.

Read more about CDE and my interview with lead author Jack Morris on VentureBeat

Read the paper on arXiv

See the model on Hugging Face