DeepCoder-14B challenges leading LLMs in coding at a fraction of the cost

And the entire stack and artifacts are open source for you to run, modify, and adapt to your use cases.

DeepCoder-14B, a new coding model developed by Together AI and Agentica, matches the capabilities of proprietary models at a fraction of the size.

According to the findings published by the team, DeepCoder-14B performs impressively on coding benchmarks such as LiveCodeBench (LCB), Codeforces, and HumanEval+.

"Our model demonstrates strong performance across all coding benchmarks… comparable to the performance of o3-mini (low) and o1," the researchers write in a blog post that describes the model.

The model also shows good transferability across tasks. For example, despite being trained primarily on coding tasks, the model shows improved mathematical reasoning on the AIME 2024 benchmark in comparison to the base model, DeepSeek-R1-Distill-Qwen-14B.

One of the interesting aspects of the project is how the researchers solved the challenges of training the model with reinforcement learning (RL).

First, they created a dataset with 24,000 high-quality problems using a specialized pipeline that gathers examples from different datasets and filters them for validity, complexity, and duplication.
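A filtering pass of this kind can be sketched in a few lines. This is only an illustration under stated assumptions: the field names (`statement`, `tests`, `reference_passes`), the use of test count as a proxy for complexity, and the `min_tests` threshold are hypothetical and are not taken from the team's pipeline.

```python
from typing import Dict, Iterable, List


def filter_problems(problems: Iterable[Dict], min_tests: int = 5) -> List[Dict]:
    """Keep problems that look valid, non-trivial, and not duplicated.

    Assumed (hypothetical) schema for each problem:
      statement        -- problem text
      tests            -- list of unit tests
      reference_passes -- True if a known-good solution passes the tests
    """
    seen = set()
    kept = []
    for problem in problems:
        # Validity: drop problems whose reference solution does not pass.
        if not problem.get("reference_passes", False):
            continue
        # Complexity proxy: require a minimum number of unit tests.
        if len(problem.get("tests", [])) < min_tests:
            continue
        # Duplication: compare statements after normalizing case and whitespace.
        key = " ".join(problem["statement"].lower().split())
        if key in seen:
            continue
        seen.add(key)
        kept.append(problem)
    return kept
```

A real pipeline would likely use stronger deduplication (for example, across dataset sources and against the evaluation benchmarks), but the three checks mirror the validity, complexity, and duplication filters described above.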

They also designed a reward function for the RL training that focuses on outcomes. The function provides a positive signal only if the generated code passes all sampled unit tests for the problem within a specific time limit. This prevents the model from reward hacking, that is, from learning tricks that score high on the test set but do not perform well on real-world tasks.
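The all-or-nothing shape of such a reward can be illustrated with a short sketch. It is a simplification, not the team's implementation: the function name, the `(input, expected)` test format, and the default time limit are assumptions, and the timing is checked after each call rather than enforced by a sandbox that would interrupt slow code.

```python
import time
from typing import Any, Callable, Sequence, Tuple


def sparse_reward(
    solution: Callable[[Any], Any],
    tests: Sequence[Tuple[Any, Any]],
    time_limit_s: float = 1.0,  # hypothetical per-test limit
) -> float:
    """Return 1.0 only if every test passes within the time limit, else 0.0."""
    for arg, expected in tests:
        start = time.perf_counter()
        try:
            result = solution(arg)
        except Exception:
            return 0.0  # a crash counts as a failure
        elapsed = time.perf_counter() - start
        if elapsed > time_limit_s or result != expected:
            return 0.0  # one slow or wrong test forfeits the whole reward
    return 1.0
```

Because there is no partial credit, a solution that passes most tests earns the same reward as one that passes none, which removes the incentive to special-case a few easy tests.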

They used Group Relative Policy Optimization (GRPO), the RL algorithm also used in DeepSeek-R1, and made some modifications to it that allowed the model to continue improving for longer training runs. They named the algorithm GRPO+.
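The core idea behind GRPO is that each sampled response is scored relative to the other responses generated for the same prompt, rather than by a separately trained value model. The sketch below shows only that baseline normalization step and none of the GRPO+ modifications. The function name, the `eps` constant, and the use of the population standard deviation are assumptions made for illustration.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def group_relative_advantages(rewards: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Normalize each reward against the mean and spread of its own group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population standard deviation
    # eps keeps the division finite when every reward in the group is equal.
    return [(r - mu) / (sigma + eps) for r in rewards]
```

With a binary reward, a group in which every sample passes or every sample fails produces all-zero advantages, so only prompts with mixed outcomes contribute a learning signal.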

Another interesting aspect of DeepCoder-14B is how the team scaled the model’s context window, starting with short reasoning sequences and gradually increasing the length. A complementary filtering method, dubbed “overlong filtering,” allows the model to generate longer chain-of-thought (CoT) reasoning when handling especially difficult problems.
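A minimal sketch of one way overlong filtering could work, assuming it means excluding responses that were cut off at the context limit from the training loss, so that long but unfinished reasoning is not penalized. The helper names and the rule that a response reaching `max_len` counts as truncated are hypothetical.

```python
from typing import List, Sequence


def overlong_filter_mask(lengths: Sequence[int], max_len: int) -> List[float]:
    """1.0 for responses that finished within the limit, 0.0 for truncated ones.

    Assumption: a response whose length reaches max_len was cut off.
    """
    return [0.0 if n >= max_len else 1.0 for n in lengths]


def masked_mean(losses: Sequence[float], mask: Sequence[float]) -> float:
    """Average the per-response losses over the responses that were kept."""
    kept = sum(mask)
    if kept == 0:
        return 0.0  # nothing to learn from if every response was truncated
    return sum(loss * m for loss, m in zip(losses, mask)) / kept
```

For example, with a 16K limit, `overlong_filter_mask([100, 16384, 9000], 16384)` drops the middle response, and the loss is averaged over the other two.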

With these techniques, they gradually scaled the context window of DeepCoder-14B from 16K to 32K tokens. Interestingly, the final model could also solve problems that required up to 64K tokens.

The team also developed verl-pipeline, an optimized extension of the open-source verl library for reinforcement learning from human feedback (RLHF), which addresses the sampling bottleneck of RL training.

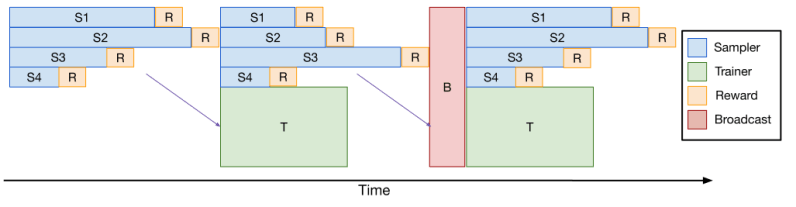

verl-pipeline uses a technique called “One-Off Pipelining,” which rearranges the response sampling and model updates in a way that optimizes GPU usage without allowing variable sample sizes to block different steps of the RL training.
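The scheduling idea can be illustrated with a toy loop in which sampling for the next step overlaps with the model update for the current step. This is a sketch under assumptions, not verl-pipeline's code: `sample` and `train` are hypothetical stand-ins, the policy is reduced to a version number, and the assumption that each batch may lag the trained policy by one update is an interpretation of the name "one-off."

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple


def sample(policy_version: int, step: int) -> Dict[str, int]:
    """Hypothetical sampler: records which policy version produced the batch."""
    return {"step": step, "sampled_with": policy_version}


def train(policy_version: int, batch: Dict[str, int]) -> int:
    """Hypothetical trainer: one update yields the next policy version."""
    return policy_version + 1


def run_one_off_pipeline(num_steps: int) -> List[Tuple[int, int, int]]:
    """Return (step, version that sampled the batch, version being trained)."""
    log = []
    policy_version = 0
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(sample, policy_version, 0)
        for step in range(num_steps):
            batch = pending.result()
            if step + 1 < num_steps:
                # Start sampling the next batch before this update finishes,
                # so the sampler is not idle while the trainer runs.
                pending = pool.submit(sample, policy_version, step + 1)
            log.append((step, batch["sampled_with"], policy_version))
            policy_version = train(policy_version, batch)
    return log
```

In this toy version, every batch after the first is generated by a policy that is one update behind the one being trained, which is the cost paid for keeping both stages busy.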

Compared to the baseline implementation, one-off pipelining can speed up RL training by up to 2x. Thanks to this optimization, DeepCoder-14B was trained in 2.5 weeks on 32 H100s.

The researchers have made all the artifacts for training and running DeepCoder-14B available on GitHub and Hugging Face under a permissive license.