DeepMind's Mind Evolution uses search and genetic algorithms to improve planning in LLMs

Let the model evolve a population of solutions. You'll be surprised by the results.

In a new paper, researchers at Google DeepMind have introduced “Mind Evolution,” an inference-time scaling technique that improves the ability of LLMs in planning and reasoning tasks.

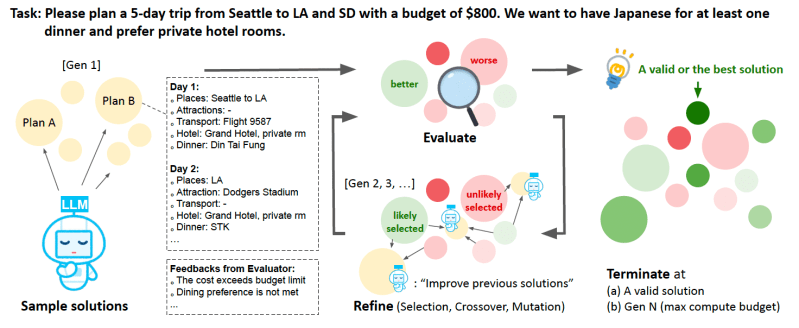

Mind Evolution relies on two key components: search and genetic algorithms. It starts by using an LLM to generate multiple candidate solutions expressed in natural language. The LLM then uses a “critic” prompt to evaluate each candidate and revises any candidate that does not meet the task's criteria.

Next, Mind Evolution applies a genetic algorithm to spawn new candidate solutions. It samples “parent” solutions from the existing population, with higher-quality solutions having a greater chance of being selected. It then uses crossover (choosing parent pairs and combining their elements to create a new solution) and mutation (making random changes to newly created solutions) to produce new candidates. Finally, it reuses the same evaluation step to refine them.
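The selection, crossover, and mutation steps described above can be sketched in a few lines of Python. This is a toy illustration, not the paper's implementation: Mind Evolution performs recombination through LLM prompts on natural-language solutions, while this sketch swaps in classic random operators on lists of cities. The function names, the sample itineraries, and the scores are all hypothetical.

```python
import random

def select_parents(population, scores, k, rng):
    """Sample k parents, with probability proportional to score.

    Assumes scores are non-negative; falls back to uniform sampling
    when every score is zero.
    """
    if sum(scores) == 0:
        return rng.choices(population, k=k)
    return rng.choices(population, weights=scores, k=k)

def crossover(parent_a, parent_b, rng):
    """One-point crossover: prefix of one parent, suffix of the other."""
    cut = rng.randint(1, len(parent_a) - 1)  # requires at least 2 elements
    return parent_a[:cut] + parent_b[cut:]

def mutate(candidate, options, rate, rng):
    """Replace each element with a random option with probability `rate`."""
    return [rng.choice(options) if rng.random() < rate else step
            for step in candidate]

# Hypothetical toy data: three itineraries with made-up quality scores.
rng = random.Random(0)
cities = ["Rome", "Paris", "Berlin", "Madrid"]
population = [
    ["Rome", "Paris", "Berlin"],
    ["Madrid", "Rome", "Paris"],
    ["Berlin", "Madrid", "Rome"],
]
scores = [0.9, 0.5, 0.1]

parent_a, parent_b = select_parents(population, scores, 2, rng)
child = mutate(crossover(parent_a, parent_b, rng), cities, 0.1, rng)
print(child)
```

Sampling with weights rather than always picking the top candidates keeps weaker solutions in play, which helps the population stay diverse.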

Mind Evolution repeats the cycle of evaluation, selection, and recombination until it finds a solution that satisfies the evaluator or exhausts its compute budget.
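To make the cycle concrete, here is a minimal sketch of an evolutionary loop with an explicit stopping rule. It is a stand-in under loud assumptions: the task (matching a hidden four-letter target), the `evaluate` function, the elitism step, and every parameter value are hypothetical, and random operators replace the LLM calls that Mind Evolution actually uses.

```python
import random

TARGET = list("PLAN")  # hypothetical toy goal, stands in for a valid plan
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"

def evaluate(candidate):
    """Fraction of positions that match the target (1.0 means solved)."""
    return sum(c == t for c, t in zip(candidate, TARGET)) / len(TARGET)

def evolve(pop_size=20, max_generations=200, mutation_rate=0.1, seed=0):
    if max_generations < 1:
        raise ValueError("max_generations must be at least 1")
    rng = random.Random(seed)
    population = [[rng.choice(ALPHABET) for _ in TARGET]
                  for _ in range(pop_size)]
    history = []  # best score seen in each generation

    for _ in range(max_generations):
        # Evaluation: score every candidate in the population.
        scores = [evaluate(c) for c in population]
        best_score = max(scores)
        best = population[scores.index(best_score)]
        history.append(best_score)
        if best_score == 1.0:  # stop as soon as a valid solution exists
            break

        # Selection and recombination: build the next generation.
        weights = [s + 0.01 for s in scores]  # keep zero-score parents possible
        next_population = [best]  # elitism: never lose the current best
        while len(next_population) < pop_size:
            a, b = rng.choices(population, weights=weights, k=2)
            cut = rng.randint(1, len(TARGET) - 1)
            child = a[:cut] + b[cut:]
            child = [rng.choice(ALPHABET) if rng.random() < mutation_rate else g
                     for g in child]
            next_population.append(child)
        population = next_population

    return best, best_score, history

best, score, history = evolve()
print("".join(best), score, len(history))
```

The loop ends in one of two ways: a candidate passes the evaluator, or the generation budget runs out and the best candidate found so far is returned.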

One of the challenges of evaluating LLM outputs in planning and reasoning tasks is that for many tasks, the problem has to be formalized from natural language into a structured, symbolic representation that can be processed by a solver program. Mind Evolution has been designed to work directly with natural language task descriptions. This allows the system to avoid formalizing problems as long as a programmatic solution evaluator is available. It also means that the evaluator can provide textual feedback, which makes it easier to understand and investigate the reasoning process.
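A programmatic evaluator of this kind can be quite simple. The sketch below is a hypothetical example, not the evaluator used in the paper: it assumes a plan written as plain text with lines such as `Day 1: Paris`, checks two made-up constraints (every day has a city, every required city appears), and returns both a score and textual feedback that could be passed back to the LLM.

```python
import re
from dataclasses import dataclass
from typing import List

@dataclass
class Evaluation:
    score: float         # fraction of checks passed; 1.0 means a valid plan
    feedback: List[str]  # one human-readable message per violation

# Assumed plan format: one "Day <number>: <city>" entry per line.
DAY_LINE = re.compile(r"Day[ \t]+(\d+):[ \t]*([A-Za-z ]+)")

def evaluate_plan(plan_text, required_cities, total_days):
    """Check a free-text plan against simple, hypothetical constraints."""
    visits = {int(day): city.strip()
              for day, city in DAY_LINE.findall(plan_text)}
    feedback = []

    for day in range(1, total_days + 1):
        if day not in visits:
            feedback.append(f"Day {day} has no city assigned.")
    for city in required_cities:
        if city not in visits.values():
            feedback.append(f"{city} is required but never visited.")

    checks = total_days + len(required_cities)
    if checks == 0:
        return Evaluation(score=1.0, feedback=[])
    return Evaluation(score=(checks - len(feedback)) / checks,
                      feedback=feedback)

result = evaluate_plan("Day 1: Paris\nDay 2: Rome\n",
                       required_cities=["Paris", "Rome", "Berlin"],
                       total_days=3)
print(result.score)
for message in result.feedback:
    print("-", message)
```

Because the feedback is plain text, it can be read by a person debugging the run or inserted directly into the next revision prompt.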

The researchers tested Mind Evolution against multiple baselines, including single-pass inference, Best-of-N, and Sequential-Revision+. The latter is a revision technique in which 10 candidate solutions are proposed independently and then revised separately for 80 turns. It is similar to Mind Evolution but without the genetic algorithm component. For reference, they also included an additional 1-pass baseline that uses OpenAI o1-preview, which is very advanced but also very expensive.
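For readers unfamiliar with these baselines, the two search-based ones can be sketched as follows. This is a rough illustration only: `generate`, `revise`, and `score` are hypothetical callables standing in for LLM calls and the evaluator, and stopping a thread early once it is solved is an assumption, not a detail confirmed by the article.

```python
def best_of_n(generate, score, n):
    """Draw n independent candidates and keep the highest-scoring one."""
    candidates = [generate() for _ in range(n)]
    return max(candidates, key=score)

def sequential_revision(generate, revise, score,
                        n_threads=10, n_turns=80, solved=1.0):
    """Revise several independent candidates in separate threads.

    Each thread starts from its own candidate and is revised for up to
    n_turns turns; threads never exchange information with each other.
    """
    best = None
    for _ in range(n_threads):
        candidate = generate()
        for _ in range(n_turns):
            if score(candidate) >= solved:  # assumed early stop
                break
            candidate = revise(candidate)
        if best is None or score(candidate) > score(best):
            best = candidate
    return best

# Toy usage with integers in place of plans: the "goal" is the number 10.
toy_score = lambda x: -abs(x - 10)
samples = iter([3, 9, 7])
print(best_of_n(lambda: next(samples), toy_score, n=3))
print(sequential_revision(lambda: 0, lambda x: x + 1, toy_score,
                          n_threads=2, n_turns=80, solved=0))
```

The contrast with Mind Evolution is that neither baseline lets candidates share material: Best-of-N never revises, and sequential revision improves each thread in isolation.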

They carried out most tests on the fast and affordable Gemini 1.5 Flash. Where the Flash model couldn’t solve the problem, they used a two-stage approach that does additional inference with Gemini 1.5 Pro.

As benchmarks, they used trip and meeting planning tasks that are very difficult for LLMs to solve without formal solvers. In all their tests, Mind Evolution outperformed the baselines by a wide margin, all while using a fraction of the inference costs.

Mind Evolution achieves a 95% success rate on the TravelPlanner benchmark, compared with 5.6% for Gemini 1.5 Flash and 11.7% for o1-preview. On the Trip Planning benchmark, Mind Evolution achieves 94.1% against a maximum 77% success rate for the next best method (Sequential-Revision+). The performance gap between Mind Evolution and other techniques also widens as the number of cities grows, indicating its ability to handle more complex planning tasks. With the two-stage process, Mind Evolution reached near-perfect success rates on all benchmarks.

This is the latest of several inference-time scaling techniques that aim to improve the capabilities of LLMs by allowing them to use more compute cycles to “think” about their answers, review them, and come up with refined outputs.