Does aligning LLMs with human cognition come at the cost of less powerful models?

To make AI more human-like, must we sacrifice its power? A new study shows why LLM efficiency creates a gap in understanding.

A new paper from researchers at Meta, Stanford, and Wand.AI investigates a fundamental question: Do large language models (LLMs) organize information in the same way humans do?

The study, whose authors include prominent names such as Yann LeCun and Dan Jurafsky, reveals that LLMs show a surprising ability to form broad concepts similar to our own. However, they follow a starkly different strategy for processing information, prioritizing aggressive statistical compression over the contextual richness that defines human thought.

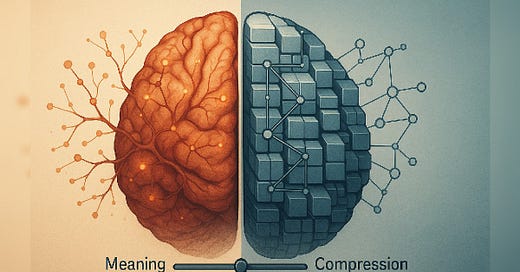

The compression-meaning tradeoff

The research centers on the tradeoff between compression and meaning. Humans constantly perform this balancing act. To manage information overload, we group diverse instances into compact categories; for example, a “robin” and a “blue jay” are both “birds.” This is compression. At the same time, we preserve essential details; we know a robin is a more typical bird than a penguin (which, unlike most birds, cannot fly). This is preserving meaning, or semantic fidelity. The study explores whether LLMs strike this same balance.

To systematically compare information representation strategies in humans and LLMs, the researchers developed a novel framework based on information theory, particularly Rate-Distortion Theory (which explores the dynamics of data compression and loss of information). This approach allowed them to quantitatively measure how any system, human or AI, balances representational complexity (the cost of storing information) against semantic distortion (the loss of meaning).
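To make the tradeoff concrete, here is a minimal sketch of a rate-distortion style score. It assumes the objective takes the form complexity + β × distortion, with complexity measured as the mutual information between items and their clusters (for a hard clustering of equally weighted items) and distortion as the mean squared distance of each item to its cluster centroid. The function names, the toy vectors, and the default `beta` are illustrative assumptions, not the authors' code.

```python
import math

def complexity_bits(clusters):
    """Mutual information I(X;C) in bits, assuming a hard clustering
    of equally weighted items: log2(n) - sum_c (|c|/n) * log2(|c|)."""
    n = sum(len(c) for c in clusters)
    return math.log2(n) - sum(len(c) / n * math.log2(len(c)) for c in clusters)

def distortion(clusters):
    """Mean squared Euclidean distance from each item to its cluster centroid."""
    n = sum(len(c) for c in clusters)
    total = 0.0
    for c in clusters:
        dim = len(c[0])
        centroid = [sum(v[d] for v in c) / len(c) for d in range(dim)]
        total += sum(sum((v[d] - centroid[d]) ** 2 for d in range(dim)) for v in c)
    return total / n

def tradeoff_score(clusters, beta=1.0):
    """Assumed objective: complexity + beta * distortion (lower is more efficient)."""
    return complexity_bits(clusters) + beta * distortion(clusters)

# Toy 2-D "embeddings" (hypothetical values), grouped three different ways.
a, b, c, d = (0.0, 0.0), (0.0, 2.0), (4.0, 0.0), (4.0, 2.0)
one_big_cluster = [[a, b, c, d]]      # maximal compression, most meaning lost
two_clusters = [[a, b], [c, d]]       # a middle ground
singletons = [[a], [b], [c], [d]]     # no compression, no distortion

for name, grouping in [("one", one_big_cluster), ("two", two_clusters), ("four", singletons)]:
    print(name, complexity_bits(grouping), distortion(grouping), tradeoff_score(grouping))
```

Merging everything into one cluster costs nothing to store but distorts heavily, while keeping every item separate does the opposite. The value of `beta` decides how much distortion a system is willing to accept in exchange for a more compact representation.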

The researchers applied their novel framework in a three-stage process. First, they used embeddings and clustering algorithms to examine how information is condensed into broad categories in humans and LLMs and how well they align.
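As a rough illustration of the alignment step, the sketch below compares cluster assignments derived from a model against human category labels using normalized mutual information (NMI). It assumes the clustering has already been run on the embeddings, and it normalizes by the geometric mean of the two entropies, which is one of several common conventions. The labels are invented toy data, and NMI is used here as a representative agreement measure rather than as the paper's exact metric.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in nats) of a label sequence."""
    n = len(labels)
    return -sum((k / n) * math.log(k / n) for k in Counter(labels).values())

def mutual_information(a, b):
    """Mutual information (in nats) between two labelings of the same items."""
    n = len(a)
    joint = Counter(zip(a, b))
    count_a, count_b = Counter(a), Counter(b)
    return sum(
        (nij / n) * math.log(nij * n / (count_a[x] * count_b[y]))
        for (x, y), nij in joint.items()
    )

def nmi(a, b):
    """Normalized mutual information, using the geometric mean of the entropies."""
    ha, hb = entropy(a), entropy(b)
    if ha == 0.0 or hb == 0.0:
        # A single-cluster labeling only agrees with another single-cluster labeling.
        return 1.0 if ha == hb else 0.0
    return mutual_information(a, b) / math.sqrt(ha * hb)

# Hypothetical human categories and two hypothetical model clusterings.
human = ["bird", "bird", "bird", "fruit", "fruit", "fruit"]
model_aligned = [0, 0, 0, 1, 1, 1]   # same grouping, different cluster names
model_mixed = [0, 1, 0, 1, 0, 1]     # grouping only weakly related to the categories

print(nmi(human, model_aligned))
print(nmi(human, model_mixed))
```

A score near 1 means the model's clusters carve up the items the way people do, regardless of how the clusters are named; a score near 0 means the two groupings are essentially unrelated.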

Second, they assessed how well meaning is preserved within these compressed representations, probing whether LLMs can capture the fine-grained internal structure of human concepts, like item typicality.
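One way to probe typicality, sketched below, is to take the cosine similarity between each item's embedding and its category label's embedding, then check how well that ranking agrees with human typicality ratings using Spearman's rank correlation. The vectors and ratings here are invented toy values chosen for illustration, not data from the study, and the helper names are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result

def pearson(x, y):
    """Pearson correlation coefficient."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

# Hypothetical 2-D embeddings for the category label and three items.
bird = [1.0, 0.0]
items = {"robin": [0.9, 0.1], "blue jay": [0.8, 0.3], "penguin": [0.3, 0.9]}
# Hypothetical human ratings (higher = more typical of "bird").
human_typicality = {"robin": 3.0, "blue jay": 2.0, "penguin": 1.0}

names = list(items)
model_scores = [cosine(items[n], bird) for n in names]
human_scores = [human_typicality[n] for n in names]
print(spearman(model_scores, human_scores))
```

In this toy case the model's ordering matches the human one exactly. The study reports only modest agreement for real models, which would show up here as a correlation well below 1.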

Finally, they used their full framework to quantitatively score the overall efficiency with which each system navigates the fundamental tradeoff.

The analysis compared a diverse suite of LLMs against a robust human baseline. The models included encoder-only architectures like BERT and decoder-only models such as Llama, Gemma, and Qwen, with parameter counts ranging from 300 million to 72 billion. The human data was sourced from seminal, meticulously curated cognitive psychology studies from the 1970s that mapped how people form categories and perceive item typicality.

Convergences and divergences

The first key finding is that LLMs get the big picture right. When the models’ embeddings were clustered, they formed broad conceptual categories that significantly aligned with human-defined ones. As the paper states, “These findings confirm that LLMs can recover broad, human-like categories from their embeddings.” Notably, this ability wasn't just a function of scale. Certain smaller encoder models, like BERT-large, showed surprisingly strong alignment, in some cases outperforming much larger decoder-only models.

However, the devil is in the details. While the broad categories matched, the internal structure of those categories did not. LLMs struggled to capture the fine-grained semantic nuances that are crucial to human understanding, such as item typicality. Their internal representations showed only a modest alignment with human judgments about which items are better examples of a category.

The researchers suggest this is because humans and LLMs rely on different criteria. As the paper explains, “The factors driving an item’s embedding similarity to its category label’s embedding in LLMs may differ from the rich, multifaceted criteria (e.g., perceptual attributes, functional roles) underpinning human typicality.”

This leads to the most critical finding: LLMs and humans are optimizing for fundamentally different objectives. Based on information theory measures, LLMs achieve a more "optimal" balance because they have a strong bias toward aggressive statistical compression. In contrast, human conceptual systems appear less efficient by this measure. This "suboptimality" is likely a feature, not a bug, enabling humans to adapt more dynamically to the messiness and unpredictability of the real world.

Why it matters

The divergent compression-meaning strategies of LLMs and humans have significant implications for AI development. LLMs' aggressive compression may be what enables them to store vast amounts of knowledge so efficiently. On the other hand, it also contributes to their unpredictability, which makes them prone to failures such as prompt injection attacks and difficult to deploy in safety- and mission-critical applications without human oversight.

LLMs that are better aligned with human cognition would likely also make for more reliable and robust AI systems.

This raises a challenging question for the field: does achieving better human alignment require sacrificing some of this raw knowledge, or will it demand models that are orders of magnitude larger?

The path forward may not be just about scaling up. For example, the study finds that architectural design and pre-training objectives significantly influence a model’s conceptual abilities, as shown by the strong results of the comparatively small BERT models.

Some of these findings can open important avenues for future research. Rather than optimizing purely for next-token prediction, future efforts could explore objectives that encourage richer conceptual structures. As the authors conclude, progressing AI “from tokens to thoughts” will require embracing principles that cultivate this richer, context-aware conceptual structure.

A deeper alignment with human cognition is a key step toward building AI systems that are not only more capable but also more reliable and autonomous.

These are very good initial findings pitting processing efficiency against semantic precision. The study reported in the paper is not comprehensive, though. In addition to semantic precision, humans also excel in pragmatic appropriateness, which involves many parameters, including understanding the intentions of the speaker/producer and hearer/consumer, their level of general and specialized knowledge of the world and of language, and any biases or preferences. Our group views these requirements as foundational and seeks to find cognitively motivated ways of enhancing efficiency without paying too steep a price in semantic imprecision and pragmatic inappropriateness. See, e.g., https://direct.mit.edu/books/oa-monograph/5833/Agents-in-the-Long-Game-of-AIComputational