Exploring the emergent abilities of large language models

Large language models (LLMs) have become the center of attention and hype because of their seemingly magical ability to produce long stretches of coherent text, perform tasks they weren't trained on, and engage (to some extent) in conversation on topics once thought to be off-limits for computers.

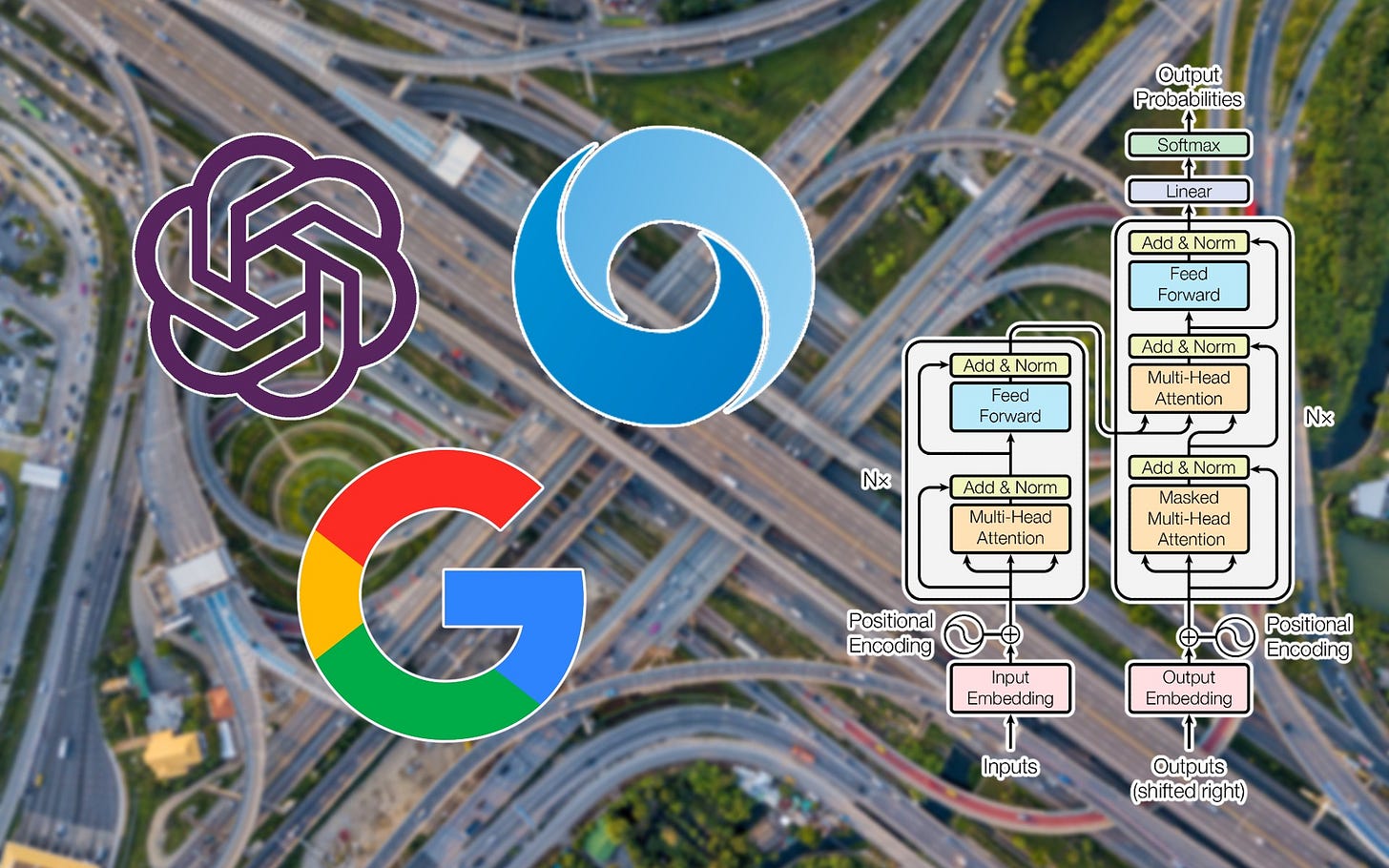

But there is still a lot to be learned about the way LLMs work and don’t work. A new study by researchers at Google, Stanford University, DeepMind, and the University of North Carolina at Chapel Hill explores novel tasks that LLMs can accomplish as they grow larger and are trained on more data.

The study sheds light on the relationship between the scale of large language models and their "emergent" abilities.

Read the full article on TechTalks.
