GPT-5: The model that fell victim to OpenAI's hype machine

OpenAI's GPT-5 is finally here, but a rocky rollout and mixed reviews have divided the community, creating a reality check for AI hype.

OpenAI released its long-awaited GPT-5 model on August 7, 2025, positioning it as the company's "smartest, fastest, most useful model yet." The launch, however, was met with a divided response. While the company touted a significant leap in intelligence, the tech community’s reaction ranged from praise for its new capabilities to criticism over a rocky rollout and a sense that progress, while tangible, may no longer be exponential.

The release has ignited a debate about whether the AI industry is entering a new, more mature phase in which incremental product improvements replace groundbreaking leaps in capability. Some see GPT-5 as a disappointing sign that the field is plateauing, but it may instead mark the start of an era of building AI applications at scale.

A system-wide overhaul

Unlike its predecessors, GPT-5 is not a single, monolithic model. OpenAI describes it as a "unified system" designed to balance performance and efficiency. At its core is a real-time router that assesses the complexity of a user's prompt. For most queries, it directs the request to a smart, fast model. For more difficult problems, it engages a deeper reasoning model, a mode OpenAI calls "GPT-5 thinking."

The router continuously learns from user behavior, such as when users manually switch models or how they rate responses, to improve its decision-making over time. This architectural shift represents a move toward more complex, multi-component AI systems that prioritize the user experience by automatically selecting the right tool for the job.
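OpenAI has not published how its router actually scores prompts, so the sketch below is only a toy illustration of the general idea. It estimates a prompt's "complexity", sends it to a fast tier or a reasoning tier, and nudges its decision threshold when a user overrides the choice. The tier names (`"fast"`, `"thinking"`), the keyword list, the scoring weights, and the `ToyRouter` class are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical keywords that hint a prompt needs multi-step reasoning.
# Illustrative only: OpenAI has not disclosed how its router scores prompts.
REASONING_HINTS = ("prove", "step by step", "debug", "optimize", "why")


@dataclass
class ToyRouter:
    """A toy router: score prompt complexity, then pick a model tier."""
    threshold: float = 0.5       # scores at or above this go to the reasoning tier
    learning_rate: float = 0.05  # how far one override moves the threshold

    def complexity(self, prompt: str) -> float:
        """Crude complexity score in [0, 1] from prompt length and keyword hints."""
        text = prompt.lower()
        length_score = min(len(text.split()) / 200, 1.0)
        hint_score = sum(hint in text for hint in REASONING_HINTS) / len(REASONING_HINTS)
        return 0.5 * length_score + 0.5 * hint_score

    def route(self, prompt: str) -> str:
        """Return the (hypothetical) tier name this prompt should go to."""
        return "thinking" if self.complexity(prompt) >= self.threshold else "fast"

    def feedback(self, routed_to: str, user_switched: bool) -> None:
        """Adjust the threshold when a user manually overrides the router."""
        if not user_switched:
            return
        if routed_to == "fast":
            # The user wanted deeper reasoning: lower the bar for "thinking".
            self.threshold = max(0.0, self.threshold - self.learning_rate)
        else:
            # The user found "thinking" unnecessary: raise the bar.
            self.threshold = min(1.0, self.threshold + self.learning_rate)


router = ToyRouter()
print(router.route("What is the capital of France?"))
print(router.route("Prove step by step why this algorithm is correct, then debug and optimize it"))
```

A production router would almost certainly use a learned classifier rather than keyword counts, but the feedback loop (user overrides shifting future routing decisions) is the part the article describes.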

OpenAI claims GPT-5 delivers substantial improvements in real-world applications. The company highlighted its advanced coding abilities, demonstrating that it could generate a fully working web application from a single-paragraph prompt. In creative writing, the model can now handle structurally ambiguous tasks like sustaining unrhymed iambic pentameter. It also features a new safety system called "safe completions," which aims to provide helpful answers within safety boundaries rather than issuing abrupt refusals. These upgrades are part of a broader effort to make the model more reliable: in its "thinking" mode, OpenAI reports roughly 80% fewer factual errors than o3, along with a significant drop in sycophantic, or overly agreeable, responses.

The benchmark battle

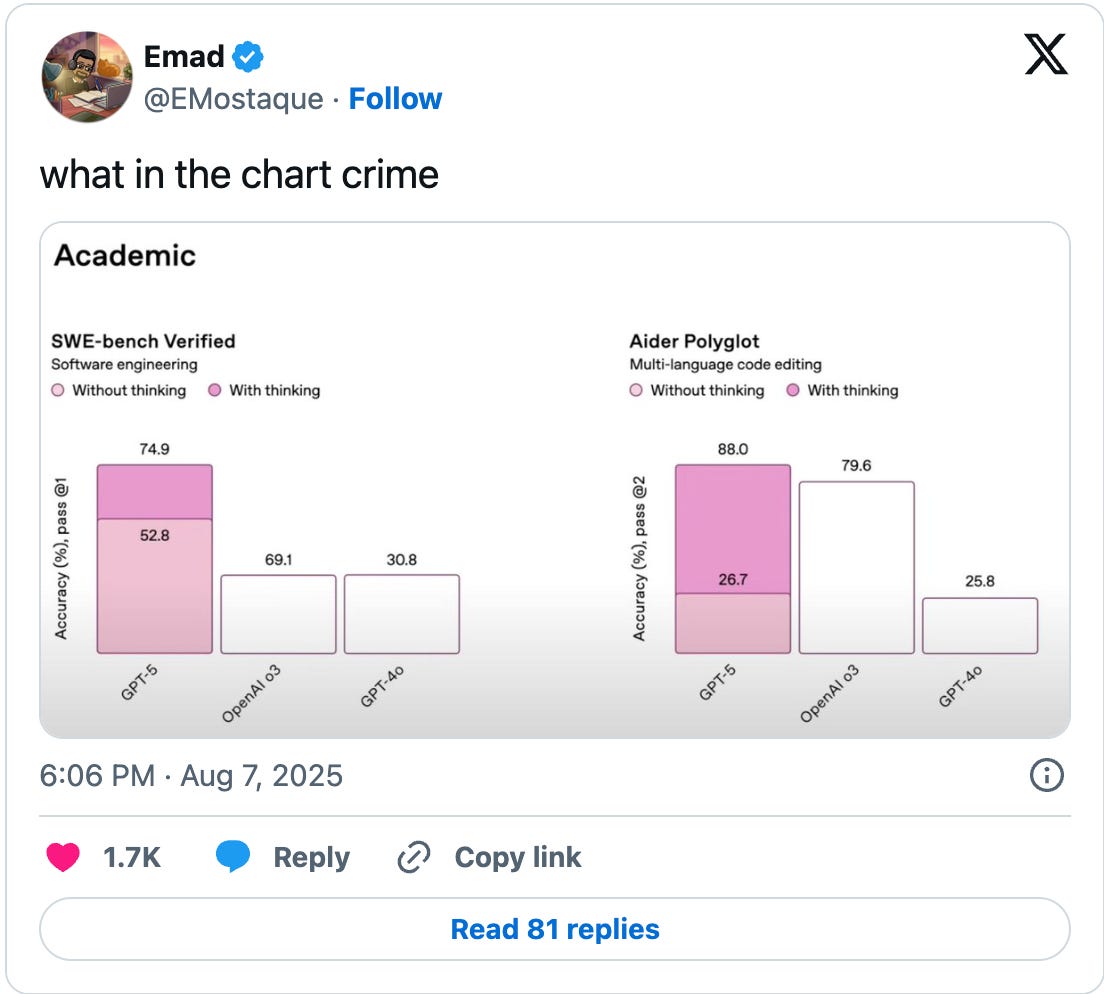

On paper, GPT-5's performance is formidable. It set new state-of-the-art scores on several academic benchmarks, achieving 94.6% on the AIME 2025 math competition and 88.4% on GPQA, a test of graduate-level science questions. In coding, it scored 74.9% on SWE-bench Verified. The model also took the top spot in every category of the LMArena leaderboard, a community-driven evaluation platform. These results paint a picture of a model that has pushed the frontier of AI capabilities.

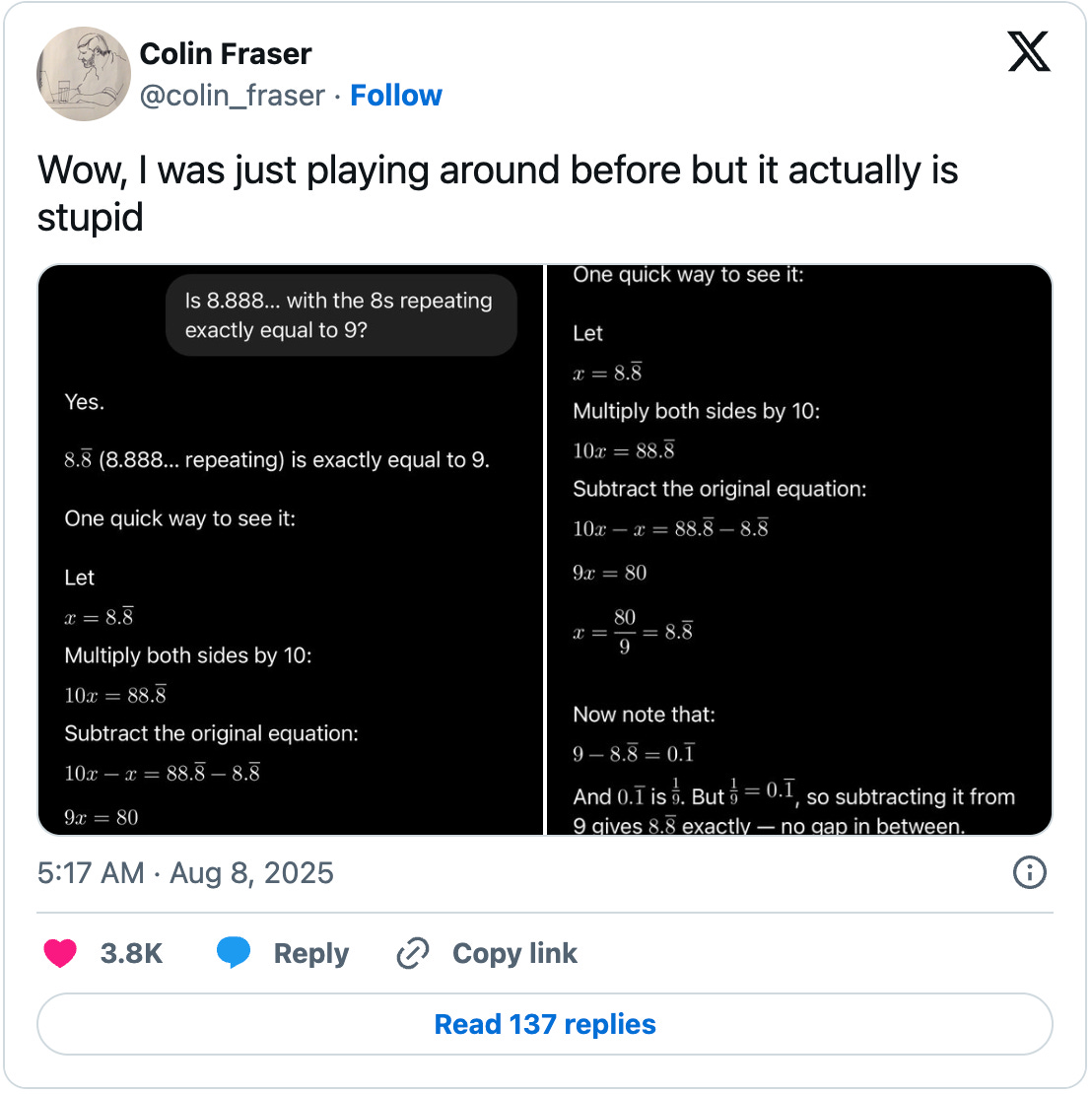

However, the benchmark scores do not tell the whole story. The launch was immediately followed by user reports of the model failing at simple math and logic problems that previous models handled correctly. Furthermore, an analysis of OpenAI's own system card revealed a more nuanced picture. On an internal benchmark designed to test whether AI could solve real research and engineering bottlenecks faced by OpenAI, GPT-5 solved only 2% of the problems, the same score as its predecessor, o3.

I ran my own limited tests with GPT-5. I found it to be decent for one-shot coding, especially for simple web designs modeled on well-known applications (which can be very practical and useful). However, for most of the reasoning and analysis tasks that I test models with, I found Gemini 2.5 Pro to be much more stable.

Intelligence for all (with limits)

In a significant strategic move, OpenAI made GPT-5 the new default model for all ChatGPT users, including those on the free tier. This decision gives nearly a billion people access to a frontier AI model. Access is, however, metered based on subscription level. Free users are limited to 10 messages every five hours, while Plus subscribers get 160 messages every three hours. Pro and Team subscribers receive unlimited access. This tiered approach allows OpenAI to manage server load while using its most advanced technology to cement its market leadership through mass adoption.

Unfortunately, OpenAI has removed other models from the model selector in the free and Plus tiers.

For developers, OpenAI has priced the GPT-5 API competitively. The main gpt-5 model costs $1.25 per million input tokens and $10 per million output tokens, matching Google's Gemini 2.5 Pro and undercutting Anthropic's Claude Sonnet 4. This pricing strategy puts downward pressure on the cost of intelligence across the industry, making it cheaper for companies to build applications on top of powerful AI. The launch also included smaller, even more affordable variants, gpt-5-mini and gpt-5-nano, further lowering the barrier to entry.
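To make the per-token pricing concrete, here is a small cost estimator. The gpt-5 prices come from the paragraph above; the Claude Sonnet 4 figures ($3 input, $15 output per million tokens) are Anthropic's list prices at the time of writing and are included only for comparison. The workload (1,000 requests of 2,000 input and 500 output tokens each) is a made-up example, and the sketch ignores cached-input discounts, batch pricing, and long-context price tiers.

```python
# List prices in USD per million tokens: (input, output).
# gpt-5 figures are from the article; Claude Sonnet 4 figures are Anthropic's
# published list prices. Both may change over time, and this sketch ignores
# cached-input discounts, batch pricing, and long-context tiers.
PRICES_PER_MILLION = {
    "gpt-5": (1.25, 10.00),
    "claude-sonnet-4": (3.00, 15.00),
}


def workload_cost(model: str, requests: int, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of `requests` calls with the given per-call token counts."""
    input_price, output_price = PRICES_PER_MILLION[model]
    total_in = requests * input_tokens
    total_out = requests * output_tokens
    return (total_in * input_price + total_out * output_price) / 1_000_000


# Hypothetical workload: 1,000 requests, 2,000 input + 500 output tokens each.
for model in PRICES_PER_MILLION:
    cost = workload_cost(model, requests=1_000, input_tokens=2_000, output_tokens=500)
    print(f"{model}: ${cost:.2f}")
```

For this workload the script prints $7.50 for gpt-5 and $13.50 for Claude Sonnet 4. Output tokens dominate the bill in both cases, which is why the output price matters most for chatty, generation-heavy applications.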

The company has delivered a genuinely useful model (albeit not AGI) at a very affordable price. Unfortunately for OpenAI, the hype it had built around GPT-5 overshadowed the significance of that pricing.

A rocky rollout

The GPT-5 launch was not without its flaws. The live-streamed presentation contained mislabeled graphs and technical bugs.

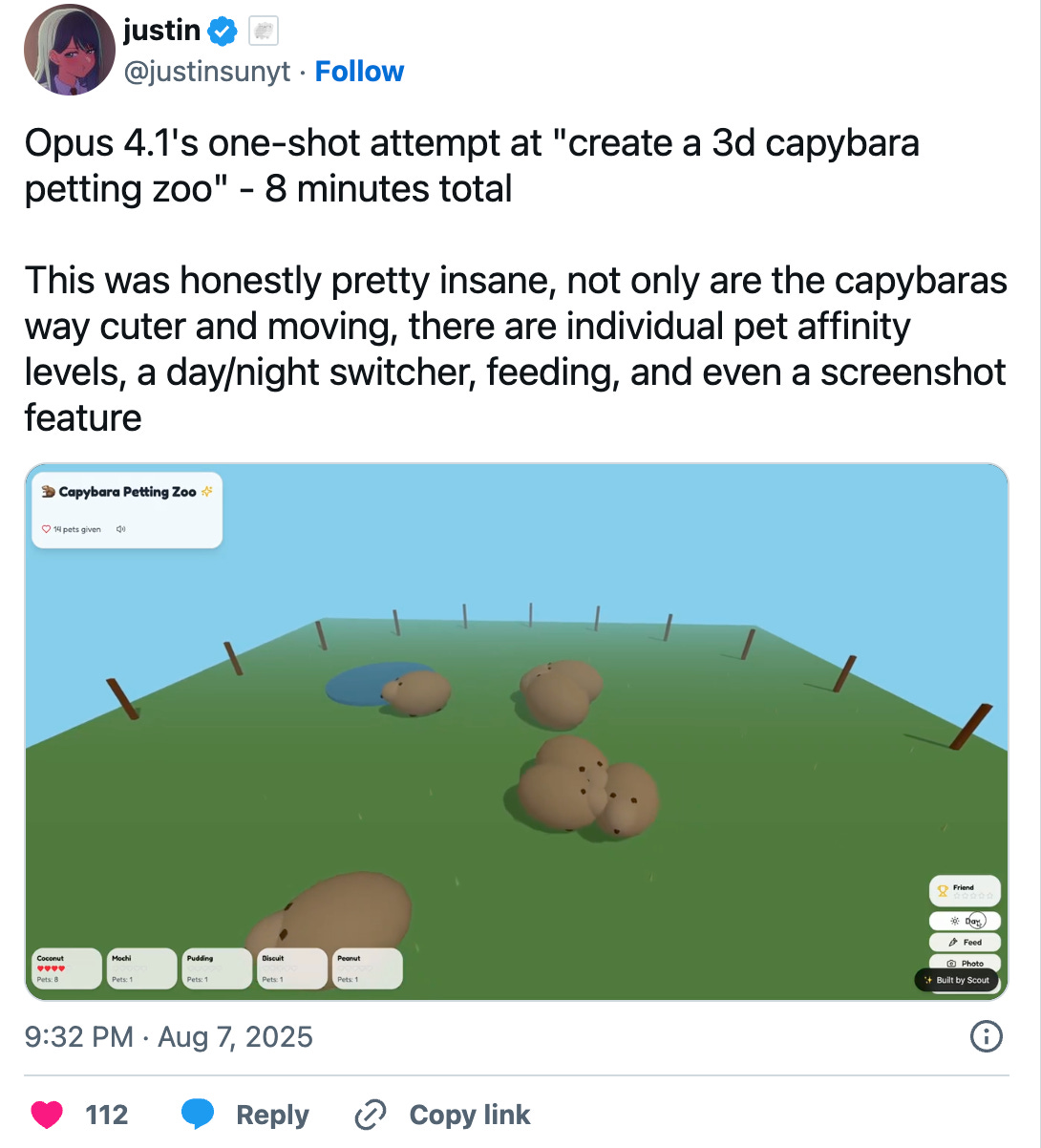

More concerning for users was the immediate anecdotal evidence of the model's shortcomings. Power users and data scientists quickly found that competing models, such as Anthropic's Claude Opus 4.1, sometimes performed better on real-world coding tasks. The new automatic router also drew criticism, with many users complaining that it defaulted to the faster, less capable model too often, even for queries that would benefit from deeper reasoning.

This combination of launch hiccups and performance inconsistencies led to a lukewarm reception within the AI community. While some early testers praised the model's intelligence and steerability, the prevailing sentiment was one of disappointment, especially given the years of hype (I mean, Sam Altman literally posted a Death Star photo before the launch). The rocky start suggests that while GPT-5 is a solid upgrade, it may not be the game-changing home run that previous OpenAI releases like GPT-4 were.

Has the exponential curve flattened?

The GPT-5 release raises a fundamental question in the tech world: are we witnessing the end of exponential growth in AI capabilities? AI researcher Yannic Kilcher described the current moment as the "Samsung Galaxy era of LLMs," where each new model offers incremental improvements (a slightly better camera or a faster processor) rather than groundbreaking new features. According to this view, the industry's focus has shifted from the pure pursuit of artificial general intelligence (AGI) to productization, with companies gearing models toward specific, money-making use cases like coding and tool calling.

The alternative perspective is that progress has not stalled but has merely changed its form. While gains on benchmarks may be slowing, the untapped value of existing AI capabilities is still enormous. By making state-of-the-art AI cheaper and more accessible, GPT-5 could fuel a new wave of adoption and innovation.

In this view, the future of AI lies not in creating ever-larger models but in building more efficient systems that can act as "tool-calling glues," intelligently orchestrating other digital services to perform complex tasks. GPT-5, with its new architecture and competitive pricing, appears to be a step in this direction.

OpenAI became a victim of its own hype machine with the launch of GPT-5. But in reality, we have barely scratched the surface of what is possible with current AI systems.

The inflated expectations and subsequent underwhelming release of GPT-5 have caused some to exaggerate any signs of slowing AI progress far beyond what the evidence supports.

It feels like GPT-5 is less "moon landing" and more "new iPhone": a solid upgrade, but the hype set it up to disappoint.