How Differential Transformer improves long-context RAG

Sometimes, the solution is a simple idea. You just need to know what questions to ask.

Differential Transformer, a new LLM architecture by researchers at Microsoft Research and Tsinghua University, improves RAG performance by amplifying attention to relevant context and filtering out noise.

Multiple studies show that the classic Transformer architecture suffers from the “lost-in-the-middle” phenomenon, where its attention mechanism doesn’t give enough weight to the tokens that are relevant to the task at hand.

The culprit, according to the researchers, is the softmax function used to calculate attention, which causes the LLM to be distracted by irrelevant content in its prompt. The problem becomes worse as the context grows longer.

Diff Transformer, the proposed architecture, solves this problem with what the researchers call “differential attention,” a mechanism that cancels out noise and amplifies the attention given to the most relevant parts of the input.

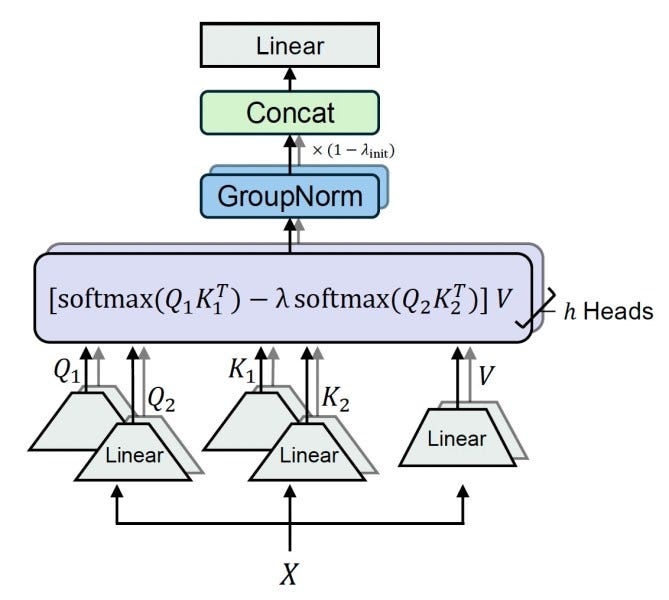

The Transformer uses three vectors to compute attention: query, key, and value. The classic attention mechanism computes a single softmax attention map from the full query and key vectors, then uses that map to weight the values.
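To make the classic mechanism concrete, here is a minimal single-head sketch in NumPy. It is an illustration rather than the researchers' code: the function names, the toy shapes, and the random inputs are all assumptions made for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def softmax_attention(Q, K, V):
    # One attention map computed from the full query and key vectors.
    d = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d)
    weights = softmax(scores, axis=-1)
    return weights @ V, weights

# Toy inputs (hypothetical sizes): 6 tokens, 8-dimensional vectors.
rng = np.random.default_rng(0)
n_tokens, d_model = 6, 8
Q = rng.normal(size=(n_tokens, d_model))
K = rng.normal(size=(n_tokens, d_model))
V = rng.normal(size=(n_tokens, d_model))

out, weights = softmax_attention(Q, K, V)
```

Every row of `weights` sums to 1 and every entry is strictly positive, so each token receives some attention whether or not it is relevant. Spread over a long prompt, those small weights on irrelevant tokens are the noise the researchers describe.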

Differential attention partitions the query and key vectors into two groups and calculates two separate softmax attention maps. The difference between these two maps is then used as the attention score. This process eliminates common noise, encouraging the model to focus on information that is pertinent to the input.
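The split-and-subtract idea can be sketched in a few lines of NumPy. This is a simplified, single-head illustration under stated assumptions, not the released implementation: the function names and toy shapes are hypothetical, and `lam` is a fixed constant here, whereas the paper describes the second map as being scaled by a learnable scalar. Multi-head handling and normalization are left out.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def differential_attention(Q, K, V, lam=0.8):
    # Split the query and key vectors into two groups along the feature axis.
    d = Q.shape[-1] // 2
    Q1, Q2 = Q[:, :d], Q[:, d:]
    K1, K2 = K[:, :d], K[:, d:]
    # Two separate softmax attention maps, one per group.
    A1 = softmax(Q1 @ K1.T / np.sqrt(d))
    A2 = softmax(Q2 @ K2.T / np.sqrt(d))
    # The (weighted) difference of the two maps is used to mix the values.
    # lam is a fixed constant here (an assumption made for this sketch).
    diff = A1 - lam * A2
    return diff @ V, diff

# Toy inputs (hypothetical sizes): 5 tokens, 8-dimensional vectors.
rng = np.random.default_rng(0)
n_tokens, d_model = 5, 8
Q = rng.normal(size=(n_tokens, d_model))
K = rng.normal(size=(n_tokens, d_model))
V = rng.normal(size=(n_tokens, d_model))

out, diff = differential_attention(Q, K, V, lam=0.8)

# If both groups carry exactly the same pattern, it cancels completely.
half = d_model // 2
Q_same = np.concatenate([Q[:, :half], Q[:, :half]], axis=1)
K_same = np.concatenate([K[:, :half], K[:, :half]], axis=1)
_, cancelled = differential_attention(Q_same, K_same, V, lam=1.0)
```

Unlike a plain softmax map, `diff` can contain zeros and negative entries, so attention that shows up in both maps is subtracted away instead of being spread across the context. The `cancelled` map is the extreme case: when the two maps are identical and `lam` is 1, nothing is left.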

The mechanism is similar to how noise-canceling headphones or differential amplifiers work, where the difference between two signals cancels out common-mode noise.
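The analogy is easy to check numerically. In this toy sketch (all names and values are made up for illustration), two lines carry opposite halves of a signal plus the same noise, and subtracting one from the other recovers the signal.

```python
import numpy as np

rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 200)

signal = np.sin(2 * np.pi * 5 * t)           # the part we care about
noise = rng.normal(scale=0.5, size=t.shape)  # noise shared by both lines

# Each line carries half of the signal (with opposite sign) plus the same noise.
line_a = 0.5 * signal + noise
line_b = -0.5 * signal + noise

# Subtracting the lines cancels the shared noise and keeps the signal.
recovered = line_a - line_b
```

Neither line looks like the clean signal on its own, but `recovered` matches `signal` up to floating-point error because the noise term appears identically in both inputs.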

The researchers evaluated Diff Transformer on models from 3 billion to 13 billion parameters and context lengths of up to 64k tokens. Diff Transformer consistently outperformed the classic Transformer architecture across different benchmarks with considerably fewer parameters and training tokens.

The researchers have released the code for Diff Transformer, implemented with different attention and optimization mechanisms. The architecture can help improve performance across various LLM applications, especially when using very long prompts embedded with a lot of knowledge.

Read more about Diff Transformer and my interview with the author on VentureBeat

Read the paper on arXiv

Check out the code on GitHub