How LLMs can internalize System 2 techniques

A new paper from Meta shows how LLMs can distill and automate System 2 techniques

System 1 and System 2 refer to two distinct modes of thinking. System 1 thinking is fast, intuitive, and automatic. We use it to recognize patterns and make quick judgments. System 2 thinking, on the other hand, is slow, deliberate, and analytical. It requires conscious effort and is used for complex reasoning tasks, such as solving mathematical equations, driving in unfamiliar neighborhoods, and planning a trip.

Zero-shot prompting with LLMs is considered analogous to System 1 thinking: the model responds quickly but struggles with tasks that require deliberate reasoning and planning. Prompting techniques such as Chain of Thought (CoT), System 2 Attention, and Rephrase and Respond are known to improve the ability of LLMs to perform System 2 tasks.

However, System 2 prompting techniques are slow and expensive because they generate many intermediate tokens and, in some cases, require multiple calls to the LLM.

In a new paper, researchers at Meta FAIR present “System 2 distillation,” a technique that enables LLMs to internalize System 2 techniques. System 2 distillation is the LLM equivalent of the human mind’s ability to transfer skills from System 2 to System 1. For example, driving, dribbling, tying shoelaces, and navigating an unknown neighborhood require conscious effort at first. But as you repeat them, you get to do them subconsciously without actively thinking about them.

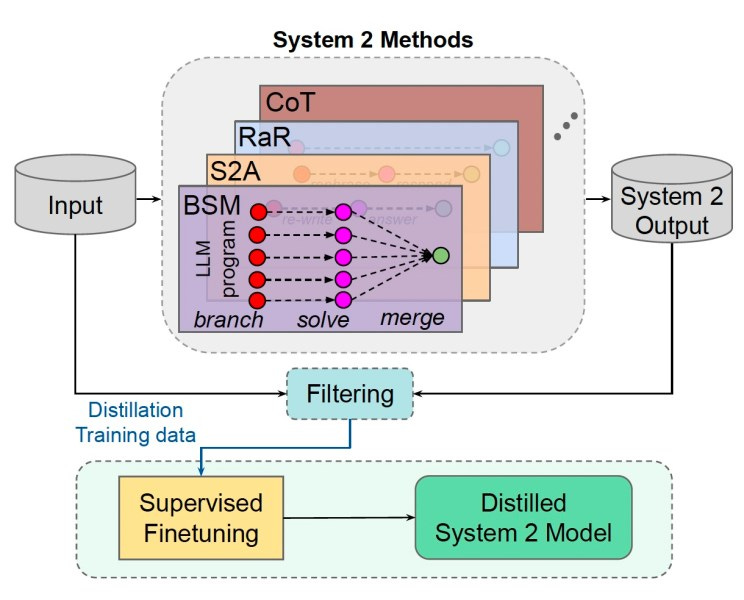

System 2 distillation follows a similar process and works in two stages. In the first stage, the model performs reasoning tasks using a System 2 prompting technique (e.g., CoT). The answers are then verified, and only the correct ones are kept. The reasoning tokens are discarded, leaving only the question and the final answer. These question-answer pairs form the distillation dataset.
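The first stage can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the paper's implementation: `sample` is a hypothetical function that runs a System 2 prompt and returns a `(reasoning, answer)` pair, and the verification step is assumed to be a majority vote over several samples, since the description above does not say how answers are checked. The names `n_samples` and `min_agreement` are also made up for this example.

```python
from collections import Counter
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical interface: runs a System 2 prompt (e.g. CoT) on a question
# and returns (reasoning_text, final_answer) for one sampled response.
System2Sampler = Callable[[str], Tuple[str, str]]


def verified_answer(question: str, sample: System2Sampler,
                    n_samples: int = 5,
                    min_agreement: float = 0.6) -> Optional[str]:
    """Return the majority answer if enough samples agree, else None.

    Assumption: agreement across samples stands in for "the answer is correct".
    """
    answers = [sample(question)[1].strip() for _ in range(n_samples)]
    answer, count = Counter(answers).most_common(1)[0]
    return answer if count / n_samples >= min_agreement else None


def build_distillation_dataset(questions: List[str], sample: System2Sampler,
                               n_samples: int = 5,
                               min_agreement: float = 0.6) -> List[Dict[str, str]]:
    """Keep only verified (question, answer) pairs; reasoning is never stored."""
    dataset = []
    for question in questions:
        answer = verified_answer(question, sample, n_samples, min_agreement)
        if answer is not None:
            # The reasoning tokens are dropped here: only Q and A survive.
            dataset.append({"question": question, "answer": answer})
    return dataset
```

Questions on which the samples disagree too much are simply left out of the dataset, so the model is never trained on an answer that failed the check.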

In the second stage, the model is fine-tuned on the distillation dataset. The intuition is that if the model is trained on the question and the answer obtained after the System 2 process, it will internalize the reasoning process.
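To make the second stage concrete, here is a minimal sketch of how the distillation dataset might be turned into fine-tuning examples. The `prompt`/`completion` field names and the JSONL layout are assumptions chosen for illustration; an actual fine-tuning framework will define its own schema. The point is that the training target is the final answer alone, with no reasoning text in between.

```python
import json
from typing import Dict, List


def to_finetuning_records(dataset: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Map each distilled pair to a plain prompt -> answer training example.

    Assumed schema: {"prompt": ..., "completion": ...}. The prompt is the
    bare question (no System 2 instructions), and the completion is the
    verified answer only.
    """
    return [
        {"prompt": ex["question"], "completion": ex["answer"]}
        for ex in dataset
    ]


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize the records as one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


if __name__ == "__main__":
    distilled = [{"question": "What is 2 + 2?", "answer": "4"}]
    print(to_jsonl(to_finetuning_records(distilled)))
```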

The researchers’ experiments with System 2 distillation and several prompting techniques show that the models become better at performing the task while generating fewer tokens. It remains an open question, however, how System 2 distillation affects the model’s broader performance on other tasks.

Still, this can be an important optimization technique for LLMs that are meant to perform a specific task in a pipeline.

Read the full paper on arXiv.

Read more about System 2 distillation on VentureBeat.