How Nvidia solved the accuracy tradeoff of training 4-bit LLMs

NVFP4 allows training 4-bit LLMs that achieve FP8-level accuracy while slashing memory and compute requirements.

Researchers at Nvidia have developed a new approach to train large language models (LLMs) in 4-bit format while preserving their stability and accuracy. The new technique, called NVFP4, makes it possible to train quantized models that match the performance of models trained in 8-bit precision while using half the memory and a fraction of the compute.

The success of NVFP4 shows a path toward cutting the costs of AI by running leaner models that match the performance of larger ones. It can also pave the way for a future where the costs of training LLMs will drop to a point where training custom models becomes more accessible.

The challenge of quantization

At their core, neural networks are massive mathematical functions that process information by performing calculations on large matrices of numbers, or tensors. The precision of these numbers (the number of bits used to store each one) directly affects the model’s performance and resource requirements. Higher-precision formats like 32-bit floating point (FP32) or 16-bit brain floating point (BF16) offer high accuracy but demand significant memory and computational power. Quantization is a technique that reduces the number of bits used to represent these numbers, making models smaller, faster, and more energy-efficient. The main challenge of quantization is to reduce the size of models while minimizing accuracy loss.
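To make the trade-off concrete, here is a minimal sketch of a quantize-and-restore round trip. It uses simple symmetric integer quantization as a stand-in, not the floating-point formats discussed in this article, and the function names and sample values are illustrative assumptions.

```python
def quantize_dequantize(values, bits):
    """Symmetric uniform quantization to signed integers with `bits` bits,
    followed by conversion back to floats."""
    levels = 2 ** (bits - 1) - 1              # 127 for 8 bits, 7 for 4 bits
    amax = max(abs(v) for v in values)        # largest magnitude in the tensor
    scale = amax / levels if amax > 0 else 1.0
    return [round(v / scale) * scale for v in values]


def max_abs_error(original, restored):
    """Worst-case difference between original and restored values."""
    return max(abs(x - y) for x, y in zip(original, restored))


# Hypothetical "tensor": 100 evenly spaced values between -1 and 1.
values = [-1.0 + 2.0 * i / 99 for i in range(100)]

err8 = max_abs_error(values, quantize_dequantize(values, 8))
err4 = max_abs_error(values, quantize_dequantize(values, 4))
print(f"max error at 8 bits: {err8:.4f}")
print(f"max error at 4 bits: {err4:.4f}")
```

Fewer bits means a coarser grid of representable values, so the worst-case rounding error grows as the bit width shrinks.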

As LLMs have grown, 8-bit floating point formats (FP8 and MXFP8) have become a popular way to accelerate training by balancing performance with resource savings. The smaller 4-bit floating point format (FP4) can halve memory usage again and further boost performance on modern hardware. However, this transition is not simple. Squeezing numbers into just 4 bits introduces significant challenges, often leading to a trade-off between efficiency and accuracy. Existing 4-bit formats like MXFP4 have struggled to match the performance of their 8-bit counterparts, making them less practical for training state-of-the-art models.
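The memory arithmetic behind these formats is simple enough to sketch. The example below is a rough estimate only: it counts raw storage for the values themselves and ignores scale factors, optimizer state, and activations. The 12-billion parameter count is borrowed from the model discussed later in this article, and the helper name is hypothetical.

```python
def weight_bytes(num_params, bits_per_value):
    """Raw storage for `num_params` values at the given bit width."""
    return num_params * bits_per_value / 8


params = 12_000_000_000  # 12B parameters, used here purely as an example size

for name, bits in [("FP32", 32), ("BF16", 16), ("FP8", 8), ("FP4", 4)]:
    gigabytes = weight_bytes(params, bits) / 1e9
    print(f"{name}: {gigabytes:.0f} GB")
```

Each step down in bit width halves the raw footprint, which is why moving from 8 bits to 4 bits is attractive despite the accuracy risk.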

How NVFP4 works

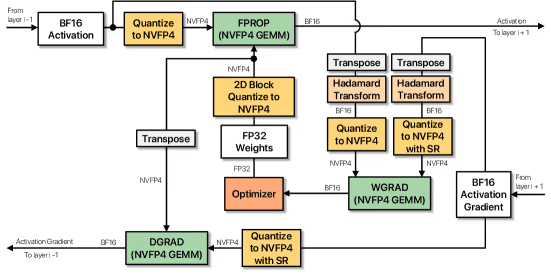

NVFP4 is designed to fix the instability of other 4-bit formats. With only 4 bits, you can represent just 16 distinct values. This extremely limited range makes the format highly sensitive to outliers (unusually large or small numbers). During the conversion from a higher-precision format, these outliers can distort the entire set of values, leading to a loss of information. NVFP4 introduces a more sophisticated two-level scaling system that better preserves the original distribution of numbers.
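The outlier problem, and why finer-grained scaling helps, can be illustrated with a small sketch. This is not Nvidia's implementation. It assumes the common E2M1 layout for 4-bit floats (magnitudes 0, 0.5, 1, 1.5, 2, 3, 4, 6) and a block size of 16, neither of which is stated in this article, and it keeps the scale factors as ordinary Python floats rather than modeling how the real format stores them.

```python
# Magnitudes assumed representable by a 4-bit float (sign handled separately).
FP4_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = 6.0


def to_fp4(x):
    """Round x to the nearest grid value, saturating at +/-6."""
    mag = min(abs(x), FP4_MAX)
    nearest = min(FP4_GRID, key=lambda g: abs(g - mag))
    return nearest if x >= 0 else -nearest


def quantize_block(values):
    """Quantize with one shared scale so the largest magnitude maps to 6."""
    amax = max(abs(v) for v in values)
    scale = amax / FP4_MAX if amax > 0 else 1.0
    return [to_fp4(v / scale) * scale for v in values]


def quantize_blocked(values, block_size):
    """Quantize each block of `block_size` values with its own scale."""
    out = []
    for i in range(0, len(values), block_size):
        out.extend(quantize_block(values[i:i + block_size]))
    return out


def mean_abs_error(original, restored):
    return sum(abs(x - y) for x, y in zip(original, restored)) / len(original)


# Hypothetical data: 32 small values with a single large outlier.
values = [0.01 * (i + 1) for i in range(32)]
values[20] = 50.0

one_scale = quantize_block(values)          # one scale for everything
per_block = quantize_blocked(values, 16)    # one scale per 16 values

print("error with a single scale:", mean_abs_error(values, one_scale))
print("error with per-block scales:", mean_abs_error(values, per_block))
```

With a single scale, the outlier stretches the grid so far that every small value rounds to zero. With per-block scales, only the block containing the outlier is affected, and the other block keeps most of its detail.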

Beyond the format itself, the researchers introduced a full training recipe and architectural recommendations that enable NVFP4 to achieve accuracies comparable to FP8. A key part of this methodology is a mixed-precision strategy. Most layers in the model are computed in NVFP4 to maximize efficiency, but a small fraction of numerically sensitive layers are kept in a higher-precision format like BF16. This hybrid approach ensures training remains stable while taking advantage of the benefits of 4-bit computation. The researchers also adjusted how gradients (the signals used to update the model during training) are calculated to reduce the systematic errors, or bias, that quantization can introduce.
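The article does not name the exact technique used to reduce gradient bias. One widely used option is stochastic rounding, and the sketch below assumes it purely for illustration. It also assumes the E2M1 grid of 4-bit values, and the function names are hypothetical.

```python
import random

# Assumed set of representable 4-bit float values (E2M1 layout).
FP4_GRID = [-6.0, -4.0, -3.0, -2.0, -1.5, -1.0, -0.5, 0.0,
            0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def round_nearest(x):
    """Deterministic rounding: always pick the closest grid value."""
    x = max(-6.0, min(6.0, x))
    return min(FP4_GRID, key=lambda g: abs(g - x))


def round_stochastic(x, rng):
    """Round up or down at random, with probability proportional to
    proximity, so the result equals x on average."""
    x = max(-6.0, min(6.0, x))
    lo = max(g for g in FP4_GRID if g <= x)
    hi = min(g for g in FP4_GRID if g >= x)
    if lo == hi:
        return lo
    p_up = (x - lo) / (hi - lo)
    return hi if rng.random() < p_up else lo


rng = random.Random(0)   # fixed seed so the run is repeatable
x = 0.6                  # sits between grid points 0.5 and 1.0
n = 20000

mean_nearest = sum(round_nearest(x) for _ in range(n)) / n
mean_stochastic = sum(round_stochastic(x, rng) for _ in range(n)) / n

print("true value:", x)
print("average after nearest rounding:", mean_nearest)
print("average after stochastic rounding:", mean_stochastic)
```

Nearest rounding pushes 0.6 to 0.5 every time, a systematic error that accumulates over many updates. Stochastic rounding is noisier on any single value, but its average stays close to the true value.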

Putting NVFP4 into practice

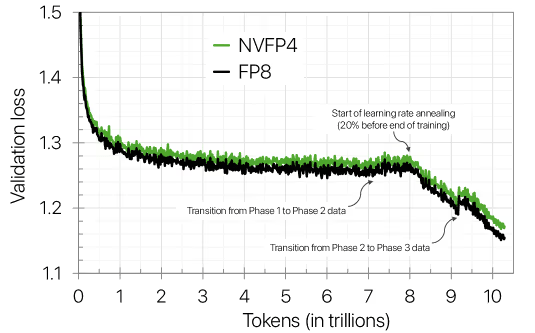

The Nvidia team applied NVFP4 and their training recipe to a 12-billion-parameter hybrid Mamba-Transformer model. Their experiments show that the model trained with NVFP4 performed nearly identically to a baseline model trained with the more resource-intensive FP8 format. The training loss tracked the FP8 baseline closely, with only a tiny divergence appearing late in the process. More importantly, this small gap in loss did not affect the model’s performance on a wide range of downstream tasks, including knowledge-intensive reasoning, mathematics, and commonsense understanding. The only exception was in coding tasks, where the NVFP4 model fell slightly behind.

When compared to the alternative 4-bit format, MXFP4, the benefits of NVFP4 became even clearer. In an experiment with an 8-billion-parameter model, NVFP4 converged to a lower training loss than MXFP4. To match the performance achieved by NVFP4 after training on 1 trillion tokens, the MXFP4 model needed to be trained on 36% more tokens, increasing training time and cost.
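As a quick back-of-the-envelope check on what that 36% means in absolute terms, using only the figures quoted above:

```python
nvfp4_tokens = 1_000_000_000_000   # 1 trillion tokens, as reported
extra_percent = 36                 # additional tokens MXFP4 needed, as reported

extra_tokens = nvfp4_tokens * extra_percent // 100
mxfp4_tokens = nvfp4_tokens + extra_tokens

print(f"extra tokens for MXFP4: {extra_tokens:,}")
print(f"total tokens for MXFP4: {mxfp4_tokens:,}")
```

That works out to roughly 360 billion additional training tokens for MXFP4 to reach the same loss.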

The work represents a significant milestone in making AI development more efficient and accessible. As the paper concludes, “This marks, to our knowledge, the first successful demonstration of training billion-parameter language models with 4-bit precision over a multi-trillion-token horizon, laying the foundation for faster and more efficient training of future frontier models.”