How self-invoking code benchmarks test LLMs for practical programming tasks

Practical uses of LLMs in coding involve helping developers solve incrementally more difficult tasks that build on top of existing code.

LLMs have already saturated most simple coding benchmarks such as HumanEval and MBPP. A new paper by Yale University and Tsinghua University suggests the next step in evaluating LLMs on more complex coding tasks. They suggest “self-invoking code generation” problems as a way of measuring how LLMs can reason over and reuse existing code or the code they have generated themselves.

Self-invoking is important because in most practical programming settings, developers must repurpose and reuse existing code to solve more complex problems. In contrast, simple benchmarks such as HumanEval and MBPP focus on generating single functions that solve a very specific problem.

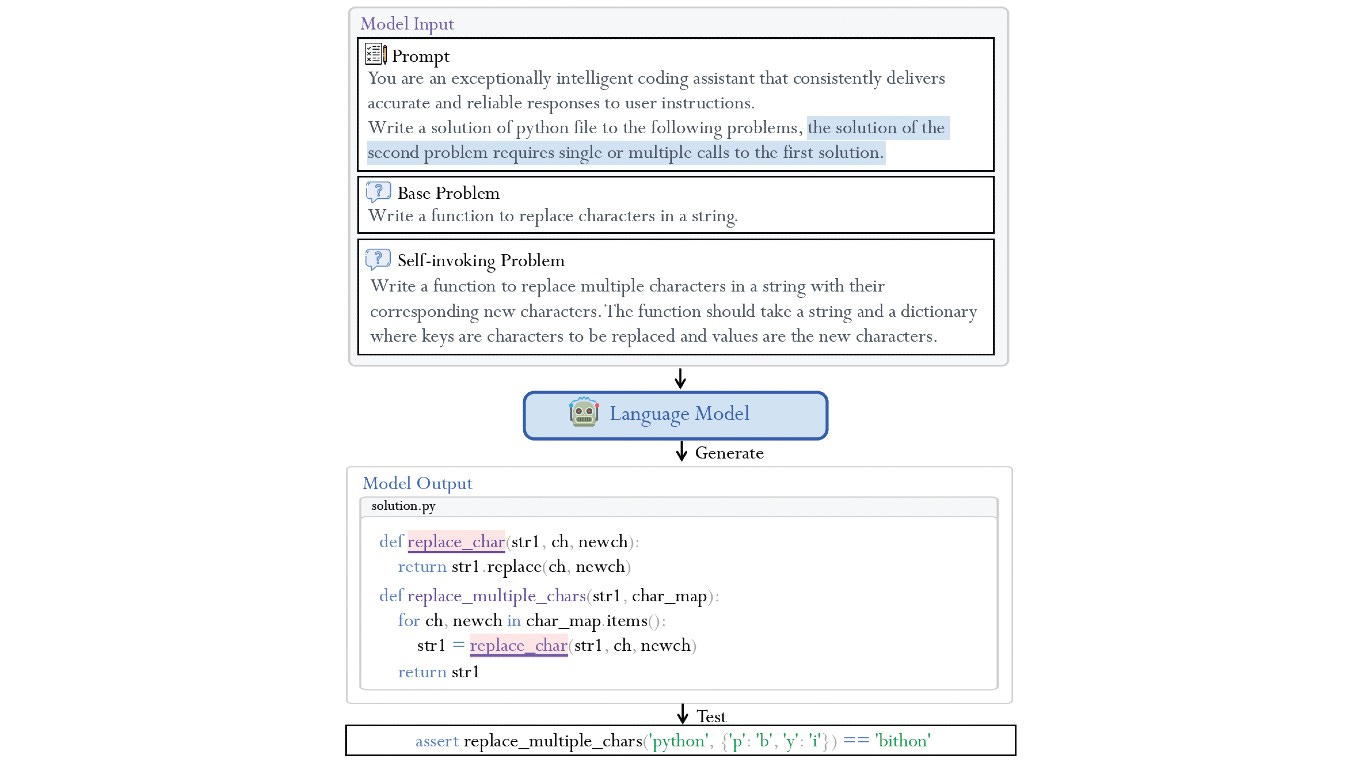

The researchers created two new benchmarks, HumanEval Pro and MBPP Pro, which add self-invoking code generation elements to the problems in the original datasets. For example, if the original problem was to write a function that replaces all occurrences of a given character in a string with a new character, the extended problem would be to write a function that changes occurrences of multiple characters in a string with their given replacements.

The researchers tested HumanEval Pro and MBPP Pro on GPT-4o, OpenAI o1-mini, Claude 3.5 Sonnet, and other models.

The results show a significant gap between simple coding benchmarks and self-invoking code generation tasks. For example, with a single generation (pass@1), o1-mini achieves 96.2% on HumanEval but only 76.2% on HumanEval Pro.

Interestingly, there is a significant in self-coding capabilities between base models and instruction fine-tuned models. But that gap is not present in self-invoking code generation abilities, suggesting that we need to rethink how we train base models for coding and reasoning tasks.

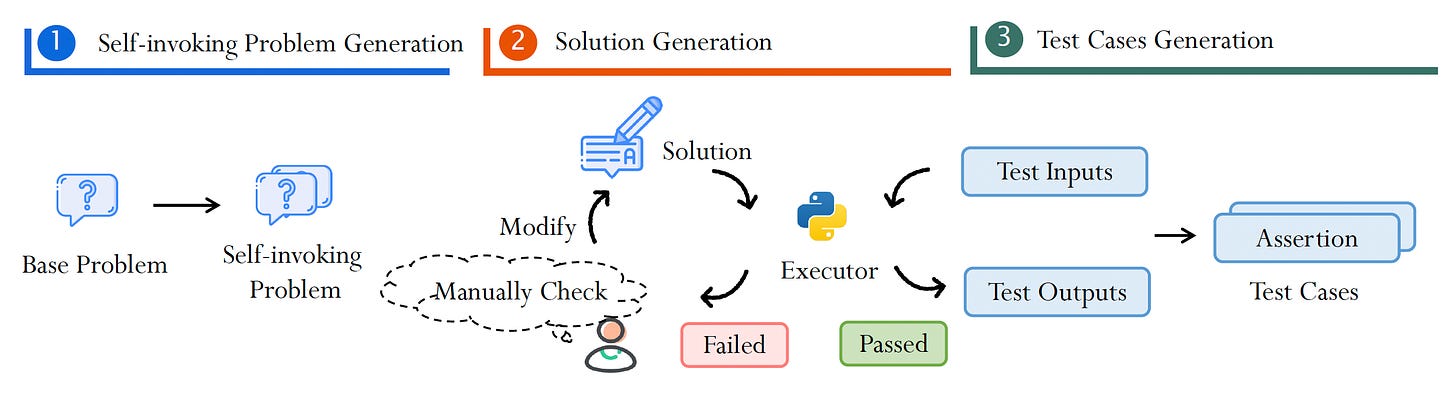

The researchers also propose a technique to automatically repurpose existing coding benchmarks for self-invoking code generation. This approach minimizes the need for manual code review and makes it easier to generate self-invoking coding examples with less effort.

HumanEval Pro and MBPP Pro are not the only advanced coding benchmarks. SWE-Bench evaluates models’ capabilities in end-to-end software engineering tasks that require a wide range of skills such as using external libraries and files, and managing DevOps tools. SWE-Bench is a very difficult benchmark and even the most advanced models are showing modest performance. It is also difficult to trace down the specific capabilities that models are missing when they fail at SWE-Bench tasks.

On the other hand, self-invoking code generation evaluates a very specific type of reasoning ability: using existing code within a module when solving problems. It is also closer to the kinds of use cases AI assistants have these days. Instead of expecting LLMs to solve entire pull requests in one go, developers are usually using them as sidekicks that help them complete specific coding tasks one step at a time. This makes it a very useful proxy for evaluating and choosing LLMs for real-world coding tasks.