How to teach LLMs to self-correct their answers

DeepMind has come up with an interesting method to force LLMs to use their internal knowledge to correct their mistakes.

When dealing with complicated tasks, LLMs might fail on the first try but get the correct answer if given the chance to review and correct their output (we humans often need to rethink and review our answers).

Self-correction has become a big topic of research lately, and many models are incorporating it into their inference process. A new paper by DeepMind introduces Self-Correction via Reinforcement Learning (SCoRe), a technique that enables LLMs to use their intrinsic knowledge and self-generated data to correct their answers.

SCoRe is an alternative to current self-correction techniques, which usually assume access to external tools or oracles to verify the model's answers.

This assumption is problematic for two reasons: 1) in real-world applications, the model does not always have access to an oracle to verify its answers, and 2) the model often already has the knowledge required to self-correct its answers and just needs to be nudged in the right direction.

Moreover, the DeepMind team's experiments with self-correction techniques that rely on supervised fine-tuning (SFT) show that these methods push the model to optimize for the final response instead of learning the intermediate steps of self-correction.

SCoRe trains a single model to both generate responses and correct its own errors without relying on external feedback. Importantly, SCoRe achieves this by training the model entirely on self-generated data, eliminating the need for external knowledge.

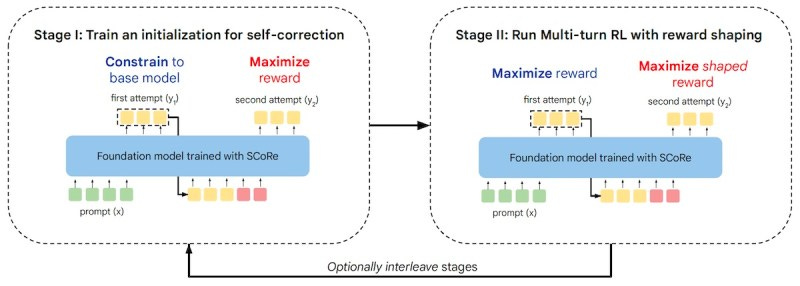
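To make the "generate, then correct" setup concrete, here is a minimal sketch of a two-attempt inference loop with no external feedback. The `generate` callable, the prompt layout, and the wording of `REVISION_PROMPT` are all hypothetical placeholders for illustration and are not taken from the paper.

```python
from typing import Callable

# Hypothetical revision instruction; the actual wording is an assumption.
REVISION_PROMPT = (
    "There might be an error in the solution above. "
    "Please review it and give a corrected final answer."
)


def two_attempt_answer(generate: Callable[[str], str], question: str) -> tuple[str, str]:
    """Return (first_attempt, second_attempt) from the same model.

    `generate` is any function that maps a prompt to a completion.
    No oracle or tool is consulted between the two attempts.
    """
    first = generate(question)
    followup = f"{question}\n\nPrevious attempt:\n{first}\n\n{REVISION_PROMPT}"
    second = generate(followup)
    return first, second
```

The same model is called twice, and the second call only sees the question, the first attempt, and a generic instruction to review it.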

SCoRe uses a two-stage training process. The first stage optimizes the model's correction performance while ensuring that the model's initial attempts remain close to the base model's outputs. This prevents the kind of collapse seen in other techniques.
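One way to read the first stage is as a penalized objective: reward the second attempt, and subtract a penalty when the first-attempt distribution drifts from the base model. The sketch below assumes a KL-divergence penalty over toy discrete distributions and a hypothetical weight `beta`; the exact objective used in the paper may differ.

```python
import math


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) for two discrete distributions over the same outcomes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def stage_one_objective(
    second_attempt_reward: float,
    first_attempt_policy: list[float],
    first_attempt_base: list[float],
    beta: float = 1.0,  # assumed penalty weight, not a value from the paper
) -> float:
    """Reward on the second attempt minus a penalty for first-attempt drift."""
    drift = kl_divergence(first_attempt_policy, first_attempt_base)
    return second_attempt_reward - beta * drift
```

If the first-attempt distribution matches the base model, the penalty is zero and only the correction reward counts; any drift lowers the objective.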

The second stage employs multi-turn RL to optimize reward at both the initial and subsequent attempts, while incorporating a reward bonus that encourages the model to improve its responses from the first to the second attempt.
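The reward bonus can be sketched as a shaping term added to the second attempt's reward. This is a simplified illustration that assumes a binary correctness reward and a hypothetical multiplier `alpha`; neither the reward definition nor the value of `alpha` comes from the article.

```python
def shaped_rewards(
    first_correct: bool,
    second_correct: bool,
    alpha: float = 1.0,  # assumed bonus weight
) -> tuple[float, float]:
    """Return (first_attempt_reward, shaped_second_attempt_reward).

    The bonus is positive when the second attempt fixes a wrong first
    attempt, negative when it breaks a correct one, and zero otherwise.
    """
    r1 = 1.0 if first_correct else 0.0
    r2 = 1.0 if second_correct else 0.0
    bonus = alpha * (r2 - r1)
    return r1, r2 + bonus
```

With `alpha=2.0`, fixing a wrong answer yields a second-attempt reward of 3.0, while breaking a correct answer yields -2.0, so the policy is pushed toward genuine improvement rather than simply repeating its first answer.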

Experiments show that SCoRe significantly improved the self-correction capabilities of Gemini 1.0 Pro and 1.5 Flash models. SCoRe beats both the base models and other self-correction techniques on MATH and HumanEval by a solid margin. It also reduces a common error seen in self-correction methods: changing a correct answer to an incorrect one during the revision process.

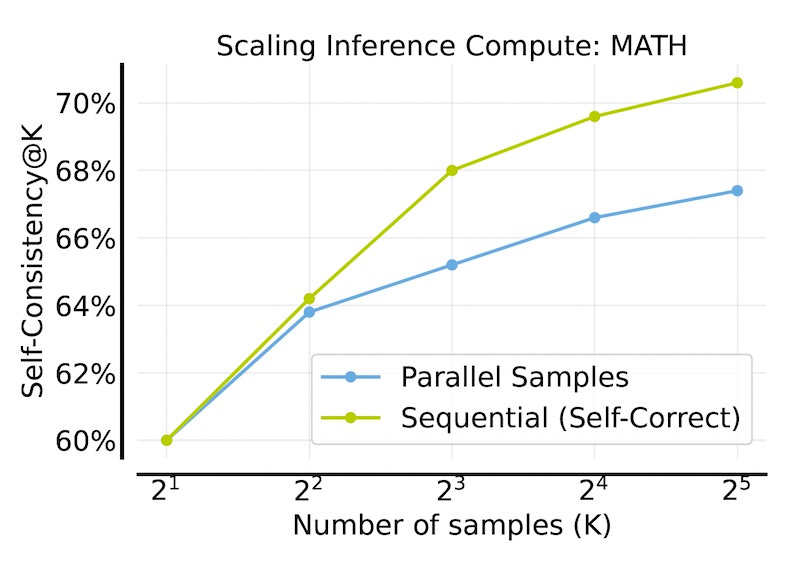
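To see how the "correct answer changed to an incorrect one" error can be tracked, here is a small sketch that summarizes per-problem outcomes across the two attempts. The metric names are chosen for illustration, and the input is a hypothetical list of `(first_correct, second_correct)` pairs, not data from the paper.

```python
def self_correction_metrics(outcomes: list[tuple[bool, bool]]) -> dict[str, float]:
    """Summarize two-attempt results.

    Each item in `outcomes` is (first_attempt_correct, second_attempt_correct).
    """
    n = len(outcomes)
    acc_first = sum(1 for first, _ in outcomes if first) / n
    acc_second = sum(1 for _, second in outcomes if second) / n
    fixed = sum(1 for first, second in outcomes if not first and second) / n
    broken = sum(1 for first, second in outcomes if first and not second) / n
    return {
        "accuracy_first": acc_first,
        "accuracy_second": acc_second,
        "delta": acc_second - acc_first,
        "incorrect_to_correct": fixed,
        "correct_to_incorrect": broken,
    }
```

A method that self-corrects well should show a positive `delta` with a low `correct_to_incorrect` rate.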

SCoRe can also be combined with inference-time scaling strategies such as self-consistency to get better results with a fixed compute budget.
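Self-consistency, in its basic form, samples several answers and keeps the most common one. The sketch below shows only that voting step; how the sampling budget is split between parallel samples and correction rounds is not specified here, and the function name is illustrative.

```python
from collections import Counter


def majority_vote(answers: list[str]) -> str:
    """Return the most frequent final answer among sampled responses.

    Ties are resolved in favor of the answer that appeared first.
    """
    counts = Counter(answers)
    return counts.most_common(1)[0][0]
```

For example, `majority_vote(["42", "41", "42"])` returns `"42"`. In a combined setup, the voted answers could be the second attempts produced after a round of self-correction.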

Read more about SCoRe and my interview with the lead author on VentureBeat

Read the paper on arXiv

Hi Ben, would it be possible to provide a few self-correction samples, maybe in another article? Mostly to see the process you take.