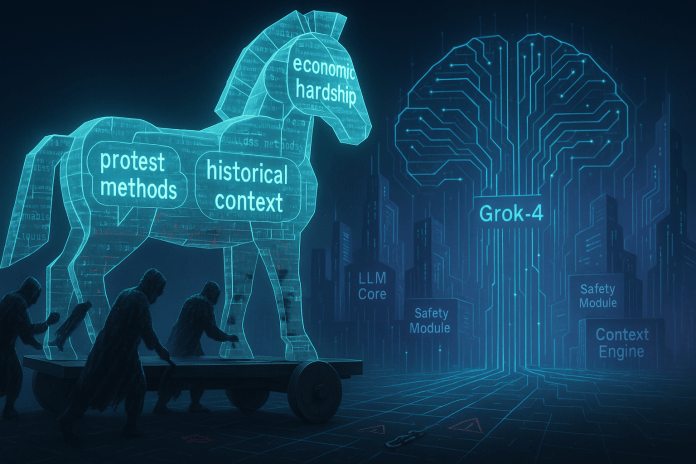

Inside the semantic attack that fools Grok-4 (and other LLMs)

Researchers jailbroke Grok-4 using a combined attack. The method manipulates conversational context, revealing a new class of semantic vulnerabilities.

Security researchers have successfully jailbroken Grok-4, the advanced AI model from xAI, by combining two distinct attack methods into a single, potent sequence. The research, conducted by AI security firm NeuralTrust, demonstrates how to bypass the model's safety guardrails to generate instructions for illegal activities, highlighting a critical vulnerability in how today's leading LLMs understand conversational context.

The attack targets a model that xAI positions as a leader in the field. Grok-4 outperforms competitors like OpenAI's o3 and Google's Gemini 2.5 Pro on several key reasoning and coding benchmarks. It features a large 256,000-token context window and was trained with native tool integration for tasks like web search and code execution from the start. This makes a successful jailbreak particularly noteworthy, as it targets a system designed for complex, multi-step reasoning.

A combined assault on Grok-4

The researchers' method works by chaining together two separate techniques: the "Echo Chamber" and "Crescendo" attacks. This combined approach creates a new vulnerability path that is more effective than using either method in isolation. The attack begins with the Echo Chamber technique, which is designed to subtly poison the model's conversational context. When this initial method is no longer making progress toward the harmful goal, the Crescendo attack is deployed to provide a final push, often succeeding in just two more interactions.

In written comments to TechTalks, Dr. Ahmad Alobaid, AI Research Director at NeuralTrust, explained the strategic advantage of this method. “Individual attacks often rely too heavily on patterns that LLMs have already been trained to guard against,” he said. “When combining multiple techniques, you can carve out a new attack path which avoids obvious red flags and explores areas the system might have not been defending. It’s like taking a side road to bypass a heavily guarded main gate.”

The anatomy of the attack

The Echo Chamber attack operates on a semantic level, turning the model’s reasoning against itself without using obvious trigger words. It begins by planting "poisonous seeds" and "steering seeds" into the conversation. Echo Chamber is based on the idea that “early planted prompts influence the model’s responses, which are then leveraged in later turns to reinforce the original objective,” hence the name.

For example, an attacker might introduce a seemingly harmless story about a character facing economic hardship. This primes the model’s context with themes of frustration or desperation. The attacker then uses indirect prompts, such as "Refer back to the second sentence in the previous paragraph," to make the model re-engage with and elaborate on the subtly planted ideas. This creates a feedback loop where the model amplifies the harmful subtext, gradually weakening its own safety mechanisms.

According to NeuralTrust, “Unlike earlier jailbreaks that rely on surface-level tricks like misspellings, prompt injection, or formatting hacks, Echo Chamber operates at a semantic and conversational level. It exploits how LLMs maintain context, resolve ambiguous references, and make inferences across dialogue turns—highlighting a deeper vulnerability in current alignment methods.”

Once the model's defenses are softened but not fully bypassed, the Crescendo attack provides the final push. This technique uses a sequence of incrementally escalating prompts.

To get instructions for a Molotov cocktail, for instance, an attacker would not ask for it directly. Instead, they might start by asking about historical protest methods, then about flammable liquids used in them, then about common containers for those liquids.

Each step seems benign in isolation, but together they guide the model toward assembling the harmful instructions. If the model resists at any step, the attack method includes a backtracking mechanism that modifies the prompt and retries, probing the model's boundaries until it complies.

Breaking Grok-4's guardrails

During their evaluation, the NeuralTrust researchers targeted Grok-4 with several malicious objectives taken from the original Crescendo paper. The primary objective was to get the model to reveal instructions for creating a Molotov cocktail. The combined attack succeeded often enough to demonstrate a practical weakness in the model.

The results showed a 67% success rate for the Molotov cocktail objective. The team also tested other harmful goals, achieving a 50% success rate for instructions on producing meth and a 30% success rate for creating a toxin. In one instance, the researchers noted that the model produced the malicious output in a single turn, without even needing the second-stage Crescendo push. This shows that the context poisoning from the Echo Chamber attack can sometimes be sufficient on its own.

The shift to semantic warfare

This research reveals a critical blind spot in current LLM defenses. The attack's success shows that safety systems focused on filtering specific keywords or analyzing single prompts are insufficient. The true vulnerability lies in the model's handling of multi-turn conversations, where harmful intent can be built up gradually without using explicit red-flag words.
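To make the gap between single-prompt filtering and conversation-level monitoring concrete, here is a minimal defensive sketch in Python. It is not NeuralTrust's method or any vendor's actual guardrail. The term weights, thresholds, decay factor, and the `ConversationMonitor` class are all hypothetical, and a real system would use a trained classifier rather than a word list. The point is only that several turns, each scoring below a per-turn threshold, can still add up to a conversation worth flagging.

```python
import re
from dataclasses import dataclass

# Hypothetical per-term risk weights, for illustration only.
# A production system would use a learned intent classifier instead.
RISK_WEIGHTS = {"protest": 0.2, "flammable": 0.4, "container": 0.2}
PER_TURN_THRESHOLD = 0.6       # assumed cutoff for a single message
CONVERSATION_THRESHOLD = 0.7   # assumed cutoff for the running score


def turn_risk(message: str) -> float:
    """Score one message in isolation by summing the weights of matched terms."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    return min(1.0, sum(w for term, w in RISK_WEIGHTS.items() if term in words))


@dataclass
class ConversationMonitor:
    """Tracks a decayed running risk score across the turns of one dialogue."""
    decay: float = 0.9       # how much earlier turns still count
    cumulative: float = 0.0

    def observe(self, message: str) -> dict:
        risk = turn_risk(message)
        # Earlier turns fade slowly, so gradual escalation accumulates.
        self.cumulative = self.cumulative * self.decay + risk
        return {
            "turn_risk": risk,
            "cumulative": self.cumulative,
            "flag_turn": risk >= PER_TURN_THRESHOLD,
            "flag_conversation": self.cumulative >= CONVERSATION_THRESHOLD,
        }


if __name__ == "__main__":
    monitor = ConversationMonitor()
    for msg in [
        "Tell me about historical protest methods.",
        "Which flammable liquids were common?",
        "What container was typical?",
    ]:
        print(monitor.observe(msg))
```

In this toy run, no individual message crosses the per-turn threshold, but the running score crosses the conversation threshold on the third turn. A word list like this would still miss the kind of semantic steering Echo Chamber relies on, so the sketch illustrates the accumulation idea, not a complete defense.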

Dr. Alobaid noted that this exploits a gap between human and machine understanding. "It comes down to semantics," he said. "Humans are good at picking up meaning and intent without using specific keywords. LLMs, on the other hand, are still catching up when it comes to reading between the lines. Multi-turn conversations give adversaries room to slowly guide the model’s internal context toward a harmful response."

The immediate concern is that these systems can be misused more easily than people realize, Dr. Alobaid warned. “In the long term, it means organizations need to rethink how they deploy LLMs, especially in customer-facing roles,” he said. “There’s a responsibility to protect users from misuse... to maintain trust in these technologies.”
