Membership inference attacks

One of the wonders of machine learning is that it turns any kind of data into mathematical equations. Once you have trained a machine learning model, you can discard the training data and publish the model on GitHub or run it on your own servers without worrying about storing or distributing the sensitive information contained in the training dataset.

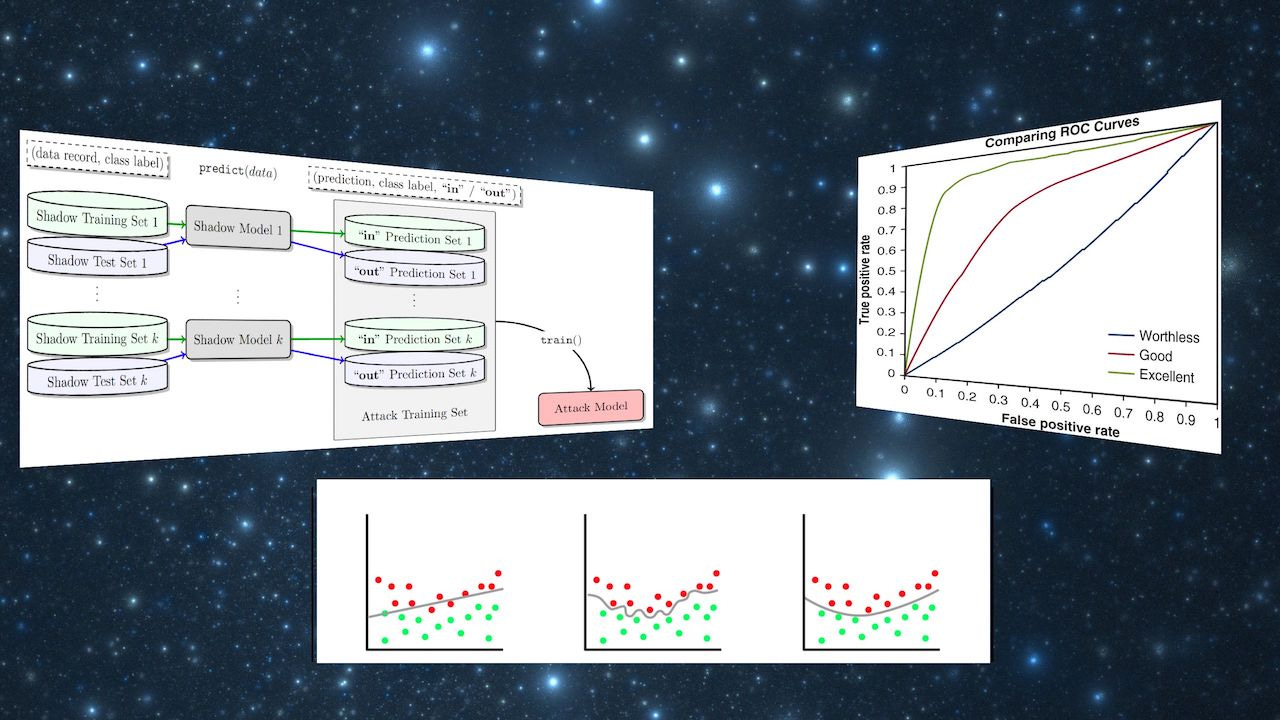

But a type of attack called “membership inference” makes it possible to determine whether a specific record was part of the data used to train a machine learning model. In many cases, attackers can stage membership inference attacks without access to the model’s parameters, just by observing its output. Membership inference raises security and privacy concerns when the target model has been trained on sensitive information.
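To make the black-box setting concrete, here is a minimal sketch of one simple variant, a confidence-threshold attack. The attacker only queries the model's output probabilities and guesses "member" when the model is unusually confident about a record's true label. The toy memorizing model, the data points, and the 0.9 threshold are hypothetical illustrations, not the method described in the column. More elaborate attacks, such as those that train "shadow models," calibrate the decision rule rather than hard-coding it.

```python
import math
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Example = Tuple[Point, int]

# Hypothetical toy data: TRAIN plays the role of the private training set,
# HELD_OUT contains records the model never saw.
TRAIN: List[Example] = [((0.0, 0.0), 0), ((0.2, 0.1), 0),
                        ((1.0, 1.0), 1), ((0.9, 1.2), 1)]
HELD_OUT: List[Example] = [((0.5, 0.5), 0), ((0.6, 0.7), 1)]


def train_memorizing_model(data: Sequence[Example]) -> Callable[[Point], List[float]]:
    """Return a toy (hypothetical) classifier that overfits by memorizing its data.

    Each class score is exp(-5 * distance to the nearest training point of
    that class), normalized to sum to 1. Training points sit at distance 0,
    so the model is very confident about them.
    """
    classes = sorted({label for _, label in data})

    def predict_proba(x: Point) -> List[float]:
        scores = [
            math.exp(-5.0 * min(math.dist(x, p) for p, label in data if label == c))
            for c in classes
        ]
        total = sum(scores)
        return [s / total for s in scores]

    return predict_proba


def infer_membership(predict_proba: Callable[[Point], List[float]],
                     x: Point, y: int, threshold: float = 0.9) -> bool:
    """Black-box guess: 'member' if confidence in the true label y reaches threshold.

    Only the model's output probabilities are used, never its parameters.
    Assumes labels are 0..n-1 so y indexes the probability vector directly.
    """
    return predict_proba(x)[y] >= threshold


if __name__ == "__main__":
    model = train_memorizing_model(TRAIN)
    for x, y in TRAIN + HELD_OUT:
        conf = model(x)[y]
        print(f"{x} label={y} confidence={conf:.3f} "
              f"member={infer_membership(model, x, y)}")
```

Running it flags the four training records as members and the two held-out records as non-members. The fixed threshold is the weak point: a record the model never saw but that lies close to a training point, such as (0.3, 0.4) with label 0, also receives high confidence and would be wrongly flagged as a member.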

In my latest column on TechTalks, I discuss how membership inference attacks work and how you can protect yourself against them.
