Meta has released Llama 4—here's what you need to know

Meta releases Llama 4, a potent suite of LLMs challenging rivals with innovative multimodal capabilities. Are they the future or just hype?

Meta has just released Llama 4, its most powerful family of open-weight large language models (LLMs). Llama 4 matches many state-of-the-art LLMs in key benchmarks and is the largest series of models Meta has released yet.

Llama 4 comes as AI labs are speeding up their release cycles (partially due to the panic caused by DeepSeek-R1), with Grok 3, Claude 3.7 Sonnet, GPT-4.5, and Gemini 2.5 Pro being released within a short period. Here’s what to know about Llama 4.

What is Llama 4?

Llama 4 is a family of LLMs, which Meta refers to as a “herd of models.” It comes in three sizes: Behemoth (2 trillion parameters), Maverick (400 billion parameters), and Scout (109 billion parameters). Meta has released Maverick and Scout but not Behemoth.

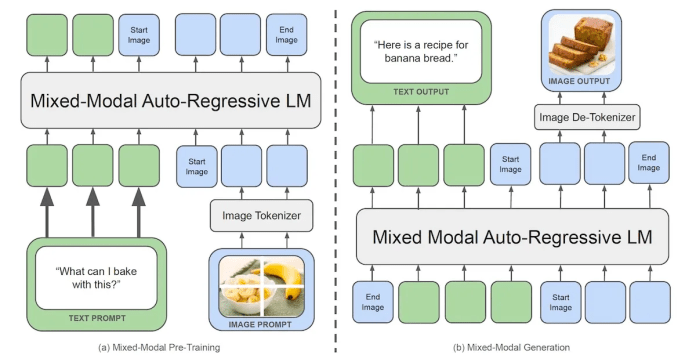

The models are multimodal, which means they support text, images, and videos as input. This is the first Llama model that is natively multimodal, a concept that Meta had alluded to in its Chameleon paper. Previous versions used different components to process text and images and to integrate them during inference. This version uses “early fusion,” which means that the model immediately combines the information from both modalities into a single, unified representation.

“Early fusion is a major step forward, since it enables us to jointly pre-train the model with large amounts of unlabeled text, image, and video data,” according to Meta. Moreover, this architecture allows the model to seamlessly reason over interleaved image and text sequences, enabling it to learn richer representations that take into account how text and images are placed next to each other.
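To make the idea of a single, unified representation more concrete, here is a toy sketch of how interleaved text and image segments could end up in one sequence. It is not Meta's implementation: the embedding table, the patch projection, the dimensions, and the function names (`embed_text`, `embed_image`, `fuse`) are all assumptions made up for illustration.

```python
import random

EMBED_DIM = 8   # toy width; real models use thousands of dimensions
PATCH_DIM = 12  # toy size of one flattened image patch
VOCAB_SIZE = 100

def embed_text(token_ids, table):
    """Look up one embedding vector per text token."""
    return [table[t] for t in token_ids]

def embed_image(patches, projection):
    """Project each flattened image patch into the text embedding space."""
    return [
        [sum(p * w for p, w in zip(patch, column)) for column in projection]
        for patch in patches
    ]

def fuse(segments, table, projection):
    """Build one interleaved sequence from ("text", ids) and ("image", patches) segments."""
    sequence = []
    for kind, payload in segments:
        if kind == "text":
            sequence.extend(embed_text(payload, table))
        elif kind == "image":
            sequence.extend(embed_image(payload, projection))
        else:
            raise ValueError(f"unknown segment kind: {kind}")
    return sequence

rng = random.Random(0)
# Hypothetical, randomly initialised parameters (a trained model would learn these).
table = [[rng.gauss(0, 1) for _ in range(EMBED_DIM)] for _ in range(VOCAB_SIZE)]
projection = [[rng.gauss(0, 1) for _ in range(PATCH_DIM)] for _ in range(EMBED_DIM)]

segments = [
    ("text", [5, 17, 42]),
    ("image", [[rng.random() for _ in range(PATCH_DIM)] for _ in range(4)]),
    ("text", [7, 9]),
]
fused = fuse(segments, table, projection)
print(len(fused), len(fused[0]))
```

The point of the sketch is that text tokens and image patches become rows of the same sequence before any transformer layer sees them, so a single backbone can attend across both.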

Llama 4 mixture-of-experts architecture

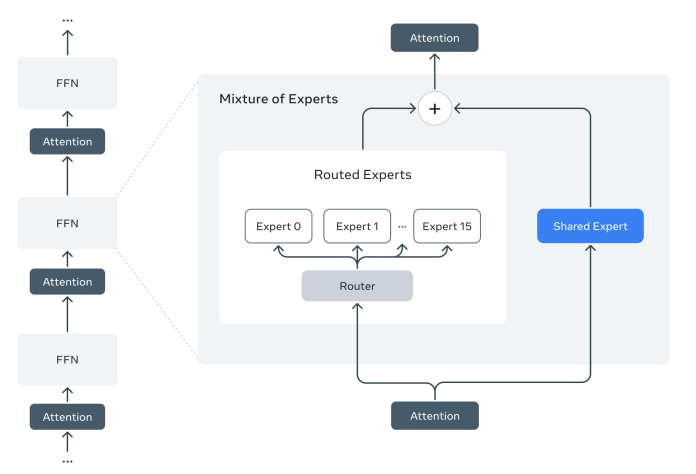

Llama 4 models are based on the mixture-of-experts (MoE) architecture, a technique for scaling models efficiently. Instead of one massive neural network that uses all of its parameters for every input, MoE models divide their parameters into many smaller “expert” networks that specialize in a range of skills. A “router” sends each token only to the few experts that are most relevant to it, so only a fraction of the model's parameters are used per token. This makes inference less compute-intensive. This is the first version of Llama that is based on the MoE architecture.

Llama 4 uses the concept of “shared expert,” also used in DeepSeek-V3 and R1. This means that one expert is always active and handles basic knowledge that is relevant to all tasks. The rest of the experts are selected based on the context and current input token.
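The routing logic can be sketched in a few lines of plain Python. This is a simplified illustration, not Llama 4's actual layer: each expert here is a single linear map (real experts are feed-forward blocks), the weights are random, and the choice of `top_k=1` and of weighting the routed output by its gate probability are assumptions.

```python
import math
import random

def softmax(scores):
    """Turn raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def linear(weights, x):
    """Multiply a (rows x len(x)) matrix by the vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def moe_layer(x, router, shared_expert, routed_experts, top_k=1):
    """Return the layer output and the indices of the routed experts that ran."""
    gate = softmax(linear(router, x))  # one probability per routed expert
    chosen = sorted(range(len(gate)), key=gate.__getitem__, reverse=True)[:top_k]
    out = linear(shared_expert, x)     # the shared expert runs for every token
    for i in chosen:                   # only the selected experts do any work
        expert_out = linear(routed_experts[i], x)
        out = [o + gate[i] * e for o, e in zip(out, expert_out)]
    return out, chosen

DIM, NUM_EXPERTS = 4, 16
rng = random.Random(0)

def rand_matrix(rows, cols):
    return [[rng.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]

router = rand_matrix(NUM_EXPERTS, DIM)
shared_expert = rand_matrix(DIM, DIM)
routed_experts = [rand_matrix(DIM, DIM) for _ in range(NUM_EXPERTS)]

token = [rng.gauss(0, 1) for _ in range(DIM)]
output, used = moe_layer(token, router, shared_expert, routed_experts)
print(f"routed expert used: {used}, output width: {len(output)}")
```

Out of 16 routed experts, only one is evaluated for this token, plus the shared expert. That is where the compute savings come from: the unused experts still occupy memory but cost nothing at inference time for that token.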

The number of experts in each of the models is as follows:

Llama 4 Behemoth: 2T parameters, 16 experts, 288B active parameters

Llama 4 Maverick: 400B parameters, 128 experts, 17B active parameters

Llama 4 Scout: 109B parameters, 16 experts, 17B active parameters
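A quick back-of-the-envelope calculation shows how small the active share is for each model. The parameter counts come from the list above; the dictionary layout and the `active_fraction` helper are just for illustration.

```python
# Parameter counts in billions, as reported by Meta.
MODELS = {
    "Behemoth": {"total_b": 2000, "active_b": 288, "experts": 16},
    "Maverick": {"total_b": 400, "active_b": 17, "experts": 128},
    "Scout": {"total_b": 109, "active_b": 17, "experts": 16},
}

def active_fraction(total_b, active_b):
    """Share of the model's parameters that are used for a single token."""
    return active_b / total_b

for name, spec in MODELS.items():
    share = active_fraction(spec["total_b"], spec["active_b"])
    print(f"{name}: {share:.1%} of parameters active per token")
```

Maverick and Scout activate the same number of parameters per token, but Maverick spreads its much larger total across 128 experts, so its active share is far smaller.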

According to Meta, Scout fits on a single H100 GPU with Int4 quantization, while Maverick fits on a single H100 host such as the Nvidia H100 DGX server. Meta also used Llama 4 Behemoth as a “teacher” model, which means its outputs were used to train the smaller models (a technique called “model distillation”).
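The single-GPU claim is easy to sanity-check with rough arithmetic. The sketch below counts only the memory needed to store the weights and ignores the KV cache, activations, and framework overhead, so real requirements are higher. The 80 GB figure assumes the 80 GB H100 variant, and the eight-GPU host size is an assumption based on the DGX H100 configuration.

```python
def weight_memory_gb(params_billions, bits_per_param):
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_param / 8

H100_GB = 80              # assumed: 80 GB H100 variant
HOST_GB = 8 * H100_GB     # assumed: eight GPUs per host, as in a DGX H100

for name, params_b, budget in [("Scout", 109, H100_GB), ("Maverick", 400, HOST_GB)]:
    for bits in (16, 8, 4):
        need = weight_memory_gb(params_b, bits)
        verdict = "fits" if need <= budget else "does not fit"
        print(f"{name} at {bits}-bit: {need:.1f} GB of weights, {verdict} in {budget} GB")
```

At 4 bits per parameter, Scout's 109 billion parameters take about 54.5 GB, which leaves some headroom on an 80 GB card. At 16 bits, the same weights would need well over two such GPUs.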

One interesting detail in Meta’s blog is this: “We developed a new training technique which we refer to as MetaP that allows us to reliably set critical model hyper-parameters such as per-layer learning rates and initialization scales. We found that chosen hyper-parameters transfer well across different values of batch size, model width, depth, and training tokens.”

Basically, this means that they figured out a way to run experiments on small models and transfer the same hyperparameters to the larger models. This can help significantly increase the speed and reduce the costs of training the larger models.

How does Llama 4 compare to other models?

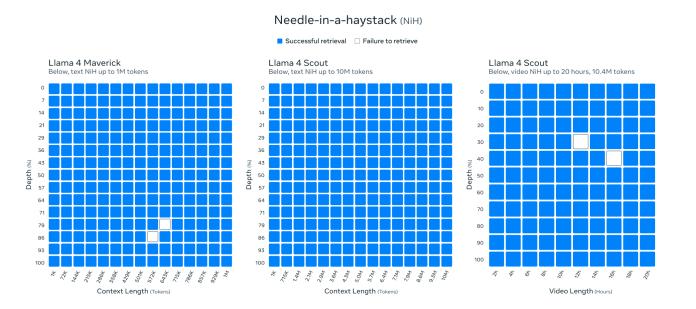

One of the key advantages of Llama 4 is its context window. Scout has a 10-million-token context window, the longest of any open or closed model so far, and Maverick supports up to one million tokens, which matches the context window of Google’s Gemini models. (The context window of Behemoth has not been specified.)

According to Meta, Scout passes the “needle in a haystack” test on videos of up to 20 hours (needle-in-a-haystack tests a model’s capability to extract a specific piece of data from its input).
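For readers who want to run this kind of test themselves, here is a minimal text-only sketch of a needle-in-a-haystack harness. Meta's reported result is on video, so this is an analogy rather than a reproduction. The helper names, the filler sentences, and the `stub_model` function are all hypothetical; `stub_model` stands in for a real model call and would be replaced by whatever API you use.

```python
import random

def build_haystack(needle, filler, n_sentences, depth, seed=0):
    """Place the needle at a relative depth (0.0 = start, 1.0 = end) among filler sentences."""
    rng = random.Random(seed)
    sentences = [rng.choice(filler) for _ in range(n_sentences)]
    sentences.insert(int(depth * n_sentences), needle)
    return " ".join(sentences)

def run_trial(ask_model, needle, answer, question, filler, n_sentences, depth):
    """Return True if the model's reply contains the expected answer."""
    context = build_haystack(needle, filler, n_sentences, depth)
    prompt = f"{context}\n\nQuestion: {question}\nAnswer:"
    return answer.lower() in ask_model(prompt).lower()

def stub_model(prompt):
    """Stand-in for a real LLM call: returns the context sentence mentioning 'passcode'."""
    context = prompt.split("\n\nQuestion:")[0]
    for sentence in context.split(". "):
        if "passcode" in sentence:
            return sentence
    return "I don't know"

FILLER = [
    "The weather was mild that afternoon.",
    "Traffic moved slowly through the old town.",
    "A new bakery opened near the station.",
]
NEEDLE = "The secret passcode is 7421."
QUESTION = "What is the secret passcode?"

results = {
    depth: run_trial(stub_model, NEEDLE, "7421", QUESTION, FILLER, 200, depth)
    for depth in (0.0, 0.25, 0.5, 0.75, 1.0)
}
print(results)
```

In a real evaluation you would sweep both the context length and the needle depth, since models often retrieve well from the start and end of a long input but struggle in the middle.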

According to the figures shared by Meta, the Llama 4 models match or surpass their open and closed counterparts on key benchmarks. For example, Llama 4 Scout outperforms Gemma 3, Mistral 3.1, and Gemini 2.0 Flash-Lite on coding, reasoning, long context, and image benchmarks. (One thing to note: Gemma 3 and Mistral 3.1 are smaller than Llama 4 Scout overall, but because they are dense models that use all of their parameters for every token, they have more active parameters.)

Llama 4 Maverick is comparable or superior to Gemini 2.0 Flash, DeepSeek V3.1, and GPT-4o. And Llama 4 Behemoth is comparable to Gemini 2.0 Pro, Claude 3.7 Sonnet, and GPT-4.5.

It is worth noting that Meta did not include comparisons to leading reasoning models such as OpenAI o1 and o3 or Google Gemini 2.5 Pro. It also left out benchmarks where other models such as DeepSeek V3.1 outperform Llama 4, as some users have pointed out on social media.

However, in my experience, benchmarks only give you a general idea of where a model stands in the respective category of tasks. You need to run experiments on tasks that interest you to find out how useful a model is. (I will share my experiments after I get access to the model.)

How to access Llama 4

Meta has released the weights for Llama 4 Maverick and Scout on Hugging Face and llama.com, though you need to fill out a request form to get access to them. One of the main problems with the Llama 4 family is that even the smallest model can only run on large AI accelerators. Unlike the previous versions of Llama, there is no longer a variant that can run on your MacBook or consumer-grade GPU. This makes it hard to tell where exactly the Llama 4 family fits.

However, since it is open and released on Hugging Face, we can expect to see smaller, distilled versions of the models emerge soon.

In the meantime, if you don’t have your own GPU cluster (which most people don’t) and don’t want to pay the heavy price of renting one online, you can access hosted versions of Llama 4 on cloud platforms such as Groq, Amazon Web Services (SageMaker and Bedrock), Microsoft Azure, Google Cloud, and Databricks.