Meta is making an aggressive entrance in humanoid robotics

A tactile sensing model, a fingertip sensor, and a robotic hand platform, all released on the same day.

Meta has made three big announcements for robotics at a time when the AI race is expanding to new fields, including humanoid robots.

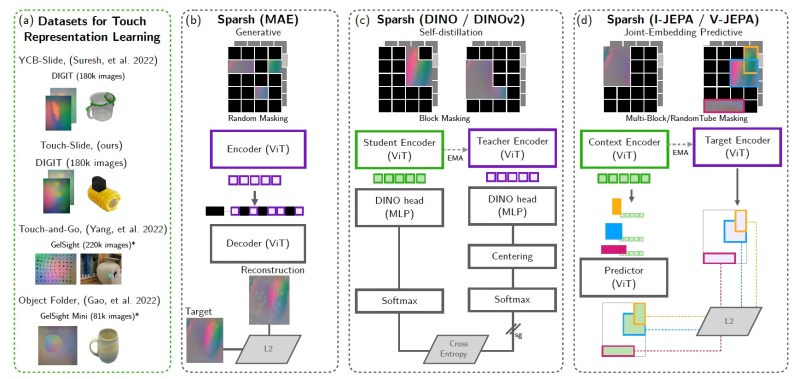

First is Sparsh, a family of encoder models for vision-based tactile sensing. These models help robots infer properties of what they are touching, such as the material of an object or how much pressure they can apply to it without causing damage.

What sets Sparsh apart is its ability to generalize across tasks and sensors. The classic approach to incorporating vision-based tactile sensors into robot tasks is to use labeled data to train a custom model for each sensor and task. In contrast, Sparsh uses self-supervised learning to train a single model that works across different sensors and tasks, without the need for manual labels.

Sparsh relies on a general idea common in self-supervised learning: hide parts of the input, have the model predict the missing parts, and compare its prediction with the ground truth. The researchers created several variants of Sparsh based on different self-supervised learning techniques. According to Meta, all of them outperform task- and sensor-specific models by a wide margin.
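To make the "hide, predict, compare" idea concrete, here is a toy sketch of a masked-reconstruction objective in Python. It is not Meta's code. The 16 scalar "patches" standing in for a tactile image, the 75% mask ratio, and the mean-fill predictor standing in for a learned encoder-decoder are all illustrative assumptions. What it shows is the training signal itself: the loss is computed only on the hidden patches against the original values, so no human labels are needed.

```python
import random


def mask_patches(patches, mask_ratio, rng):
    """Hide a random subset of patches; hidden positions become None."""
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1) so some patches stay visible")
    n_masked = int(len(patches) * mask_ratio)
    masked = set(rng.sample(range(len(patches)), n_masked))
    visible = [None if i in masked else p for i, p in enumerate(patches)]
    return visible, masked


def toy_reconstruct(visible):
    """Hypothetical stand-in for a learned model: fill hidden patches with the visible mean."""
    seen = [p for p in visible if p is not None]
    fill = sum(seen) / len(seen)
    return [fill if p is None else p for p in visible]


def masked_reconstruction_loss(patches, reconstruction, masked):
    """Mean squared error over the hidden patches only, compared with the ground truth."""
    if not masked:
        return 0.0
    errors = [(reconstruction[i] - patches[i]) ** 2 for i in masked]
    return sum(errors) / len(errors)


rng = random.Random(0)
# Hypothetical "tactile image" flattened into 16 scalar patch intensities.
patches = [rng.random() for _ in range(16)]
visible, masked = mask_patches(patches, mask_ratio=0.75, rng=rng)
recon = toy_reconstruct(visible)
loss = masked_reconstruction_loss(patches, recon, masked)
print(f"masked {len(masked)} of {len(patches)} patches, loss = {loss:.4f}")
```

In a real system the mean-fill predictor would be replaced by a neural network trained to drive this loss down, which forces it to learn useful representations of touch from unlabeled sensor data.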

The second announcement is Digit 360, a finger-shaped tactile sensor with more than 18 sensing features and over 8 million taxels (tactile pixels). Digit 360 captures multiple sensing modalities and runs AI models on the device itself, so it can make real-time inferences about the sensor's state without sending data to the cloud.

While there will be plenty of uses for advanced tactile sensors in new robotics applications, the researchers also suggest that the data and insights gathered from the sensors could help create more immersive virtual experiences in the future.

Meta is also releasing Digit Plexus, a hardware-software platform for robotic applications that can integrate various fingertip and skin tactile sensors onto a single robot hand.

Meta is releasing the code and designs for Digit 360 and Digit Plexus and is working with industry partners to manufacture the sensor and the robotic hands.

In addition, Meta is releasing PARTNR, a benchmark for evaluating how well AI models and humans collaborate on household tasks. PARTNR includes 100,000 natural language tasks across 60 houses, involving more than 5,800 unique objects.

There are growing signs that large language models (LLMs) and vision-language models (VLMs) can improve robotic applications by acting as reasoning and planning modules for complex, long-horizon tasks. With better sensors, models, and benchmarks, it will be interesting to see how far these systems can go.