Mistral releases new models in crowded SLM market

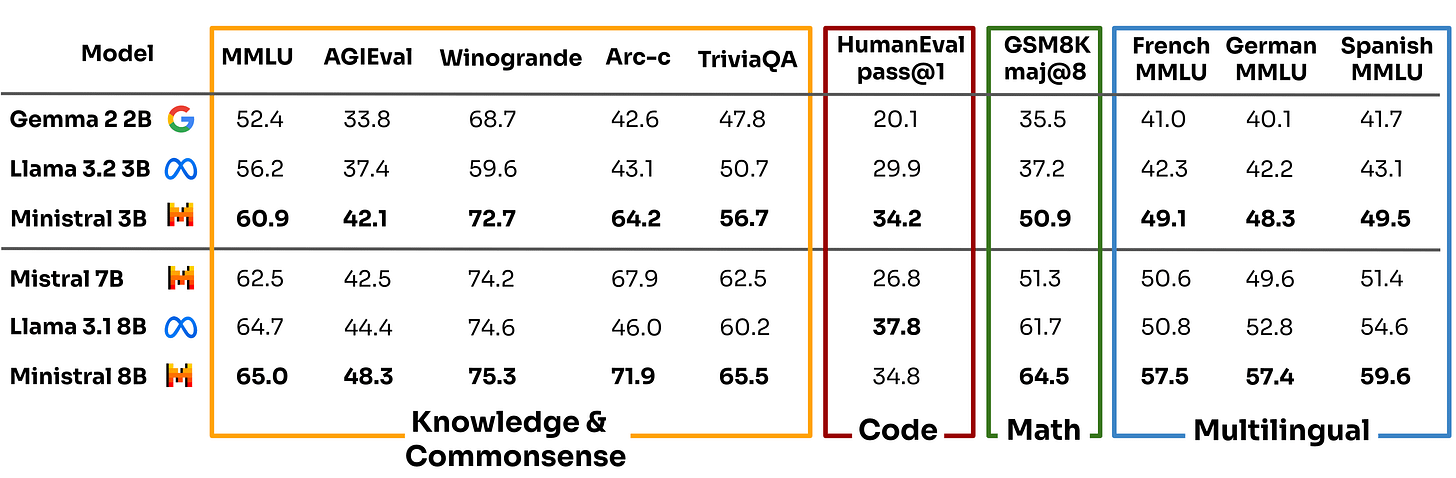

The new Ministral models outperform other small language models, including Gemma 2, Phi 3.5, and Llama 3.2.

Paris-based AI company Mistral has released a new family of small language models (SLMs) called Ministraux. The models, released on the anniversary of the company’s first SLM, Mistral 7B, come in two sizes: Ministral 3B and Ministral 8B.

The release comes as the market is already flooded with SLMs. Microsoft released its Phi 3.5 models in August, Google updates its Gemma 2 models regularly, and Meta released Llama 3.2 in September. All of these model families include small versions that fit on edge devices.

According to Mistral, the new Ministral models outperform other SLMs of similar size on major benchmarks in several areas, including reasoning (MMLU and ARC-c), coding (HumanEval), and multilingual tasks.

Ministral has a 128,000-token context window, which means you can use it for long-context tasks, such as many-shot learning. The model comes in pre-trained and instruction fine-tuned versions. According to the company, the models are suitable for “on-device translation, internet-less smart assistants, local analytics, and autonomous robotics.” I’m particularly interested in seeing how it will fare in robotics tasks as researchers are increasingly interested in using LLMs as reasoning layers on top of robotics models.

The downside of the models is their licensing. Mistral has released the weights for Ministral 8B Instruct under a research license. The weights for the other models have not been released yet, and the company’s special license restricts commercial use.

The licensing highlights one of the key challenges of the field. AI labs spend millions of dollars to train their models. Releasing them under a permissive license such as Apache 2.0 would be like throwing away money. A company like Meta can afford those kinds of expenses, knowing that it will recoup the costs down the road as it integrates the models into its products. But for Mistral, which has yet to find a path to profitability, releasing models openly is trickier.

What makes it even more challenging is that the field is moving very fast. Models released today will quickly become outdated, and the company will have to spend millions of dollars training the next generation of models, as shown in this graphic shared by Mistral with the release of the new models.

Nonetheless, it is nice to see Mistral holding its own against tech giants.
