MIT introduces new RL technique that moves beyond binary rewards

AI models are often overconfident. A new MIT training method teaches them self-doubt, improving reliability and making them more trustworthy.

In a new paper, researchers at MIT introduce Reinforcement Learning with Calibration Rewards (RLCR), a training technique that addresses one of the key challenges of reasoning models: knowing how confident they are in their own answers.

This method helps models become more accurate when facing new types of problems and provides users with a more reliable signal to judge the validity of their outputs. By training models to reason about their own uncertainty, RLCR can lead to more trustworthy and robust AI systems.

The problem with binary rewards

Many of today's most powerful language models are trained using reinforcement learning (RL) to generate "chains of thought" (CoT) before providing a final answer. The standard approach, known as reinforcement learning with verifiable rewards (RLVR), uses a simple binary reward: the model gets a positive reward for a correct answer and a penalty for an incorrect one. This method is effective at improving accuracy on specific tasks.

However, this all-or-nothing approach has a critical limitation. It rewards a model equally whether it was confidently correct or just made a lucky guess. Likewise, it penalizes a model the same for a wild hallucination as for a near-miss. This setup encourages models to become overconfident, as there is no incentive to express doubt. In real-world applications, especially in high-stakes fields like medicine or finance, we need models that can provide not just an answer, but also a reliable measure of their confidence in that answer.

Studies show that even if they start out well-calibrated, language models tend to become more overconfident after this type of RL training. As the paper's authors note, “Reasoning models, in particular, tend to exhibit worsened calibration and increased hallucination rates compared to base models, particularly when trained with reward signals that emphasize only correctness.”

How RLCR works

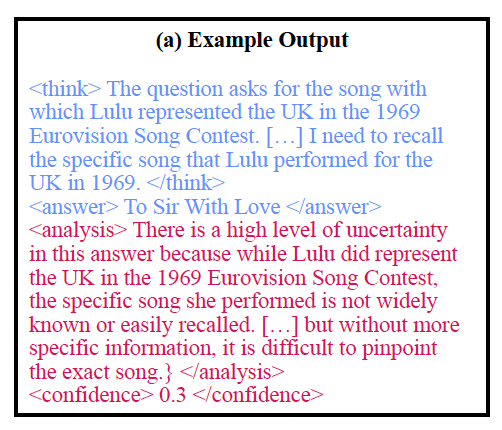

The main idea behind Reinforcement Learning with Calibration Rewards (RLCR) is to train models with a reward that incentivizes both correctness and good calibration. The approach encourages models to reason about their own uncertainty. During training, the model is prompted to produce its reasoning steps, a final answer, and a numerical confidence score. It is then trained to optimize a reward function that evaluates both the accuracy of its answer and the reliability of its confidence estimate.

To achieve this, the researchers augment the standard correctness reward with a Brier score. The Brier score is a method for evaluating the quality of a probability forecast. It penalizes a model when its stated confidence doesn't match the actual outcome. For example, a model is penalized heavily if it gives an incorrect answer with high confidence, but it is also penalized, albeit less, for giving a correct answer with low confidence. This dual incentive pushes the model to produce answers that are not only correct but are also accompanied by an honest assessment of their likelihood of being correct. The authors proved that this combined reward function is maximized when a model outputs the most likely answer along with a perfectly calibrated confidence score.

As the researchers state, “Our approach trains models to reason about and verbalize uncertainty, preserving task performance while significantly improving calibration in- and out-of-distribution.”

RLCR in action

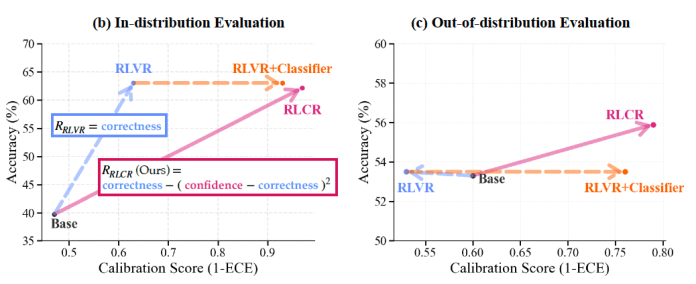

The researchers evaluated how RLCR changes model accuracy and calibration on both in-domain tasks (the same kind used for training) and out-of-domain tasks (new types of problems). They compared RLCR against several baselines, including a base pre-trained model, the standard RLVR method, and RLVR combined with a separate classifier trained just to predict confidence.

On in-domain factual and math reasoning tasks, RLCR matched the accuracy of standard RLVR but substantially improved calibration, drastically reducing calibration error. The more significant finding came from out-of-domain tests. While standard RLVR made models less calibrated on new tasks compared to the base model, RLCR substantially improved calibration, outperforming all other approaches. This shows that learning to reason about uncertainty makes models more reliable when they encounter unfamiliar problems.

RLCR also provides practical advantages for real-world applications. The verbalized confidence scores can be used at inference time to improve results; for instance, when generating multiple answers, a confidence-weighted majority vote is more accurate than a simple vote. The method also improves the self-consistency of the model's confidence estimates. For a given answer, repeated analyses yield similar confidence scores, which is crucial for building trust in the model's outputs. These benefits point toward more reliable and interpretable AI that can make better use of its computational resources.

As the authors conclude, "Together, these results show that existing reasoning training methods can be straightforwardly modified to additionally optimize for calibration, and that this improves in turn improves their accuracy, robustness, and scalability."

I wonder if this might have the side effect of reducing confabulations, if the need to provide any response at all is modulated by a low confidence in some random baloney?