Models that imitate ChatGPT have serious limits

Large language models (LLMs) like ChatGPT, Bard, and Claude are hard to replicate because they are black boxes: we have little information about their architecture, weights, training data, and other details.

To work around this, researchers are using “model imitation,” where the outputs of a proprietary model become the training data for another model. Small open-source LLMs can perform well when trained this way, but the approach has limits. UC Berkeley researchers found that imitation models can learn ChatGPT's style but not its knowledge, which raises questions about the trustworthiness of imitation learning.

Key findings:

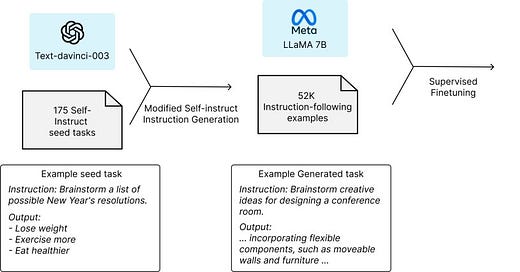

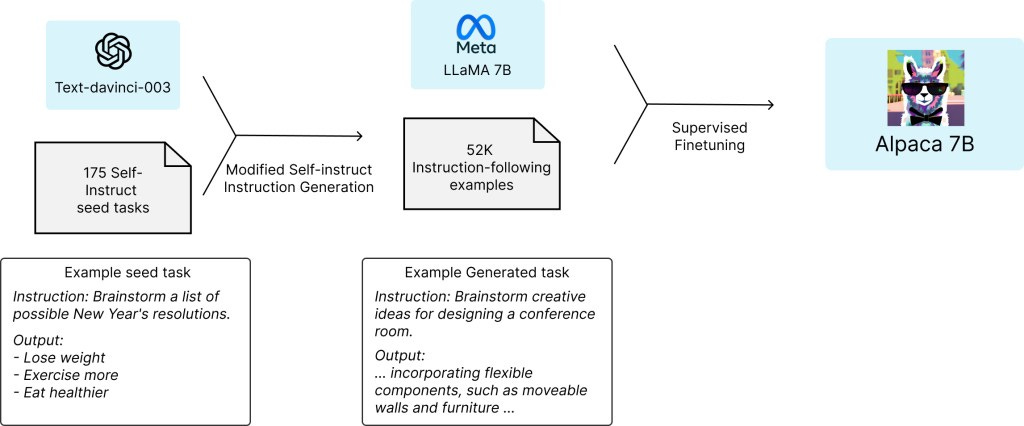

You can use the ChatGPT API to generate training data for your own model.

One method to gather data is “self-instruct,” where you start with a seed set of manually crafted prompt-response pairs and use ChatGPT to generate similar examples.

Another method is to use open-source datasets and websites like ShareGPT, where users upload their prompts and the responses they received.

Model imitation has produced strong results in LLMs like Alpaca and Vicuna: evaluators sometimes prefer the answers of these smaller models to those of ChatGPT and Bard.

However, the research from UC Berkeley shows that these models only learn the style of ChatGPT, not its knowledge.

Model imitation does not improve the imitation model’s performance on specific task benchmarks.

This shows that model imitation is not a replacement for techniques such as improving the base model through scale or better data curation.
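To make the “self-instruct” idea above more concrete, here is a minimal Python sketch of the loop: sample a few existing pairs, ask a model for one more in the same format, and keep it if it is new. It is an illustration under assumptions, not the method used by Alpaca or the Berkeley researchers. The names `build_prompt`, `parse_example`, and `self_instruct` are hypothetical, the prompt format is made up, and `generate` is a placeholder for whatever function sends a prompt to a model such as ChatGPT and returns its text. Real pipelines also filter near-duplicates by similarity; this sketch only drops exact repeats.

```python
import random
from typing import Callable, Dict, List, Optional

Example = Dict[str, str]  # {"instruction": ..., "response": ...}


def build_prompt(examples: List[Example], k: int, rng: random.Random) -> str:
    """Show up to k existing pairs and ask for one new pair in the same format."""
    shots = rng.sample(examples, k=min(k, len(examples)))
    lines = ["Here are some example tasks. Write one new task in the same format."]
    for ex in shots:
        lines.append(f"Instruction: {ex['instruction']}")
        lines.append(f"Response: {ex['response']}")
    lines.append("Instruction:")  # the model is expected to continue from here
    return "\n".join(lines)


def parse_example(completion: str) -> Optional[Example]:
    """Split '<instruction>\\nResponse: <response>' into a pair, or return None."""
    instruction, sep, response = completion.partition("\nResponse:")
    instruction, response = instruction.strip(), response.strip()
    if not sep or not instruction or not response:
        return None
    return {"instruction": instruction, "response": response}


def self_instruct(
    seed_examples: List[Example],
    generate: Callable[[str], str],  # assumption: wraps a call to the teacher model
    rounds: int,
    k: int = 3,
    seed: int = 0,
) -> List[Example]:
    """Grow a pool of pairs from the seeds; return only the newly generated ones."""
    rng = random.Random(seed)
    pool = list(seed_examples)
    seen = {ex["instruction"].lower() for ex in pool}
    for _ in range(rounds):
        example = parse_example(generate(build_prompt(pool, k, rng)))
        # Skip malformed completions and exact (case-insensitive) repeats.
        if example is None or example["instruction"].lower() in seen:
            continue
        seen.add(example["instruction"].lower())
        pool.append(example)
    return pool[len(seed_examples):]
```

The new pairs returned by `self_instruct` would then be used as fine-tuning data for the smaller model. Passing `generate` in as a parameter keeps the sketch independent of any particular API client, so it can be exercised with a stub function before pointing it at a real model.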

Read the full article on TechTalks.

For more on AI research: