New technique to create better datasets for self-supervised learning

New technique by Meta and Google automatically curates training data for self-supervised learning through embedding models and hierarchical k-means clustering.

Self-supervised learning (SSL) relieves you of the pain of labeling thousands or even millions of training examples. But SSL also relies heavily on well-balanced and diverse datasets. How can you ensure that your training data has those characteristics without doing a lot of manual curation?

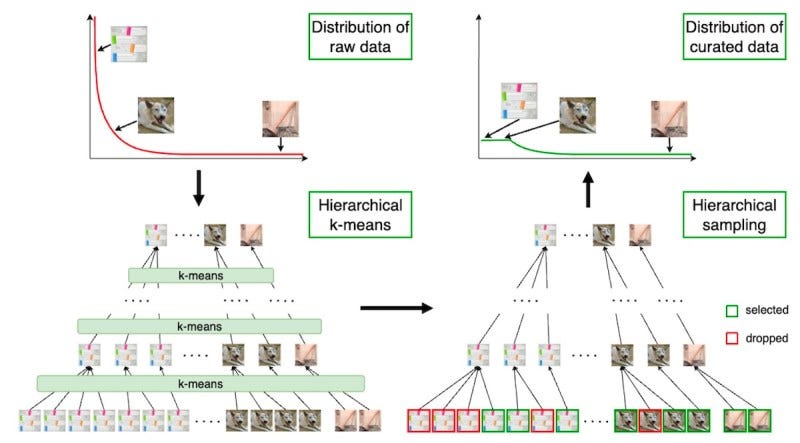

A new paper by researchers at Meta and Google presents an automated data curation technique that takes in raw data (e.g., gathered from the internet) and creates a well-balanced dataset that is not skewed toward any particular concept.

The technique uses embedding models and hierarchical k-means clustering to detect concepts at different levels. The algorithm uses unlabeled data and determines concepts based on the similarity of the embedding values of the examples. At each stage, the algorithm samples examples in a way that makes sure no specific concept is over- or under-represented in the training data.
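To make the idea concrete, here is a minimal Python sketch of two-level clustering followed by balanced sampling. It is not the authors' code, which has not been released. It assumes the examples have already been turned into embedding vectors (plain tuples of floats here), uses only two levels, and splits the sampling budget evenly at each level. The function names `kmeans` and `balanced_sample` and the parameters `k_fine`, `k_coarse`, and `budget` are made up for illustration.

```python
import math
import random


def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's k-means on a list of equal-length float tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize from k random points
    assign = [0] * len(points)
    for _ in range(iters):
        # Assignment step: attach each point to its nearest centroid.
        assign = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:  # keep the old centroid if a cluster went empty
                centroids[c] = tuple(sum(x) / len(members) for x in zip(*members))
    return assign, centroids


def balanced_sample(points, k_fine, k_coarse, budget, seed=0):
    """Return indices into `points`, split evenly across coarse, then fine clusters."""
    rng = random.Random(seed)
    # Level 1: cluster the embeddings into fine-grained concepts.
    fine_of_point, fine_centroids = kmeans(points, k_fine, seed=seed)
    # Level 2: cluster the level-1 centroids into coarser concepts.
    coarse_of_fine, _ = kmeans(fine_centroids, k_coarse, seed=seed)

    # tree[coarse_id][fine_id] -> indices of the points in that fine cluster
    tree = {}
    for idx, fine_id in enumerate(fine_of_point):
        tree.setdefault(coarse_of_fine[fine_id], {}).setdefault(fine_id, []).append(idx)

    chosen = []
    per_coarse = budget // len(tree)  # equal share for each coarse concept
    for fine_groups in tree.values():
        # Equal share for each fine concept, but at least one example each,
        # so a very small budget can be slightly exceeded.
        per_fine = max(1, per_coarse // len(fine_groups))
        for members in fine_groups.values():
            chosen.extend(rng.sample(members, min(per_fine, len(members))))
    return chosen
```

Calling `balanced_sample(embeddings, k_fine=12, k_coarse=3, budget=60)` returns the indices of the selected examples. Because the budget is divided per cluster rather than per example, a concept that dominates the raw data should take up a smaller share of the sample than it does in the original collection, provided the clustering separates it from the rarer concepts.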

Experiments show that foundation models trained on datasets curated through this technique perform nearly on par with models trained on datasets that took hundreds or thousands of hours of manual curation.

Moreover, SSL models trained with this technique achieve state-of-the-art performance with far fewer examples than the best machine learning models.

Self-supervised ML models are usually adapted to downstream tasks through supervised fine-tuning, where they are trained on a small dataset of labeled examples. When the quality of the SSL process is very high, the model generalizes well to out-of-distribution examples and can be fine-tuned for new applications with far fewer labeled examples. This is one of the great promises of this technique and its early results.

This can be a game-changer for industries that don't have access to well-curated data, as well as for tech giants such as Meta and Google, which are sitting on oceans of raw data that have not been prepared for training ML models.

The researchers have not yet released the code but have set up a GitHub page where the code will soon be released. It will be interesting to see how the community builds on it.

Read more about the technique on VentureBeat.

Read the paper on arXiv.