Nvidia shows the power of pruning and distillation with Llama-3.1-Minitron 4B

Llama-3.1-Minitron 4B uses model pruning and distillation to create a small language model (SLM) at a fraction of the cost of training one from scratch.

Nvidia has released Llama-3.1-Minitron 4B, a small language model (SLM) that costs a fraction of what it takes to train a model from scratch, while remaining competitive with similarly sized models.

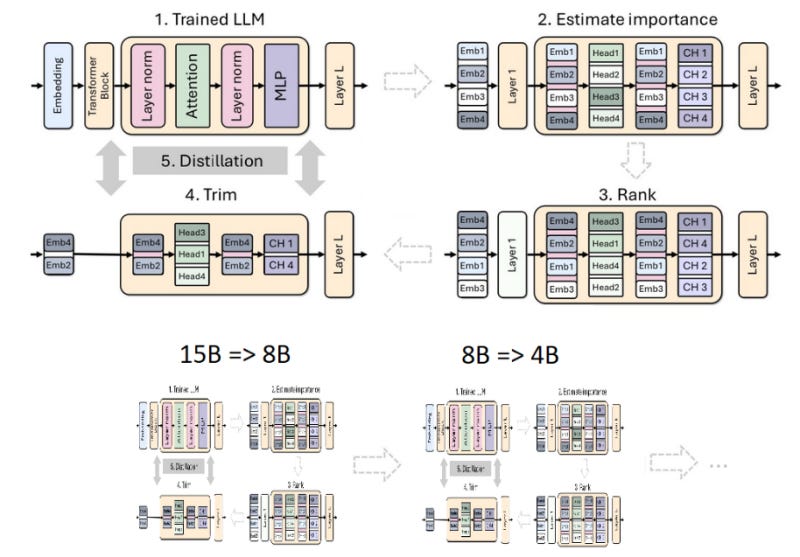

Nvidia built on its earlier research into pruning and distillation. That work showed that with the right combination of the two techniques, you can create SLMs using 40X fewer training tokens than training them from scratch while reaching comparable benchmark performance.

To create Llama-3.1-Minitron 4B, the researchers started from the Llama-3.1-8B model. After first fine-tuning the base model on a relatively small dataset of 94B tokens, they applied two pruning techniques to create two 4B models: “depth-only pruning,” where they removed 50% of the layers, and “width-only pruning,” where they removed 50% of the neurons from some of the dense layers in the transformer blocks.
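The difference between the two pruning strategies can be illustrated on toy data. The sketch below is a minimal, hypothetical illustration rather than Nvidia's implementation: depth pruning simply drops a contiguous block of layers (which block to drop is left as a free choice here), while width pruning scores each intermediate neuron of a simplified two-layer MLP by its mean absolute activation on a small calibration batch and keeps the top-scoring half. The ReLU activation, the importance score, and the function names `depth_prune`, `neuron_importance`, and `width_prune` are all assumptions; Llama's real MLP blocks use a gated activation.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # row-major: matrix[row][col]


def depth_prune(layers: list, drop_start: int, drop_count: int) -> list:
    """Depth pruning: remove a contiguous block of `drop_count` layers starting at `drop_start`."""
    return layers[:drop_start] + layers[drop_start + drop_count:]


def neuron_importance(w_up: Matrix, calib: List[Vector]) -> List[float]:
    """Score each intermediate neuron by its mean absolute ReLU activation on calibration inputs."""
    scores = []
    for row in w_up:  # one row of the up-projection per intermediate neuron
        total = 0.0
        for x in calib:
            pre_activation = sum(w * xi for w, xi in zip(row, x))
            total += abs(max(pre_activation, 0.0))  # apply ReLU, then take the magnitude
        scores.append(total / len(calib))
    return scores


def width_prune(
    w_up: Matrix, w_down: Matrix, calib: List[Vector], keep_fraction: float = 0.5
) -> Tuple[Matrix, Matrix, List[int]]:
    """Width pruning: keep only the most important neurons of a two-layer MLP.

    w_up has shape [d_ff][d_model]; w_down has shape [d_model][d_ff].
    """
    scores = neuron_importance(w_up, calib)
    n_keep = max(1, int(len(w_up) * keep_fraction))
    # Take the indices of the top-scoring neurons, then restore their original order.
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    keep = sorted(ranked[:n_keep])
    new_up = [w_up[j] for j in keep]                       # drop rows of the up-projection
    new_down = [[row[j] for j in keep] for row in w_down]  # drop matching columns of the down-projection
    return new_up, new_down, keep


if __name__ == "__main__":
    # Depth pruning: an 8-layer toy model cut to its first 4 layers.
    print(depth_prune([f"block_{i}" for i in range(8)], drop_start=4, drop_count=4))

    # Width pruning: 4 intermediate neurons reduced to the 2 most active ones.
    w_up = [[1.0, 0.0], [0.0, 0.1], [0.5, 0.5], [-1.0, 0.0]]
    w_down = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
    calib = [[1.0, 1.0], [2.0, 0.0]]
    new_up, new_down, keep = width_prune(w_up, w_down, calib)
    print(keep, new_up, new_down)
```

Either way, the pruned model has roughly half the parameters of the pruned components, but it has lost accuracy, which is why a distillation step follows.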

They then used “classic knowledge distillation” to transfer knowledge from the base model (the teacher) to the pruned models (the students), training each smaller model on the inner activations of the teacher model.
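A common way to write a distillation objective combines a KL-divergence term that pulls the student's output distribution toward the teacher's with a mean-squared-error term on intermediate activations. The sketch below shows that generic form for a single token position; it is not Nvidia's exact loss. The weighting `alpha`, the `temperature`, and the assumption that teacher and student hidden states have the same size (in practice a learned projection may be needed when widths differ) are all illustrative choices, and the function names are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) for discrete distributions; entries where p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def activation_mse(teacher_hidden: List[float], student_hidden: List[float]) -> float:
    """Mean squared error between teacher and student hidden states (assumed equal size)."""
    return sum((t - s) ** 2 for t, s in zip(teacher_hidden, student_hidden)) / len(teacher_hidden)


def distillation_loss(
    teacher_logits: List[float],
    student_logits: List[float],
    teacher_hidden: List[float],
    student_hidden: List[float],
    temperature: float = 1.0,
    alpha: float = 0.5,
) -> float:
    """Weighted sum of an output-logit KL term and an intermediate-activation MSE term."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    logit_term = kl_divergence(p_teacher, p_student)
    hidden_term = activation_mse(teacher_hidden, student_hidden)
    return alpha * logit_term + (1.0 - alpha) * hidden_term


if __name__ == "__main__":
    # A student that already matches the teacher incurs zero loss.
    print(distillation_loss([2.0, 1.0, 0.0], [2.0, 1.0, 0.0], [0.5, -0.5], [0.5, -0.5]))
    # A student whose predictions are reversed is penalized.
    print(distillation_loss([2.0, 1.0, 0.0], [0.0, 1.0, 2.0], [1.0], [0.0]))
```

Because the teacher supplies a full probability distribution (and activations) for every token, each training example carries more signal than a single next-token label, which is one intuition for why distillation needs far fewer tokens than pretraining.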

Finally, they fine-tuned the pruned models using NeMo-Aligner, a toolkit that supports various alignment algorithms such as reinforcement learning from human feedback (RLHF), direct preference optimization (DPO), and Nvidia’s own SteerLM.
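Of the alignment algorithms listed, DPO has a particularly compact objective: for a prompt with a preferred ("chosen") and a dispreferred ("rejected") response, it rewards the policy for raising the chosen response's log-probability relative to a frozen reference model more than it raises the rejected one's. The sketch below computes the standard per-example DPO loss from precomputed sequence log-probabilities. It is an illustration of the objective, not NeMo-Aligner's API; the function names and the default `beta=0.1` are assumptions.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Per-example DPO loss from summed log-probabilities of each full response."""
    chosen_margin = policy_chosen_logp - ref_chosen_logp        # how much the policy boosted the chosen answer
    rejected_margin = policy_rejected_logp - ref_rejected_logp  # how much it boosted the rejected answer
    return -log_sigmoid(beta * (chosen_margin - rejected_margin))


if __name__ == "__main__":
    # Policy identical to the reference: loss is log(2).
    print(dpo_loss(-1.0, -2.0, -1.0, -2.0))
    # Policy has pushed down the rejected response: loss drops below log(2).
    print(dpo_loss(-1.0, -3.0, -1.0, -2.0))
```

Unlike RLHF, this needs no separate reward model or sampling loop during training, only the policy and reference log-probabilities on a fixed preference dataset.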

Their experiments show that despite its small training corpus, Llama-3.1-Minitron 4B performs comparably to other SLMs such as Phi-2 2.7B, Gemma2 2.6B, and Qwen2-1.5B. It is worth noting, however, that Llama-3.1-Minitron 4B is larger than those models, roughly 1.5 to 2.7 times their size.

But those models were trained on trillions of tokens, which is much more expensive. This creates an interesting new trade-off: you pay less for training in exchange for higher memory costs during inference.
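A back-of-envelope calculation makes the inference side of that trade-off concrete. The sketch below estimates the memory needed just to hold each model's weights, assuming the nominal parameter counts in the model names and 16-bit (2-byte) weights. It deliberately ignores the KV cache, activations, and runtime overhead, and released checkpoints may contain somewhat more or fewer parameters than their names suggest.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Nominal parameter counts taken from the model names (an approximation).
MODELS = {
    "Llama-3.1-Minitron 4B": 4.0e9,
    "Phi-2 2.7B": 2.7e9,
    "Gemma2 2.6B": 2.6e9,
    "Qwen2-1.5B": 1.5e9,
}

if __name__ == "__main__":
    for name, params in MODELS.items():
        print(f"{name}: ~{weight_memory_gb(params):.1f} GB of weights at 16-bit precision")
```

Under these assumptions the weights take about 8 GB for Llama-3.1-Minitron 4B versus about 3 to 5.4 GB for the smaller models. Quantizing to 8 bits (`bytes_per_param=1.0`) halves every figure but leaves the ratio between models unchanged.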

The team has released the width-pruned version of the model on Hugging Face under the Nvidia Open Model License, which allows for commercial use.

This is another reminder of the value of the open-source community and of openly sharing models and research.

Read more about Llama-3.1-Minitron 4B on VentureBeat

Read the technical report on Nvidia’s blog

Read the pruning and distillation study on arXiv