OpenAI's open source play: how gpt-oss aims to reset the AI market

The new gpt-oss open-weight models undercut OpenAI's own closed LLMs, marking a strategic pivot designed to reshape the competitive AI market.

OpenAI has released gpt-oss, its first open-weight language model series since GPT-2 in 2019. The release includes two text-only models, gpt-oss-120b and gpt-oss-20b, both available under the permissive Apache 2.0 license. The larger model achieves near-parity with OpenAI’s own closed o4-mini model on core reasoning benchmarks. That marks a significant strategic pivot, one that directly challenges both the existing AI market and the company's own business model. The move also validates the growing influence of the open-source ecosystem, which has seen a surge of powerful models from international competitors.

Under the hood of gpt-oss

Both gpt-oss models are Transformers built on a Mixture-of-Experts (MoE) architecture, a design that has become increasingly standard for efficient frontier models and is also used in other popular models such as DeepSeek-R1. An MoE layer contains many smaller "expert" sub-networks plus a learned router. For each token, the router sends the input to only a few relevant experts instead of engaging the entire network, so the computation per token scales with the number of active parameters rather than the total parameter count. This drastically reduces the computational load of inference.
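To make the routing step concrete, here is a minimal pure-Python sketch of top-k expert routing for a single token. It is an illustration under stated assumptions, not gpt-oss's implementation: the toy experts are hypothetical scaling functions, the router logits are supplied directly instead of being computed by a learned layer, and the softmax is taken over the selected experts only, which is one common variant of MoE gating.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Assumption: the weights are a softmax over the selected experts only.
    """
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))

def moe_forward(x, experts, router_logits, k):
    """Run only the k routed experts on x and sum their outputs by router weight."""
    out = [0.0] * len(x)
    for idx, w in route_token(router_logits, k):
        y = experts[idx](x)  # experts that were not selected are never called
        out = [o + w * yi for o, yi in zip(out, y)]
    return out

# Hypothetical toy experts: expert i multiplies its input by i and logs the call.
calls = []

def make_expert(scale):
    def expert(x):
        calls.append(scale)
        return [scale * xi for xi in x]
    return expert

experts = [make_expert(i) for i in range(5)]
logits = [0.0, 5.0, 1.0, 3.0, 2.0]
print(route_token(logits, k=2))  # experts 1 and 3 are selected
print(moe_forward([1.0], experts, logits, k=2))
print(calls)  # [1, 3]: only two of the five experts ran
```

With 128 experts and k = 4 (the gpt-oss-120b configuration), the same loop would call only 4 of the 128 expert functions per token; the unselected experts still occupy memory but cost no computation for that token.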

The larger gpt-oss-120b has 117 billion total parameters spread across 128 experts; it activates four experts per token, for 5.1 billion active parameters per token. The smaller gpt-oss-20b has 21 billion total parameters and 32 experts; it also activates four experts per token, for 3.6 billion active parameters. Both models support a 128,000-token context length and use grouped multi-query attention to reduce memory use during inference, along with Rotary Positional Embeddings (RoPE) to encode token positions.
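A quick back-of-the-envelope check shows how sparse these models are per token. The sketch below uses only the figures quoted above; the `MoEConfig` class is a hypothetical helper for illustration.

```python
from dataclasses import dataclass

@dataclass
class MoEConfig:
    """Hypothetical container for the published gpt-oss size figures."""
    name: str
    total_params_b: float   # total parameters, in billions
    active_params_b: float  # parameters used per token, in billions
    num_experts: int
    active_experts: int

    def active_param_share(self) -> float:
        return self.active_params_b / self.total_params_b

    def active_expert_share(self) -> float:
        return self.active_experts / self.num_experts

CONFIGS = [
    MoEConfig("gpt-oss-120b", 117, 5.1, 128, 4),
    MoEConfig("gpt-oss-20b", 21, 3.6, 32, 4),
]

for c in CONFIGS:
    print(f"{c.name}: {c.active_param_share():.1%} of parameters, "
          f"{c.active_expert_share():.1%} of experts active per token")
# gpt-oss-120b: 4.4% of parameters, 3.1% of experts active per token
# gpt-oss-20b: 17.1% of parameters, 12.5% of experts active per token
```

In both models the active-parameter share is larger than the active-expert share. That is what you would expect if, as in a typical MoE Transformer, components such as attention layers, embeddings, and the router are used by every token regardless of which experts are selected.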

OpenAI also open-sourced its tokenizer, o200k_harmony, alongside the models. However, while the weights are open, the release is not fully open source in the strictest sense: it omits the training data, the training code, and the original base models. That limits its usefulness for researchers who want to replicate the training process or deeply modify the architecture.

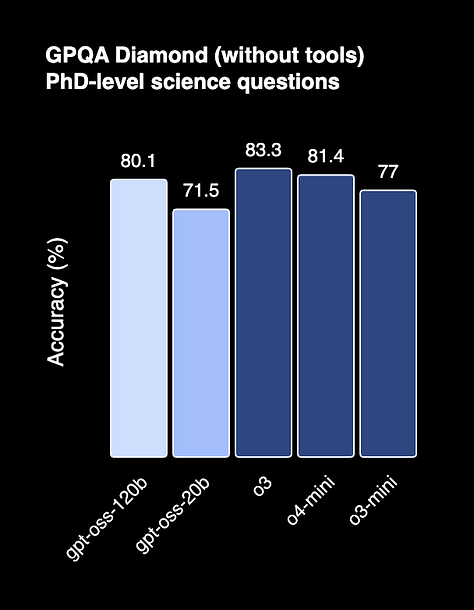

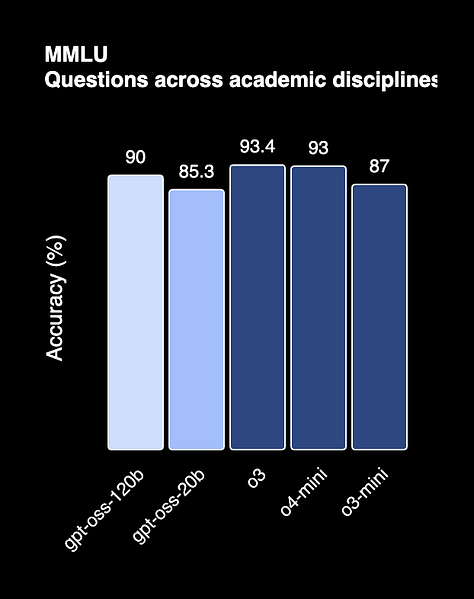

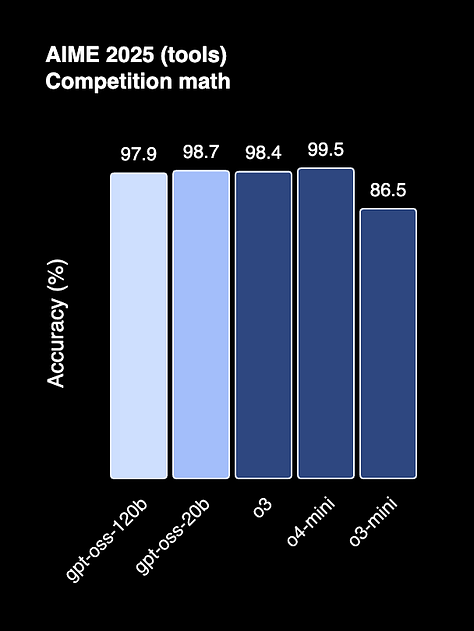

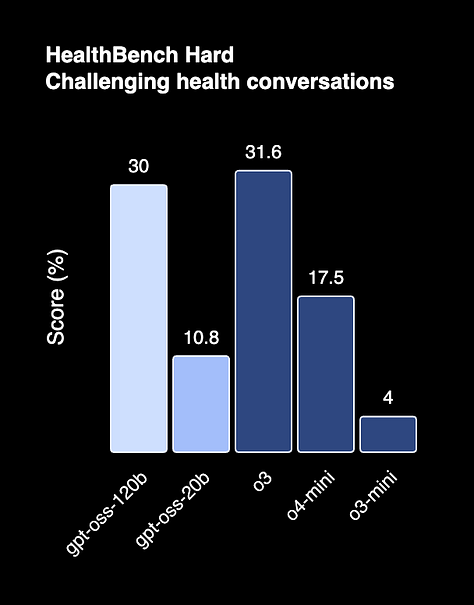

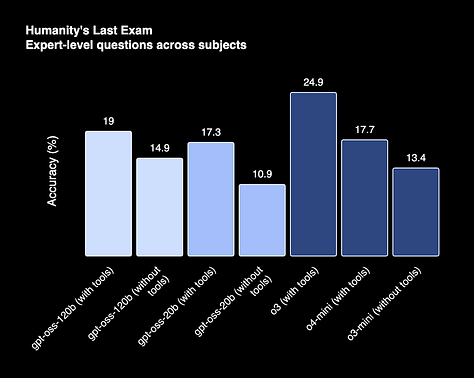

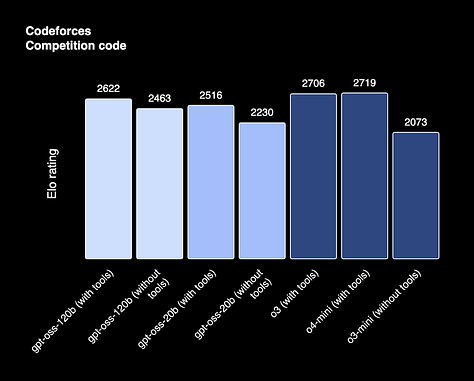

A new open-source performance benchmark

On key benchmarks, gpt-oss-120b matches or exceeds OpenAI's proprietary o4-mini in general problem-solving (MMLU), tool use (TauBench), and the notoriously difficult Humanity’s Last Exam. It outperforms o4-mini on competition mathematics (AIME 2024 and 2025) and health-related queries (HealthBench). The smaller gpt-oss-20b performs comparably to o3-mini, making it a strong candidate for local and on-device applications.

The models are designed for agentic workflows, with strong instruction following and support for tool use such as web search and code execution. However, gpt-oss is strictly text-only, so developers who need multimodal capabilities, or OpenAI's hosted built-in tools, must still turn to OpenAI’s API platform.

Permissive licensing and broad accessibility

The decision to use the Apache 2.0 license makes gpt-oss highly attractive for commercial use. It places no restrictions on revenue generation or modification, unlike Meta's Llama license, which requires a separate commercial agreement for services with over 700 million monthly active users.

Open weights under this license let enterprises in highly regulated fields like finance and healthcare deploy the models on their own hardware, keeping sensitive data in-house. The models are also quantized for efficient deployment, with their MoE weights stored in the 4-bit MXFP4 format: gpt-oss-120b can run on a single 80GB GPU, while gpt-oss-20b requires just 16GB of memory.
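These memory figures are consistent with simple arithmetic on the weight format. MXFP4, as defined in the OCP Microscaling (MX) specification, stores each value in 4 bits and shares one 8-bit scale across a block of 32 values, which works out to about 4.25 bits per parameter. The sketch below assumes, for simplicity, that every weight uses that format (in gpt-oss only the MoE weights do) and ignores the KV cache and activations, so it estimates a lower bound on memory rather than an exact footprint. The helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(params_billion: float, bits_per_param: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_param / 8

MXFP4_BITS = 4 + 8 / 32  # 4-bit element plus an 8-bit scale shared by 32 elements
BF16_BITS = 16           # assumption: the usual unquantized baseline

for name, params_b, budget_gb in [("gpt-oss-120b", 117, 80), ("gpt-oss-20b", 21, 16)]:
    mx = weight_memory_gb(params_b, MXFP4_BITS)
    bf = weight_memory_gb(params_b, BF16_BITS)
    print(f"{name}: ~{mx:.1f} GB in MXFP4 vs ~{bf:.0f} GB in BF16 "
          f"(budget {budget_gb} GB, fits: {mx < budget_gb})")
# gpt-oss-120b: ~62.2 GB in MXFP4 vs ~234 GB in BF16 (budget 80 GB, fits: True)
# gpt-oss-20b: ~11.2 GB in MXFP4 vs ~42 GB in BF16 (budget 16 GB, fits: True)
```

At 16 bits per parameter, neither model's weights alone would fit in the stated memory budget, which suggests the 4-bit format is what makes single-GPU and 16GB deployments practical.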

To accelerate adoption, OpenAI has partnered with a wide range of platforms, including Azure, AWS, and Hugging Face, and hardware providers like NVIDIA and AMD.

OpenAI's strategic calculus

This release appears to be a calculated response to several market pressures. One key driver is the need to compete with a thriving open-source ecosystem, particularly high-performing models from Chinese firms like DeepSeek and Alibaba. In fact, OpenAI first announced its intention to release an open model after DeepSeek-R1 came out.

gpt-oss clearly undercuts a significant part of OpenAI’s market, especially for its non-frontier models. Running gpt-oss, for example, will cost much less than using its closed equivalent, o4-mini, which could cannibalize some of OpenAI’s own revenue. However, several other open models already match the performance of OpenAI's non-frontier models (e.g., Kimi-K2, GLM-4.5), and OpenAI executives acknowledged that many of their API customers already use a mix of paid OpenAI models and open-source alternatives.

gpt-oss is a deliberate effort to bring those users back into the OpenAI fold and keep them within a family of models that will stay compatible with the closed models the lab releases in the future.

In the cutthroat AI space, releasing open models that cost hundreds of millions of dollars to train is certainly not an act of charity.