Optimize LLM inference with "attention offloading"

One of the most interesting papers I've reviewed in a while.

What do you do when you can't get your hands on enough A100s and H100s for LLM inference? You combine the ones you have with consumer-grade GPUs and send each operation to the type of hardware best suited to it.

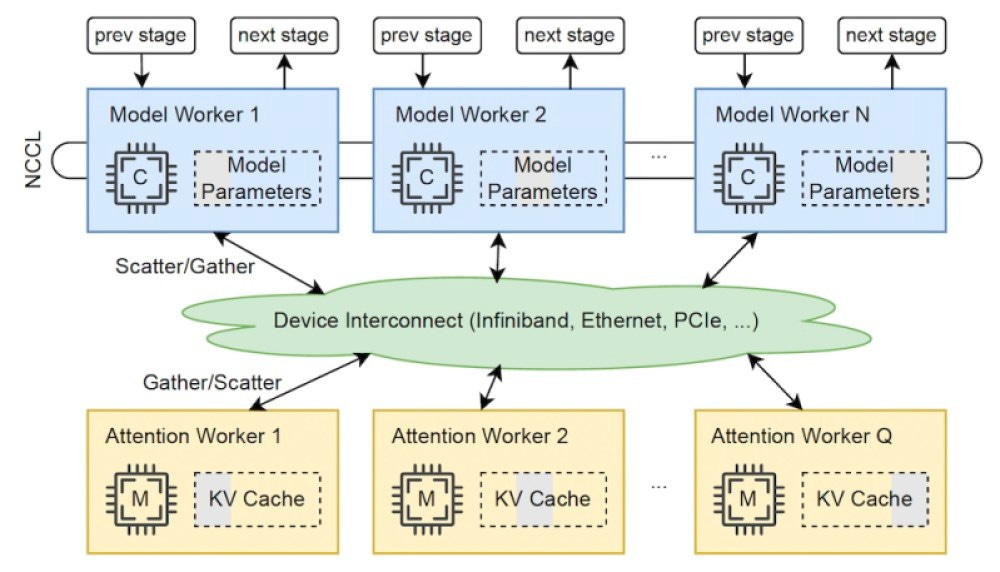

"Attention offloading," a new technique developed by researchers at Tsinghua University, creates a heterogeneous hardware stack composed of high-end accelerators (e.g., H100) and consumer GPUs (e.g., RTX 3090).

Attention offloading builds on the observation that LLM inference operations fall into two groups: compute-intensive and memory-intensive. It then distributes them across the hardware stack in a way that plays to the strengths of each type of accelerator.
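
To see why the split falls along these lines, here is a minimal Python sketch (not taken from the paper) that estimates arithmetic intensity, i.e. FLOPs per byte of memory traffic, for one decode step. It assumes fp16 tensors, a hypothetical hidden size of 4096, and a hypothetical `RIDGE_POINT` of 100 FLOPs/byte standing in for a high-end accelerator's compute-to-bandwidth ratio. The `place` routing rule is illustrative only, not the paper's scheduler, and the attention estimate ignores softmax and the query vector.

```python
# Minimal sketch (not from the paper): compare the arithmetic intensity of a
# weight matmul and of decode-time attention, then route each op using a
# hypothetical roofline threshold.

BYTES_PER_ELEM = 2     # assumption: fp16/bf16 tensors
RIDGE_POINT = 100.0    # hypothetical FLOPs/byte where a high-end GPU stops being memory-bound


def linear_intensity(batch: int, d_model: int) -> float:
    """FLOPs per byte for multiplying `batch` token vectors by a d_model x d_model weight."""
    flops = 2 * batch * d_model * d_model  # one multiply-add per weight per token
    # The weight is read once and shared by the whole batch;
    # each token's input is read and its output written once.
    bytes_moved = BYTES_PER_ELEM * (d_model * d_model + 2 * batch * d_model)
    return flops / bytes_moved


def attention_intensity(batch: int, seq_len: int, d_model: int) -> float:
    """FLOPs per byte for attending one new token per request over its KV cache."""
    # q.K^T and p.V each cost about 2 * seq_len * d_model FLOPs per request.
    flops = batch * 2 * (2 * seq_len * d_model)
    # Each request reads its own K and V caches; nothing is shared across the batch.
    bytes_moved = batch * BYTES_PER_ELEM * (2 * seq_len * d_model)
    return flops / bytes_moved


def place(intensity: float, ridge_point: float = RIDGE_POINT) -> str:
    """Hypothetical policy: compute-bound ops stay on the high-end accelerator."""
    if intensity >= ridge_point:
        return "high-end accelerator"
    return "consumer GPU"


if __name__ == "__main__":
    for batch in (8, 256):
        for name, ai in (
            ("linear", linear_intensity(batch, 4096)),
            ("attention", attention_intensity(batch, 4096, 4096)),
        ):
            print(f"batch={batch:3d} {name:9s} {ai:7.1f} FLOPs/byte -> {place(ai)}")
```

In this simplified model, attention stays at 1 FLOP per byte regardless of batch size, because every request brings its own KV cache. The weight matmul's intensity grows with the batch because the weights are shared. That asymmetry is what makes it reasonable to move attention to cheaper hardware while keeping the matmuls on the A100s and H100s.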

Specifically, the KV cache and the attention operations that read it depend more on GPU memory capacity and bandwidth than on raw compute, and their memory consumption grows with the length of the input sequence. Attention offloading therefore sends them to consumer-grade GPUs, which cost a fraction of the price of high-end accelerators. The A100s and H100s are reserved for the model weights and the remaining inference operations.
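
A quick back-of-the-envelope calculation shows how fast the KV cache grows. The sketch below assumes a hypothetical 7B-class model shape (32 layers, 32 KV heads, head dimension 128, fp16) and a hypothetical budget of 20 GiB of KV cache per consumer GPU; `consumer_gpus_needed` is an illustrative helper that ignores fragmentation and activations, not part of the paper's system.

```python
# Minimal sketch: how KV-cache memory scales with sequence length, and how many
# consumer GPUs would be needed to hold it. Model shape and per-GPU budget are
# hypothetical.
import math


def kv_cache_bytes(batch: int, seq_len: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_elem: int = 2) -> int:
    """Bytes for K and V in all layers: 2 tensors of [batch, n_kv_heads, seq_len, head_dim] per layer."""
    return 2 * n_layers * batch * n_kv_heads * seq_len * head_dim * bytes_per_elem


def consumer_gpus_needed(kv_bytes: int, per_gpu_budget_bytes: int) -> int:
    """GPUs needed if the cache is sharded evenly (hypothetical helper; ignores fragmentation)."""
    return math.ceil(kv_bytes / per_gpu_budget_bytes)


# Hypothetical 7B-class shape: 32 layers, 32 KV heads, head_dim 128, fp16.
SHAPE = dict(n_layers=32, n_kv_heads=32, head_dim=128)
PER_GPU_BUDGET = 20 * 2**30  # hypothetical: 20 GiB per consumer GPU reserved for KV cache

if __name__ == "__main__":
    for seq_len in (1024, 4096, 16384):
        kv = kv_cache_bytes(batch=16, seq_len=seq_len, **SHAPE)
        print(f"seq_len={seq_len:6d}: {kv / 2**30:6.1f} GiB KV cache, "
              f"{consumer_gpus_needed(kv, PER_GPU_BUDGET)} consumer GPU(s)")
```

Under these assumptions each token costs 0.5 MiB of cache, so the memory needed scales linearly with both sequence length and batch size, while the weights stay fixed.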

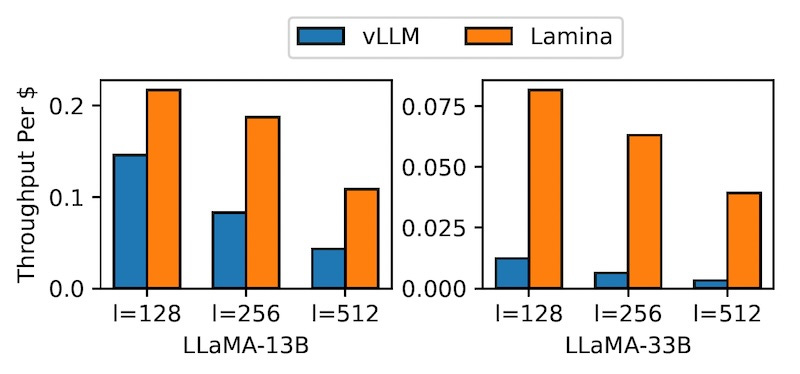

The benefits of attention offloading become more pronounced as the input length and the inference batch size grow.
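
One rough way to see this trend is to ask what fraction of the bytes read in a decode step comes from the KV cache, which is the part offloaded to consumer GPUs. This is a simplified model of my own, not the paper's analysis: it assumes a hypothetical 7B-parameter fp16 model, the same hypothetical per-token KV size as a 32-layer, 32-head, head-dimension-128 model, and ignores activations and other overheads.

```python
# Minimal sketch: share of per-step memory traffic that comes from reading the
# KV cache (the part attention offloading moves to consumer GPUs). Sizes are
# hypothetical; activations and other overheads are ignored.

WEIGHT_BYTES = 7 * 10**9 * 2                # hypothetical 7B-parameter model in fp16
KV_BYTES_PER_TOKEN = 2 * 32 * 32 * 128 * 2  # K+V, 32 layers, 32 heads, head_dim 128, fp16


def kv_share_of_traffic(batch: int, seq_len: int,
                        weight_bytes: int = WEIGHT_BYTES,
                        kv_bytes_per_token: int = KV_BYTES_PER_TOKEN) -> float:
    """Fraction of bytes read in one decode step that belong to the KV cache."""
    kv = batch * seq_len * kv_bytes_per_token  # every request reads its whole cache
    return kv / (kv + weight_bytes)            # weights are read once per step, shared by the batch


if __name__ == "__main__":
    for batch in (1, 8, 32):
        row = [f"{kv_share_of_traffic(batch, s):5.0%}" for s in (1024, 4096, 16384)]
        print(f"batch={batch:2d}: " + "  ".join(row))
```

The weight traffic is fixed per step, while KV-cache traffic grows with batch × sequence length, so the offloaded share climbs toward 100% as either grows.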

Experiments show that, at an equal budget, attention offloading can deliver several times the throughput of a homogeneous stack composed only of high-end accelerators.

Read my review of the paper here.

Read the original paper here.

Great paper. I wonder if it can be implemented effectively between GPUs made by different vendors (e.g., AMD and Nvidia).