Qwen3 is another win for open LLMs

Alibaba's Qwen3 open-weight LLMs combine direct response and chain-of-thought reasoning in a single architecture, and compete with the leading models.

Alibaba has just released Qwen3, the latest generation of its flagship large language model (LLM) family. This release is noteworthy for several reasons: it includes models that are highly competitive with leading LLMs on key benchmarks, and it is available under a permissive license, making it useful for a wide range of applications. A standout feature is its hybrid approach, which packs both direct and reasoning-based inference into the same model, a first for open LLMs.

What is Qwen3?

Qwen3 is a family of LLMs comprising eight distinct models released under the permissive Apache 2.0 license, allowing for widespread use and modification. These fall into two main categories:

First, there are two Mixture-of-Experts (MoE) models: the flagship Qwen3-235B-A22B, which has 235 billion total parameters but only activates 22 billion during inference, and a smaller Qwen3-30B-A3B, with 30 billion total and 3 billion activated parameters. MoE architectures are designed for efficiency; they consist of many "expert" sub-networks but only engage a fraction of them for any given task, reducing computational cost compared to activating the entire model. Qwen3 uses the more modern approach to MoE, with multiple active experts in each pass (8 of 128), enabling the model to learn more complex interactions between the experts during training (this is the approach used in Llama 4 and DeepSeek-V3).
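To make the "8 of 128" idea concrete, here is a minimal sketch of top-k expert routing for a single token. It is not Qwen3's actual implementation: the experts are hypothetical scalar functions, the router logits are random, and renormalizing the weights of the selected experts is one common choice rather than something the release notes confirm.

```python
import math
import random

NUM_EXPERTS = 128  # experts per MoE layer, as stated above
TOP_K = 8          # experts activated per token, as stated above


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k=TOP_K):
    """Pick the top_k experts for one token and renormalize their weights.

    Returns (expert_index, weight) pairs whose weights sum to 1.
    """
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    mass = sum(probs[i] for i in chosen)
    return [(i, probs[i] / mass) for i in chosen]


def moe_output(token, experts, router_logits, top_k=TOP_K):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](token) for i, w in route_token(router_logits, top_k))


rng = random.Random(0)
# Hypothetical stand-ins: each "expert" is a scalar function, not a real sub-network.
experts = [lambda x, scale=rng.uniform(0.5, 1.5): scale * x for _ in range(NUM_EXPERTS)]
router_logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

selected = route_token(router_logits)
print(len(selected), "of", NUM_EXPERTS, "experts run for this token")
print("active parameter share of Qwen3-235B-A22B:", round(22 / 235, 3))
```

The other 120 experts are never called for this token, which is where the compute saving comes from. The last line simply divides the activated parameter count by the total quoted above.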

The release also includes six "dense" models, where all parameters are active during inference. These range in size from Qwen3-32B down to Qwen3-0.6B, providing options suitable for various hardware capabilities and application needs.
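As a rough guide to what "various hardware capabilities" means in practice, the sketch below estimates the memory needed for the weights alone. It assumes the nominal parameter counts in the model names and a fixed number of bytes per parameter (2 for 16-bit weights, 0.5 for 4-bit quantization), and it ignores the KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
def weight_memory_gb(params_billion, bytes_per_param=2.0):
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bytes_per_param


# Nominal sizes taken from the model names; exact parameter counts may differ.
MODELS = {
    "Qwen3-0.6B": 0.6,
    "Qwen3-32B": 32.0,
    "Qwen3-30B-A3B": 30.0,     # MoE: sized by total parameters, not active ones
    "Qwen3-235B-A22B": 235.0,  # MoE: sized by total parameters, not active ones
}

for name, size in MODELS.items():
    fp16 = weight_memory_gb(size)
    int4 = weight_memory_gb(size, bytes_per_param=0.5)
    print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

Note that the MoE models are sized by their total parameter count here: activating fewer parameters reduces compute per token, but all experts typically still have to be stored.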

Perhaps the most intriguing aspect of Qwen3 is its hybrid approach to problem-solving. The models support two distinct operational modes:

Thinking mode: When faced with complex problems requiring step-by-step reasoning (like math problems or intricate coding tasks), the model can operate in this mode. It takes more time to generate a chain-of-thought (CoT) before providing a final answer.

Non-Thinking mode: For simpler queries, the model can provide direct responses without engaging in extended deliberation.

Claude 3.7 Sonnet was the first model to provide this kind of hybrid inference. Qwen3 is the first major open model to offer a similar structure, and its developers have also published a rough recipe for how they achieved it.

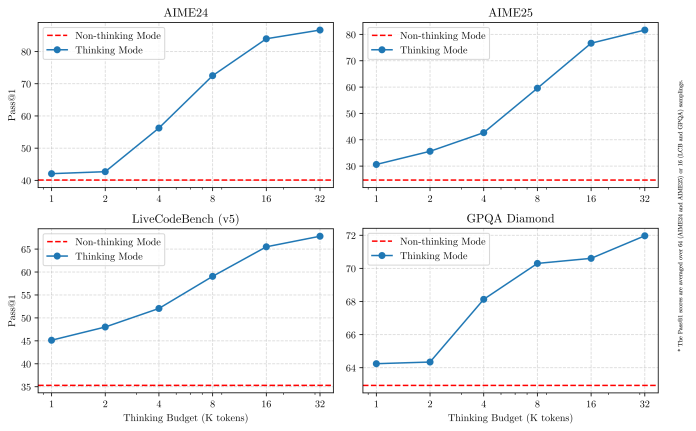

This dual-mode design makes resource allocation more efficient and improves the user experience. With other model providers, you have to choose from a wide range of confusingly named LLMs. With Qwen3, you can switch between thinking and non-thinking mode by toggling a switch in your prompt. Moreover, users can define a “thinking budget” that caps how many tokens the model can spend on the CoT. This allows for a flexible balance between inference speed, cost, and output quality. Alibaba notes that Qwen3's performance improves smoothly as the allocated reasoning budget grows, making it easier to configure task-specific cost-performance trade-offs.
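The prompt-level switch can be sketched with two small helpers. This assumes the `/think` and `/no_think` soft-switch tags and the `<think>...</think>` output block described in the Qwen3 model documentation; the helper names are made up for illustration, and the sample string is hand-written, not real model output.

```python
THINK_OPEN, THINK_CLOSE = "<think>", "</think>"


def with_mode(user_prompt, thinking):
    """Append a soft-switch tag asking for thinking or non-thinking mode."""
    tag = "/think" if thinking else "/no_think"
    return f"{user_prompt.rstrip()} {tag}"


def split_reasoning(generated):
    """Split raw generated text into (reasoning, answer).

    Reasoning is an empty string when no complete think block is present.
    """
    start = generated.find(THINK_OPEN)
    end = generated.find(THINK_CLOSE)
    if start == -1 or end == -1 or end < start:
        return "", generated.strip()
    reasoning = generated[start + len(THINK_OPEN):end].strip()
    answer = generated[end + len(THINK_CLOSE):].strip()
    return reasoning, answer


prompt = with_mode("How many prime numbers are there below 20?", thinking=True)
print(prompt)

# Hand-written sample in the expected shape, not real model output.
sample = "<think>Primes below 20: 2, 3, 5, 7, 11, 13, 17, 19.</think>There are 8."
reasoning, answer = split_reasoning(sample)
print("reasoning tokens (whitespace split):", len(reasoning.split()))
print("answer:", answer)
```

Separating the reasoning from the answer also gives you a simple way to measure how much of a thinking budget a response actually consumed.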

Capabilities of Qwen3

Qwen3 has been trained to support 119 languages and dialects, spanning families from Indo-European and Sino-Tibetan to Afro-Asiatic and Turkic.

The models have also been optimized for coding tasks and agentic applications. They support the Model Context Protocol (MCP), a widely adopted standard that makes it easier to integrate them with external tools. Examples shared by the Qwen3 team show the model using multiple tools during inference, including a code interpreter and a search engine.

Benchmark results show Qwen3 models performing competitively. The large Qwen3-235B-A22B rivals other top-tier models like Gemini-2.5-Pro and Grok-3 in areas like coding and math. The smaller dense models often match the performance of larger models from the previous Qwen2.5 generation (e.g., Qwen3-4B rivals Qwen2.5-72B-Instruct). The MoE models achieve performance comparable to much larger dense models while using only a fraction of the active parameters.

Qwen3 training process

The capabilities of Qwen3 are built upon a foundation of extensive training data and a sophisticated multi-stage training process.

For pre-training, Qwen3 was trained on approximately 36 trillion tokens of data, nearly double the amount used for its predecessor, Qwen2.5. This dataset includes content gathered from the web and extracted from PDF-like documents. The team used their Qwen2.5-Math and Qwen2.5-Coder models to generate math and code data.

The pre-training itself occurred in three stages. Initially, the models were trained on 30 trillion tokens using a 4K context window for basic language skills and general knowledge. The second stage focused on enhancing knowledge in STEM, coding, and reasoning by training on an additional 5 trillion tokens skewed towards these domains. Finally, high-quality, long-context data was used to extend the models' effective context length to 32K.

The post-training pipeline involved four stages to develop reasoning capabilities and integrate the hybrid thinking mode:

Long CoT cold start: Models were fine-tuned for foundational reasoning skills on examples including the CoT for reasoning tasks across math, coding, logic, and STEM problems.

Reasoning-based reinforcement learning (RL): The team used reinforcement learning techniques with rule-based rewards to improve the model's ability to explore complex reasoning paths effectively.

Thinking mode fusion: To integrate the fast, non-thinking mode, the reasoning-capable model from stage 2 was further fine-tuned on a mix of long CoT data and standard instruction-following data.

General reinforcement learning: Finally, RL was applied across over 20 general tasks (like following complex instructions, maintaining formats, and agentic behaviors) to refine overall abilities and minimize undesired outputs.
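The "rule-based rewards" in the second stage can be illustrated with a verifiable-answer check. The article does not say which rules the Qwen team used, so the `\boxed{...}` convention and the exact-match comparison below are assumptions chosen for illustration, and both function names are hypothetical.

```python
import re

# Assumed convention: the final answer appears inside \boxed{...}.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_final_answer(completion):
    """Return the content of the last boxed answer in the text, or None."""
    matches = BOXED.findall(completion)
    return matches[-1].strip() if matches else None


def rule_based_reward(completion, reference):
    """Return 1.0 if the extracted answer matches the reference, else 0.0.

    The score comes from a fixed rule, not from a learned reward model.
    """
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0
    return 1.0 if answer == reference.strip() else 0.0


good = "2 + 2 is 4, doubled is 8, so the result is \\boxed{8}"
bad = "I think the result is probably eight."
print(rule_based_reward(good, "8"), rule_based_reward(bad, "8"))
```

A rule like this only scores the final answer, which leaves the model free to explore different reasoning paths on the way there.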

Where to access Qwen3

You can try Qwen3 through the Qwen Chat web interface. For developers and researchers, the post-trained models (like Qwen3-30B-A3B) and their base pre-trained versions are available on popular platforms such as Hugging Face, ModelScope, and Kaggle.

If you want to run a Qwen3 server, the models are compatible with SGLang and vLLM. For local deployment, they are available through Ollama, LM Studio, MLX, llama.cpp, and KTransformers.
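Both SGLang and vLLM can expose an OpenAI-compatible HTTP API, so a served model can be queried with nothing but the standard library. The sketch below assumes a server is already running. The base URL and port are assumptions to adjust for your setup, and the model name must match whatever the server was launched with.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # assumption: local server address and port
MODEL = "Qwen/Qwen3-30B-A3B"           # assumption: the model name the server uses


def build_chat_request(prompt, max_tokens=512):
    """Build an OpenAI-style chat completion payload."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def chat(prompt, base_url=BASE_URL, timeout=60):
    """Send one prompt to the server and return the reply text."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


# Inspect the payload without needing a running server.
print(json.dumps(build_chat_request("Summarize Qwen3 in one sentence."), indent=2))
```

Once a server is up, `chat("Summarize Qwen3 in one sentence.")` returns the model's reply as a string.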