REFRAG: Meta’s framework delivers a 30x speed boost for RAG

By compressing retrieved documents into efficient embeddings, REFRAG slashes latency and memory costs without modifying the LLM architecture or degrading response quality.

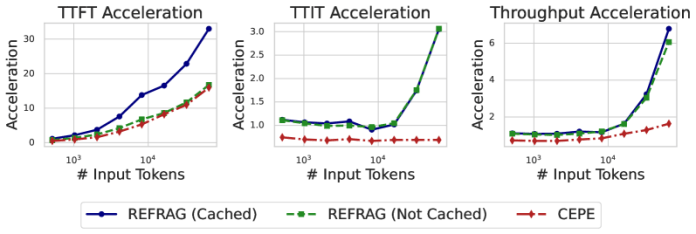

Meta’s new Superintelligence Labs has released a paper introducing a technique that optimizes retrieval-augmented generation (RAG) systems for significantly higher throughput and better performance. The technique, called REFRAG, accelerates time-to-first-token by up to 30.85x without increasing perplexity, a measure of how well a model predicts text (lower is better). In other words, it achieves a massive speedup without a measurable loss in response quality. REFRAG also extends the effective context size of language models by 16x, allowing them to process much more information at once.

The limits of RAG

Large language models (LLMs) have shown impressive capabilities in learning from the context provided in their prompt. In RAG systems, this context includes documents retrieved from external knowledge bases. However, increasing the prompt length with more retrieved information leads to higher latency and greater memory consumption during inference. Longer prompts require more memory for the key-value (KV) cache, a mechanism that stores intermediate calculations to speed up generation. This cache scales linearly with the prompt's length.
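
To make the linear scaling concrete, here is a back-of-the-envelope sketch of KV cache size. The default dimensions (32 layers, 32 key-value heads, head size 128, 16-bit values) are assumptions roughly matching a 7B-parameter LLaMA-2-style model, not figures from the paper, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(seq_len, n_layers=32, n_kv_heads=32, head_dim=128, bytes_per_value=2):
    """Estimate KV cache size for one sequence (assumed model dimensions)."""
    # Each layer stores one key and one value vector per head for every token.
    per_token = 2 * n_layers * n_kv_heads * head_dim * bytes_per_value
    return per_token * seq_len


for prompt_len in (1024, 4096, 16384):
    gib = kv_cache_bytes(prompt_len) / 2**30
    print(f"{prompt_len:>6} tokens -> {gib:.1f} GiB")
```

Under these assumptions each token costs about half a mebibyte of cache, so quadrupling the prompt quadruples the memory.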

This creates two major bottlenecks. First, the time-to-first-token (TTFT), or the delay before the model starts generating a response, increases quadratically with the prompt length. Second, the time-to-iterative-token (TTIT), the time to generate each subsequent token, grows linearly with the prompt length. As a result, the model’s overall throughput degrades with larger contexts, limiting its use in applications like web-scale search that demand high speed. The paper’s authors stress that “developing novel model architectures that optimize memory usage and inference latency is crucial for enhancing the practicality of contextual learning in these applications.”
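
A toy cost model illustrates the two growth rates. It counts only attention score computations under causal masking and ignores everything else a real model does, so treat it as an illustration of the scaling argument, not a latency predictor. Both function names are hypothetical.

```python
def prefill_attention_pairs(prompt_len):
    """Query-key pairs scored while processing the whole prompt (causal attention)."""
    # Token i attends to itself and the i - 1 tokens before it.
    return prompt_len * (prompt_len + 1) // 2


def decode_step_reads(prompt_len):
    """Cached key-value entries read to produce one new token after the prompt."""
    return prompt_len


for n in (1000, 2000, 4000):
    print(n, prefill_attention_pairs(n), decode_step_reads(n))
```

Doubling the prompt roughly quadruples the prefill work that determines TTFT, while the per-token decode work that determines TTIT only doubles.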

The researchers argue that standard approaches overlook the unique structure of RAG contexts. They identify three key inefficiencies. First, RAG contexts often contain sparse or redundant information, yet computation is allocated for every token. Second, the retrieval process already generates useful encodings and relevance scores for the context chunks, but this information is discarded during the LLM’s decoding phase. Finally, retrieved documents are often unrelated to one another, leading to sparse attention patterns that standard models don't exploit efficiently.

How REFRAG works

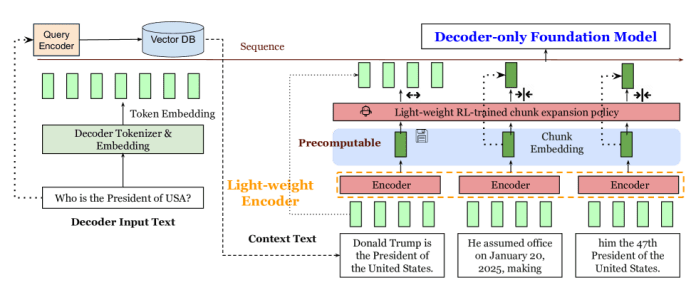

The paper proposes REFRAG (REpresentation For RAG), a novel mechanism for efficient decoding that reduces latency and memory usage without modifying the LLM's architecture. Instead of feeding the raw tokens from retrieved passages into the model, REFRAG uses pre-computed, compressed summaries called "chunk embeddings" as approximate representations.

The architecture consists of the main decoder-only foundation model (like LLaMA) and a lightweight encoder model. When a query is made, the retrieved context is broken into fixed-size chunks. The lightweight encoder processes these chunks in parallel to create a compact embedding for each one. These embeddings are then projected into the main model’s token space and fed into the decoder along with the original query to generate an answer.
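
The data flow described above can be sketched in plain Python. Everything here is a stand-in: the random matrices play the role of trained weights, mean pooling replaces the real lightweight encoder, the sizes are arbitrary, and placing the query before the chunk embeddings is an assumption. The point is only to show how chunks become single positions in the decoder input.

```python
import random

random.seed(0)
VOCAB, ENC_DIM, DEC_DIM, CHUNK = 100, 8, 12, 16  # hypothetical sizes


def rand_matrix(rows, cols):
    return [[random.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]


enc_table = rand_matrix(VOCAB, ENC_DIM)  # stand-in for the lightweight encoder
proj = rand_matrix(ENC_DIM, DEC_DIM)     # stand-in for the projection layer
dec_table = rand_matrix(VOCAB, DEC_DIM)  # stand-in for the decoder's token embeddings


def split_into_chunks(ids, size):
    return [ids[i:i + size] for i in range(0, len(ids), size)]


def encode_chunk(ids):
    # Mean-pool token vectors into one chunk embedding (toy encoder).
    return [sum(enc_table[t][d] for t in ids) / len(ids) for d in range(ENC_DIM)]


def project(vec):
    # Map an encoder-space vector into the decoder's embedding space.
    return [sum(vec[i] * proj[i][j] for i in range(ENC_DIM)) for j in range(DEC_DIM)]


def build_decoder_input(query_ids, context_ids):
    chunk_vectors = [project(encode_chunk(c)) for c in split_into_chunks(context_ids, CHUNK)]
    query_vectors = [dec_table[t] for t in query_ids]
    return query_vectors + chunk_vectors


query_ids = [random.randrange(VOCAB) for _ in range(20)]
context_ids = [random.randrange(VOCAB) for _ in range(512)]
decoder_input = build_decoder_input(query_ids, context_ids)
print(len(decoder_input), len(decoder_input[0]))  # 52 positions, each of width 12
```

The 512 context tokens collapse to 32 chunk positions, so the decoder sees 52 positions instead of 532.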

Since the retrieved context is usually the largest part of the input, this compression significantly shortens the sequence length fed to the decoder. This design offers three main advantages: it improves token allocation efficiency, enables the reuse of pre-computed embeddings to eliminate redundant computation, and reduces attention complexity by having the model operate on a small number of chunks instead of thousands of tokens.
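
A quick calculation shows how much shorter the decoder's input becomes. The token counts and the chunk size of 16 are illustrative assumptions, and `decoder_input_len` is a hypothetical helper, not something from the paper.

```python
import math


def decoder_input_len(context_tokens, query_tokens, chunk_size):
    """Positions the decoder sees when every context chunk becomes one embedding."""
    return query_tokens + math.ceil(context_tokens / chunk_size)


raw = 4096 + 128                              # uncompressed context plus query
compressed = decoder_input_len(4096, 128, 16)
print(raw, compressed)                        # 4224 384
print(raw / compressed)                       # 11.0
```

In this example the sequence is 11 times shorter. Because attention cost grows faster than linearly with length, the saving in prefill computation is larger than the saving in length, although measured speedups depend on the model and hardware.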

REFRAG can also perform "compression anywhere," meaning it can handle a mix of compressed chunks and full-text tokens at any position in the prompt. This capability is enhanced by a lightweight reinforcement learning (RL) policy that learns to selectively determine which chunks are critical enough to be passed as full text and which can be safely compressed.
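
The sketch below illustrates only the outcome of selective compression, not how the paper's policy is trained. It swaps the RL policy for a simple rule that expands the highest-scoring chunks. The scores, the expansion fraction, and both function names are made up for illustration.

```python
def plan_compression(scores, expand_fraction):
    """Mark the top-scoring chunks for full-text expansion; compress the rest."""
    n_expand = int(len(scores) * expand_fraction)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    expand = set(ranked[:n_expand])
    # Chunks keep their original order, so expanded and compressed chunks interleave.
    return ["expand" if i in expand else "compress" for i in range(len(scores))]


def decoder_positions(plan, chunk_size):
    """An expanded chunk costs chunk_size positions; a compressed chunk costs one."""
    return sum(chunk_size if step == "expand" else 1 for step in plan)


scores = [0.1, 0.9, 0.3, 0.7, 0.2, 0.05, 0.4, 0.6]  # hypothetical importance scores
plan = plan_compression(scores, expand_fraction=0.25)
print(plan)
print(decoder_positions(plan, chunk_size=16))  # 38
```

With two of eight chunks expanded, the decoder sees 38 positions, compared with 128 for fully uncompressed text and 8 for fully compressed text.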

REFRAG in action

The researchers validated REFRAG on diverse long-context tasks, including RAG, multi-turn conversations, and document summarization. They trained their models on the SlimPajama dataset and evaluated them against baselines like LLaMA-2 and CEPE, a state-of-the-art model for long-context efficiency. The results show that REFRAG models, with compression rates of 8x (REFRAG8) and 16x (REFRAG16), outperform the other baselines across nearly all settings while achieving lower latency than CEPE. They also maintain superior performance on contexts far longer than what they were trained on.

In RAG experiments, REFRAG’s compression allowed it to process more context information, leading to better performance. Surprisingly, REFRAG16 and REFRAG32 outperformed a fine-tuned LLaMA model despite processing 2-4 times fewer tokens in the decoder, which translates to lower latency. In scenarios with a less accurate retriever, REFRAG's ability to process a larger context allowed it to recover more useful information even when many of the retrieved passages were irrelevant, and to outperform the baseline LLaMA model.

In multi-turn conversations, where context windows can quickly become exhausted, REFRAG maintained robust performance even with a large number of conversational turns and retrieved passages. Its compression approach allowed it to retain more of the conversational history without requiring modifications to the base model's positional encoding.

The researchers conclude, “Our results highlight the importance of specialized treatment for RAG-based systems and open new directions for efficient large-context LLM inference. We believe that REFRAG provides a practical and scalable solution for deploying LLMs in latency-sensitive, knowledge-intensive applications.”

With LLMs supporting longer context windows and gaining more capacity to process information at inference time, optimizing the documents you feed to a model becomes crucial. This is where REFRAG can make a huge difference, providing a much better user experience without sacrificing the quality of the responses.

Meta’s new AI lab aims for the elusive dream of superintelligence. But it is refreshing to see that while they aim for the stars, they also remain grounded in solving very practical problems, which can end up playing an important role in future breakthroughs.