Self-Taught Evaluator automates LLM-as-a-Judge training

Researchers at Meta have released Self-Taught Evaluator, a technique that enables LLMs to automatically label their training data.

Researchers at Meta FAIR have introduced Self-Taught Evaluator, a technique that trains LLM evaluators without the need for human annotations.

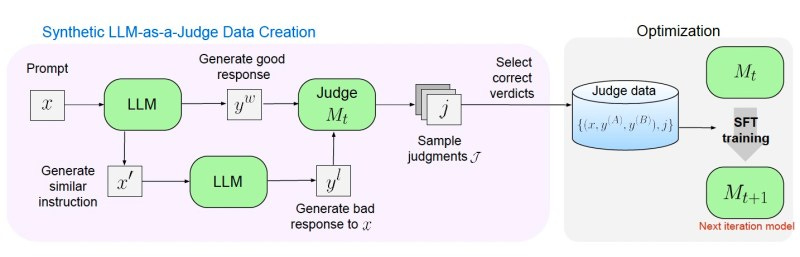

Self-Taught Evaluator is built on top of the LLM-as-a-Judge concept, where the model is provided with an input, two possible answers, and an evaluation prompt. The model must determine which response is better by generating a reasoning chain that reaches the correct result. The problem with this approach is that it usually requires a lot of human-annotated examples.
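As a rough illustration of the LLM-as-a-Judge setup, the sketch below builds an evaluation prompt from an input and two candidate answers, then extracts the verdict from the model's reasoning chain. The template wording, the `Verdict: A/B` convention, and the function names are assumptions made for this example, not the prompt used by the researchers.

```python
import re
from typing import Optional

# Hypothetical evaluation prompt; the wording is an assumption for illustration.
JUDGE_TEMPLATE = """You are comparing two responses to the same instruction.

[Instruction]
{instruction}

[Response A]
{response_a}

[Response B]
{response_b}

Explain your reasoning step by step, then finish with a line of the form
"Verdict: A" or "Verdict: B"."""


def build_judge_prompt(instruction: str, response_a: str, response_b: str) -> str:
    """Fill the template with the input and the two candidate answers."""
    return JUDGE_TEMPLATE.format(
        instruction=instruction, response_a=response_a, response_b=response_b
    )


def parse_verdict(judgment: str) -> Optional[str]:
    """Return "A" or "B" from the last verdict line, or None if none is found."""
    matches = re.findall(r"Verdict:\s*([AB])\b", judgment)
    return matches[-1] if matches else None
```

The prompt string would be sent to whichever model acts as the judge, and `parse_verdict` would be applied to the text it returns. Taking the last match lets the model mention both options while reasoning before it commits to a final answer.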

To address this bottleneck, Self-Taught Evaluator starts with a seed LLM and a large collection of unlabeled human-written instructions. It chooses a subset of the data that is diverse and representative of the problem. It then generates right and wrong answers for each example.

Next, it uses an LLM-as-a-Judge prompt to generate reasoning chains for each instruction and its pair of answers. Only the examples whose reasoning chains reach the correct result are kept. These are used to train the model, which then serves as the seed for the next iteration of the cycle.
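The cycle described above can be sketched roughly as follows. This is a minimal outline under stated assumptions, not the researchers' implementation: the `judge` and `fine_tune` callables stand in for real model calls, and `samples_per_pair`, the alternating answer order, and the stopping rule are choices made for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Pair:
    instruction: str
    better: str  # the answer generated to be correct
    worse: str   # the answer generated to be wrong


@dataclass
class TrainingExample:
    instruction: str
    response_a: str
    response_b: str
    reasoning: str
    verdict: str  # "A" or "B"


# Hypothetical interfaces: judge(instruction, a, b) -> (reasoning, verdict or None)
Judge = Callable[[str, str, str], Tuple[str, Optional[str]]]
FineTune = Callable[[Judge, List[TrainingExample]], Judge]


def collect_valid_judgments(
    pairs: List[Pair], judge: Judge, samples_per_pair: int = 4
) -> List[TrainingExample]:
    """Keep one reasoning chain per pair whose verdict picks the better answer."""
    kept: List[TrainingExample] = []
    for i, pair in enumerate(pairs):
        # Assumption: alternate the answer order so the label is not always "A".
        better_first = i % 2 == 0
        a, b = (pair.better, pair.worse) if better_first else (pair.worse, pair.better)
        expected = "A" if better_first else "B"
        for _ in range(samples_per_pair):
            reasoning, verdict = judge(pair.instruction, a, b)
            if verdict == expected:
                kept.append(TrainingExample(pair.instruction, a, b, reasoning, verdict))
                break  # one valid chain per pair is enough in this sketch
    return kept


def self_taught_loop(
    seed_judge: Judge, pairs: List[Pair], fine_tune: FineTune, iterations: int = 3
) -> Judge:
    """Judge, filter, train, then reuse the trained model as the next seed."""
    judge = seed_judge
    for _ in range(iterations):
        examples = collect_valid_judgments(pairs, judge)
        if not examples:
            break  # nothing valid to train on
        judge = fine_tune(judge, examples)
    return judge
```

Passing the models in as plain callables keeps the sketch independent of any particular inference or training library. In practice `judge` would wrap a prompt and a verdict parser, and `fine_tune` would run a training job and return a wrapper around the updated model.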

Their experiments with the Llama-3-70B model show that an evaluator trained with Self-Taught Evaluator is competitive with evaluators trained on manually labeled datasets. The technique is promising for companies that want to train evaluators for their own proprietary data, but it has some caveats, including the need for a well-aligned seed model (the researchers used the Mixtral-8x22B mixture-of-experts model).

It is also worth noting that closed loops that rely on LLMs to rank their own outputs can fall into shortcuts that optimize for benchmarks but don’t work well on real-world data. With the right human oversight, however, Self-Taught Evaluator can be a powerful tool for practical applications.

Read more about Self-Taught Evaluator on VentureBeat.

Read the paper on arXiv.

Read more about LLM-as-a-Judge in this excellent post by Cameron R. Wolfe, Ph.D.