Separating deductive and inductive reasoning in LLMs

A study by UCLA and Amazon shows that LLMs are good at inductive reasoning but sloppy at deductive reasoning.

Plenty of studies and demonstrations show LLMs performing complex reasoning tasks. However, there are also many examples of LLMs failing at very simple ones, and there is still a lot of confusion about what the models are actually doing when they solve reasoning problems.

While we still have a lot to learn about what is happening under the hood, a new study by researchers at UCLA and Amazon does a good job of separating the deductive and inductive reasoning abilities of LLMs.

Deductive reasoning is when you are given a set of rules or formulas and apply them to new inputs. Inductive reasoning, on the other hand, happens when you take a set of observations and try to infer the underlying rule that maps the inputs to the outputs. Consider converting temperatures from Celsius to Fahrenheit. Deductive reasoning is when you're given the formula (F = C × 9/5 + 32) and apply it to new Celsius readings. Inductive reasoning is when you're given a set of observations and try to infer the underlying conversion formula.
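To make the distinction concrete, here is a minimal Python sketch of both modes on the temperature example. The deductive half simply applies the given formula. The inductive half assumes, as a simplification of our own, that the hidden rule is linear and recovers its slope and intercept from a handful of observations with ordinary least squares. An LLM doing induction is not restricted to a linear hypothesis, and the function names here are hypothetical.

```python
from typing import List, Tuple


def c_to_f(c: float) -> float:
    # Deduction: the rule F = C * 9/5 + 32 is given; we only apply it.
    return c * 9 / 5 + 32


def infer_linear_rule(pairs: List[Tuple[float, float]]) -> Tuple[float, float]:
    # Induction (simplified): assume F = a*C + b and recover a and b from
    # observations with closed-form one-variable least squares.
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var = sum((x - mean_x) ** 2 for x, _ in pairs)
    a = cov / var
    b = mean_y - a * mean_x
    return a, b


observations = [(0, 32.0), (100, 212.0), (37, 98.6), (-40, -40.0)]
a, b = infer_linear_rule(observations)
print(round(a, 4), round(b, 4))  # 1.8 32.0
print(c_to_f(25))                # 77.0
```

The deductive function needs no data at all, while the inductive one is only as good as its observations and the hypothesis class it assumes.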

To separate inductive from deductive reasoning, the researchers developed a series of tests that range from pure deductive reasoning to pure inductive reasoning. At one end of the spectrum, the model is given a mapping function and told to apply it to new inputs without any solved examples (pure deductive reasoning). At the other end, the model is given a series of solved examples and instructed to infer the underlying formula (pure inductive reasoning).

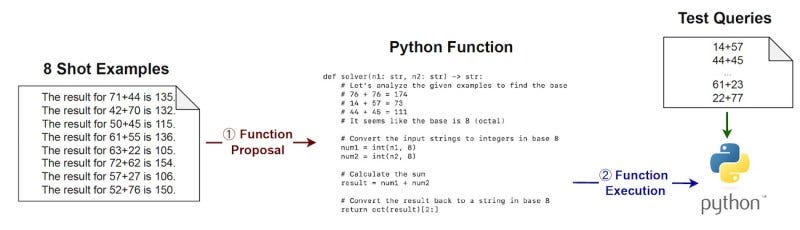
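The ends of that spectrum differ mainly in what the prompt contains. The sketch below assembles prompts for both ends and for a mixed setting in between. The template wording and the `build_prompt` helper are hypothetical illustrations, not the prompts used in the paper.

```python
from typing import List, Optional, Tuple


def build_prompt(rule: Optional[str],
                 examples: List[Tuple[str, str]],
                 query: Optional[str]) -> str:
    """Assemble a task prompt (hypothetical template).

    rule + query, no examples  -> pure deduction: apply the stated rule
    rule + examples + query    -> mixed: rule plus solved examples
    examples only, no query    -> pure induction: ask for the rule itself
    """
    parts = []
    if rule is not None:
        parts.append(f"Rule: {rule}")
    for x, y in examples:
        parts.append(f"Input: {x} -> Output: {y}")
    if query is not None:
        # The model must produce the output for a new input.
        parts.append(f"Input: {query} -> Output:")
    else:
        # No query: the model must state the mapping it inferred.
        parts.append("State the rule that maps inputs to outputs.")
    return "\n".join(parts)


solved = [("0", "32"), ("100", "212")]
deductive = build_prompt("F = C * 9/5 + 32", [], "25")
mixed = build_prompt("F = C * 9/5 + 32", solved, "25")
inductive = build_prompt(None, solved, None)
print(deductive)
print("---")
print(inductive)
```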

To make sure the model is really doing induction, the researchers developed SolverLearner, a framework that uses a two-step process to infer the mapping function from a series of observations. First, the model proposes the function as Python code. Second, an external code interpreter executes that code on new inputs, so the model itself never has to apply the rule.
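The key design choice is that the model only proposes the rule and never applies it. A minimal sketch of the execution step follows, assuming the model's answer is Python source that defines a single function named `f`. The `PROPOSED_CODE` string is a hand-written stand-in for a model response, and `run_proposed_function` is a hypothetical helper, not SolverLearner's actual code.

```python
from typing import Callable, List, Tuple

# Hand-written stand-in for the Python function an LLM might propose
# after seeing Celsius -> Fahrenheit examples (hypothetical).
PROPOSED_CODE = """
def f(c):
    return c * 9 / 5 + 32
"""


def run_proposed_function(source: str,
                          test_cases: List[Tuple[float, float]],
                          tol: float = 1e-6) -> float:
    """Execute model-proposed code and score it on held-out cases.

    The interpreter, not the model, applies the rule, so the score
    reflects only the quality of the induced function.
    """
    namespace: dict = {}
    # WARNING: exec runs arbitrary code; sandbox real model output.
    exec(source, namespace)
    f: Callable[[float], float] = namespace["f"]
    correct = sum(1 for x, y in test_cases if abs(f(x) - y) < tol)
    return correct / len(test_cases)


held_out = [(25, 77.0), (-10, 14.0), (50, 122.0)]
print(run_proposed_function(PROPOSED_CODE, held_out))  # 1.0
```

A wrong hypothesis, such as one that forgets the 9/5 factor, simply scores lower; the model gets no chance to patch its answer while applying it.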

The researchers tested GPT-3.5 and GPT-4 on a series of tasks, including syntactic reasoning, arithmetic, and spatial reasoning. Their findings show that the models perform poorly at deductive reasoning, especially on counterfactual tasks that deviate from the patterns in their training data (e.g., arithmetic in base 9 or base 11 instead of the familiar base 10). In contrast, they achieve near-perfect performance on inductive reasoning with SolverLearner.
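Counterfactual arithmetic is a useful stress test because the same digit strings produce different answers depending on the base. The snippet below is an illustration of our own, not a task taken from the paper: it adds `26` and `15` in bases 9, 10 and 11, using Python's built-in `int(s, base)` to parse the operands and a small helper, `to_base`, to render the result.

```python
DIGITS = "0123456789abcdefghijklmnopqrstuvwxyz"


def to_base(n: int, base: int) -> str:
    """Render a non-negative integer as a string in the given base (2-36)."""
    if n == 0:
        return "0"
    out = []
    while n > 0:
        n, r = divmod(n, base)
        out.append(DIGITS[r])
    return "".join(reversed(out))


def add_in_base(a: str, b: str, base: int) -> str:
    """Add two numbers written in `base` and return the sum in that base."""
    return to_base(int(a, base) + int(b, base), base)


for base in (9, 10, 11):
    print(base, add_in_base("26", "15", base))
# 9 42
# 10 41
# 11 40
```

A model leaning on base-10 habits could be tempted to answer 41 whatever the base, which is why such tasks are good at exposing slips in applying a stated rule.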

This gives a clearer picture of how far we can trust LLMs on reasoning tasks, especially in counterfactual settings.

Read more about the study on VentureBeat.

Read the paper on arXiv.

That's actually intriguing, because inductive reasoning is what creativity (including scientific discovery) relies on. Deduction, by comparison, is less tricky and less challenging. Just let a 1980s-style production system in Prolog handle deduction and you'll be fine.

This makes sense given how chatbots are trained. They need to recognize when to use tools rather than generate tokens.