Settling the LLM training vs inference debate

It depends on how difficult the problem is.

With LLMs being considered quasi-general problem solvers, there is an ongoing debate over how far you can push a model's abilities by spending more compute at inference time, through prompting and sampling techniques, as opposed to pre-training a larger model.

A new study by researchers at UC Berkeley and DeepMind explores the tradeoff between training and inference compute in depth, and the results are interesting.

One of the most common ways to improve an LLM's responses is "best-of-N" sampling, where you sample N responses from the model and use a verifier to choose the best one.
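To make the baseline concrete, here is a minimal Python sketch of best-of-N. It assumes hypothetical `generate` and `verify` callables standing in for the LLM and the verifier (in practice the verifier would typically be a trained reward model). The toy functions at the bottom are illustrative stand-ins, not part of the study.

```python
import random
from typing import Callable, List


def best_of_n(
    generate: Callable[[str], str],
    verify: Callable[[str, str], float],
    prompt: str,
    n: int,
) -> str:
    """Sample n independent responses and return the one the verifier scores highest."""
    # The samples do not depend on each other, so in practice they can run in parallel.
    candidates: List[str] = [generate(prompt) for _ in range(n)]
    # The verifier scores each (prompt, response) pair; higher means "more likely correct".
    return max(candidates, key=lambda response: verify(prompt, response))


# Hypothetical toy stand-ins, for illustration only.
def toy_generate(prompt: str) -> str:
    # A noisy "model" whose answers to 6 * 7 scatter around the right value.
    return str(42 + random.choice([-2, -1, 0, 0, 1, 2]))


def toy_verify(prompt: str, response: str) -> float:
    # An idealized "verifier" that prefers answers closer to 42.
    return -abs(int(response) - 42)


if __name__ == "__main__":
    random.seed(0)
    print(best_of_n(toy_generate, toy_verify, "What is 6 * 7?", n=8))
```

The quality of the final answer is capped by the verifier: best-of-N can only pick among the samples, and it picks whatever the verifier likes most.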

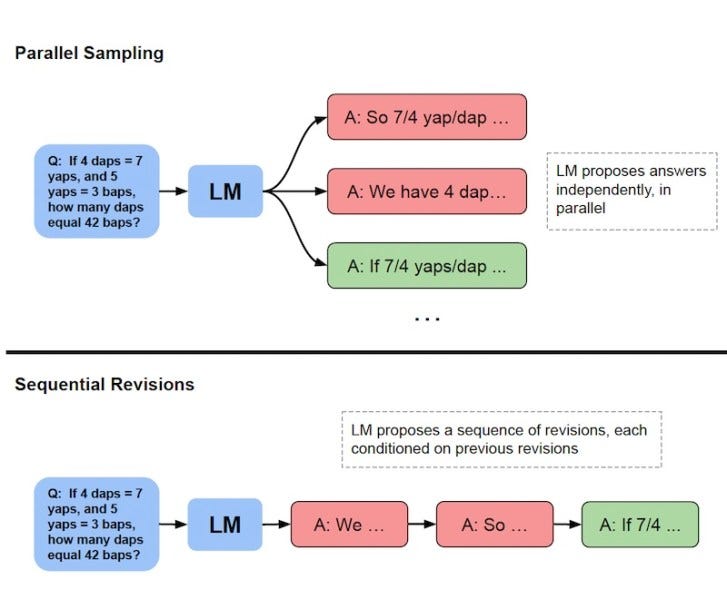

Using best-of-N as a baseline, the researchers test two types of modifications that can improve the results. The first is to change the sampling mechanism. For example, instead of generating N responses in parallel, you can have the model revise and correct its response in N sequential steps.
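The difference between parallel and sequential sampling fits in a few lines. This sketch assumes a hypothetical `revise` callable that receives the prompt plus every earlier attempt; the toy reviser just nudges a numeric guess about halfway toward 42 each step, whereas a real model would be prompted with its previous answers.

```python
from typing import Callable, List


def sequential_revisions(
    revise: Callable[[str, List[str]], str],
    prompt: str,
    n: int,
) -> List[str]:
    """Generate n attempts in sequence, each conditioned on all earlier attempts."""
    attempts: List[str] = []
    for _ in range(n):
        # Unlike best-of-N, each call sees the previous answers and can try to fix them.
        attempts.append(revise(prompt, list(attempts)))
    return attempts


# Hypothetical toy reviser: starts at 50, then moves about halfway toward 42
# each step (integer floor division, so it converges exactly on 42).
def toy_revise(prompt: str, previous: List[str]) -> str:
    if not previous:
        return "50"
    last = int(previous[-1])
    return str(last + (42 - last) // 2)


if __name__ == "__main__":
    print(sequential_revisions(toy_revise, "What is 6 * 7?", n=5))
```

Later revisions are not guaranteed to be better, so a verifier can still be used to pick the final answer from the returned chain.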

The second is to change the verification mechanism that chooses the best response. The researchers examined several verifier-guided search methods, including simple best-of-N selection, beam search, and lookahead search.
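Beam search is the easiest of these to sketch. The version below assumes a verifier that can score partial solutions step by step; `propose` (candidate next steps) and `score` (the step-level verifier) are hypothetical callables, and the toy problem is purely illustrative.

```python
from typing import Callable, List, Tuple

Steps = Tuple[str, ...]


def verifier_beam_search(
    propose: Callable[[Steps], List[str]],
    score: Callable[[Steps], float],
    beam_width: int,
    max_steps: int,
) -> Steps:
    """Build a solution step by step, keeping the beam_width best partial solutions."""
    beam: List[Steps] = [()]
    for _ in range(max_steps):
        # Extend every partial solution in the beam with each proposed next step.
        expanded = [prefix + (step,) for prefix in beam for step in propose(prefix)]
        if not expanded:
            break
        # The step-level verifier scores partial solutions; prune to the top beam_width.
        expanded.sort(key=score, reverse=True)
        beam = expanded[:beam_width]
    return max(beam, key=score)


# Hypothetical toy problem: pick 3 numbers from {1, 3, 5} that sum to 9.
def toy_propose(prefix: Steps) -> List[str]:
    return ["1", "3", "5"]


def toy_score(prefix: Steps) -> float:
    # Reward partial solutions that stay "on track" (an average of 3 per step).
    return -abs(3 * len(prefix) - sum(int(s) for s in prefix))


if __name__ == "__main__":
    print(verifier_beam_search(toy_propose, toy_score, beam_width=2, max_steps=3))
```

Roughly speaking, lookahead search goes one step further: before scoring a candidate step, it rolls the solution forward a few steps and scores where that rollout ends up, which spends more compute per step.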

Their main finding is that the best sampling and verification strategy depends on the difficulty of the input question. If you train a model to determine the difficulty of the question, you can choose the optimal strategy to solve it, given a fixed inference budget.
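One plausible way to estimate difficulty, in the general spirit of the study, is to sample several answers per question, average how well they score (correctness if ground truth is available, otherwise verifier scores), and rank questions into equal-sized bins. The function name, the five-bin default, and the [0, 1] score scale are assumptions for this sketch.

```python
from statistics import mean
from typing import List, Sequence


def estimate_difficulty(
    sample_scores: Sequence[Sequence[float]], num_bins: int = 5
) -> List[int]:
    """Bin questions into difficulty levels by how well their sampled answers score.

    sample_scores[i] holds scores in [0, 1] for answers sampled on question i:
    correctness checks when ground truth is available, or verifier scores when not.
    Bin 0 holds the easiest questions, bin num_bins - 1 the hardest.
    """
    avg = [mean(scores) for scores in sample_scores]
    # Rank questions from highest average score (easiest) to lowest (hardest).
    order = sorted(range(len(avg)), key=lambda i: avg[i], reverse=True)
    bins = [0] * len(avg)
    for rank, question in enumerate(order):
        # Equal-sized quantile bins over the ranking.
        bins[question] = rank * num_bins // len(avg)
    return bins


if __name__ == "__main__":
    scores = [[1, 1], [0, 0], [0.5, 0.5], [1, 0.4], [0.2, 0.4]]
    print(estimate_difficulty(scores))
```

Using verifier scores instead of ground truth is what makes this usable at inference time, when the correct answer is unknown.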

For example, easy questions can be solved through best-of-N sampling because the model is more likely to get the answer right in a single shot. For more difficult questions, you will need to choose sequential sampling along with a more complex verification method. The researchers call this a "test-time compute-optimal scaling strategy."
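A compute-optimal strategy can be pictured as a lookup from difficulty bin to a search method and a split of the fixed budget between parallel samples and sequential revisions. Everything in this sketch is hypothetical: the `Strategy` fields, the placeholder table values (which loosely follow the paragraph above), and the log-scale split. In practice such choices would be tuned per difficulty bin on held-out questions.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Strategy:
    search: str              # hypothetical label: "best_of_n" or "beam_search"
    sequential_share: float  # 0.0 = all parallel samples, 1.0 = one long revision chain


# Hypothetical policy table (difficulty bin -> strategy). The values are placeholders;
# in practice they would be tuned per bin on held-out questions.
POLICY: Dict[int, Strategy] = {
    0: Strategy("best_of_n", 0.0),
    1: Strategy("best_of_n", 0.25),
    2: Strategy("beam_search", 0.5),
    3: Strategy("beam_search", 0.75),
    4: Strategy("beam_search", 0.75),
}


def allocate(budget: int, difficulty: int) -> Tuple[str, int, int]:
    """Split a fixed budget of generations into parallel chains x revision steps."""
    strategy = POLICY[difficulty]
    # Apply the share on a log scale so that chains * steps stays close to the budget.
    steps = max(1, round(budget ** strategy.sequential_share))
    chains = max(1, budget // steps)
    return strategy.search, chains, steps


if __name__ == "__main__":
    for level in sorted(POLICY):
        print(level, allocate(16, level))
```

The key property is that every difficulty level spends roughly the same budget; only how it is spent changes.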

They find that the right test-time compute-optimal scaling strategy can make inference compute more than 4x more efficient than naive best-of-N sampling, reaching comparable accuracy with a fraction of the compute.

They also compared spending extra compute at inference against pre-training larger LLMs. In a FLOPs-matched comparison, a small LLM can match the quality of an LLM that is 14x larger on easy and medium questions if it is given room for more sampling and verification. On difficult questions, however, you still need larger LLMs that can learn to solve the problem.
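A back-of-the-envelope sketch shows what "FLOPs-matched" can mean. It assumes the common approximations of about 6·N·D FLOPs to pretrain an N-parameter model on D tokens and about 2·N FLOPs per generated token at inference, plus the simplifying assumption that both models see the same pretraining data. If the larger model has M times more parameters, equating total FLOPs gives the small model k = M + 3(M − 1)·D_pretrain / D_inference times as many inference tokens. The function name is hypothetical.

```python
def matched_inference_multiplier(m: float, d_pretrain: float, d_inference: float) -> float:
    """Factor by which a small model can scale up inference tokens at equal total FLOPs.

    Assumptions (for illustration): pretraining costs ~6 * N * D_pretrain FLOPs,
    inference costs ~2 * N FLOPs per token, the larger model has m * N parameters,
    and both models are pretrained on the same D_pretrain tokens.
    """
    # Solve 6*N*Dp + 2*N*k*Di = m * (6*N*Dp + 2*N*Di) for k.
    return m + 3.0 * (m - 1.0) * d_pretrain / d_inference


if __name__ == "__main__":
    # Compare against a 14x larger model for different inference/pretraining token ratios.
    for ratio in (0.1, 1.0, 10.0):
        k = matched_inference_multiplier(14, d_pretrain=1.0, d_inference=ratio)
        print(f"D_inference / D_pretrain = {ratio}: small model gets {k:.1f}x the inference tokens")
```

For M = 14 the multiplier is 404 when inference tokens are a tenth of pretraining tokens, 53 when they are equal, and 17.9 when inference tokens are ten times larger. A big multiplier only pays off if the extra samples actually improve answers, which is the catch on difficult questions.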

There is still room to improve the technique and to explore more combinations of sampling and verification. But it is a promising research direction with direct implications for LLM applications in different settings, including models that run entirely on user devices.

The research “hints at a future where fewer FLOPs are spent during pretraining and more FLOPs are spent at inference,” according to the researchers.

Read more about the study on VentureBeat

Read the paper on arXiv