Stanford's LLM tool-use framework outperforms AutoGen and LangChain

OctoTools uses a modular framework to enable general-purpose LLMs to use external tools efficiently.

Researchers at Stanford University have released OctoTools, a new open-source agentic platform that lowers the technical barriers to tool use in large language models (LLMs).

Tool use is one way to boost the ability of LLMs to handle reasoning tasks. Tools can include calculators, code interpreters, web search, image processors, and more. With access to tools, LLMs can focus on the high-level plan for a solution and delegate individual steps to the tools.
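Here is a minimal Python sketch of that division of labor. The plan is hardcoded where a real system would ask the LLM for it, and `calculator`, `TOOLS`, and `plan` are hypothetical names, not OctoTools APIs. The calculator walks the parsed expression tree instead of calling `eval`, so it accepts only arithmetic.

```python
import ast
import operator

# Hypothetical calculator tool: evaluates +, -, *, /, ** and unary minus only.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}

def calculator(expression: str) -> float:
    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")
    return _eval(ast.parse(expression, mode="eval").body)

# Hypothetical tool registry: tool name -> callable.
TOOLS = {"calculator": calculator}

# Stand-in for the LLM's high-level plan: each step names a tool and its input.
plan = [
    ("calculator", "(3 + 4) * 6"),
    ("calculator", "2 ** 10"),
]

# The "model" only decides which tool handles each step; the tool does the work.
results = [TOOLS[tool](arg) for tool, arg in plan]
print(results)  # [42, 1024]
```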

However, tool use is challenging. It usually requires substantial training or heavy prompt engineering, and once LLMs are optimized for a specific set of tools, it becomes difficult to extend them to other domains. They can also get confused when they have to orchestrate multiple tools to solve a problem.

OctoTools is a training-free agentic framework that can orchestrate multiple tools without fine-tuning or reconfiguring the underlying models. Its modular design turns any general-purpose LLM into a tool-using model.

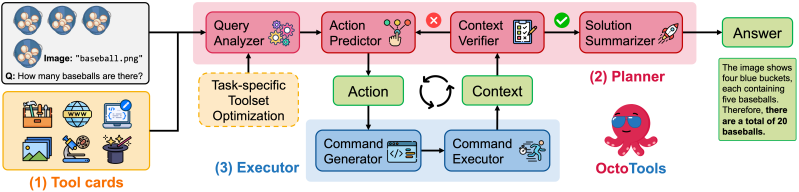

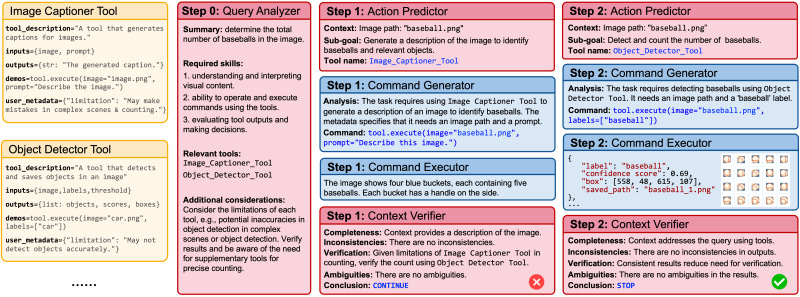

OctoTools describes each tool with a “tool card” that documents its input and output formats, limitations, and best use cases. A “planner” module processes the incoming prompt and uses the backbone LLM to generate a high-level plan. Besides the step-by-step solution, the planner identifies the skills and tools required to accomplish the task. For each step in the plan, an “action predictor” module refines the sub-goal, specifies the tool required to achieve it, and makes sure the step is executable and verifiable.
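A tool card is essentially structured metadata about a tool. The Python sketch below assumes a card with fields for input and output types, limitations, and best uses. The field names, the two example tools, and `predict_action` are hypothetical and do not reproduce OctoTools' actual schema. In OctoTools the LLM chooses the tool; here a simple keyword-overlap score stands in for that call so the example runs offline.

```python
from dataclasses import dataclass, field

@dataclass
class ToolCard:
    """Hypothetical tool card: metadata the planner reads, not the tool's code."""
    name: str
    description: str
    input_types: dict[str, str]
    output_type: str
    limitations: list[str] = field(default_factory=list)
    best_for: list[str] = field(default_factory=list)

@dataclass
class Action:
    """One refined plan step: the sub-goal plus the single tool chosen for it."""
    sub_goal: str
    tool_name: str

# Two illustrative cards; names and fields are assumptions, not OctoTools tools.
CARDS = [
    ToolCard(
        name="calculator",
        description="Evaluates arithmetic expressions.",
        input_types={"expression": "str"},
        output_type="float",
        limitations=["No symbolic algebra"],
        best_for=["arithmetic", "compute", "sum"],
    ),
    ToolCard(
        name="web_search",
        description="Returns text snippets for a query.",
        input_types={"query": "str"},
        output_type="list[str]",
        limitations=["Results may be stale"],
        best_for=["search", "lookup", "news"],
    ),
]

def predict_action(sub_goal: str, cards: list[ToolCard]) -> Action:
    # Stand-in for the LLM-based action predictor: pick the card whose
    # best_for keywords overlap most with the words of the sub-goal.
    words = set(sub_goal.lower().split())
    best = max(cards, key=lambda card: len(words & set(card.best_for)))
    return Action(sub_goal=sub_goal, tool_name=best.name)

print(predict_action("compute the sum of the invoice lines", CARDS).tool_name)  # calculator
```

Keeping the card separate from the tool's implementation is what lets a planner reason about a tool it has never been trained on: only the metadata goes into the prompt.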

A “command generator” module translates the text-based plan into Python code that invokes the specified tool for each sub-goal. The “command executor” module then runs each command in a Python environment and passes the result to a “context verifier” module for validation. Finally, a “solution summarizer” consolidates the verified results into the final answer.
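The execute, verify, and summarize loop can be sketched the same way. This sketch assumes, hypothetically, that each generated command stores its output in a variable named `result` and can read the previous verified result as `previous`. The tool, the verification rule, and all function names are illustrative stand-ins for LLM-driven modules, not OctoTools code.

```python
import math

# Hypothetical tool that generated commands may call.
def sqrt_tool(x: float) -> float:
    return math.sqrt(x)

TOOLS = {"sqrt_tool": sqrt_tool}

def execute_command(command: str, namespace: dict) -> object:
    """Run one generated command; by our convention it stores its output in `result`.
    Emptying __builtins__ is NOT a real sandbox; untrusted code needs isolation."""
    exec(command, {"__builtins__": {}}, namespace)
    return namespace["result"]

def verify(result: object) -> bool:
    # Stand-in for the LLM-based context verifier: accept finite numbers only.
    return isinstance(result, (int, float)) and math.isfinite(result)

def run_pipeline(commands: list[str], tools: dict) -> list[tuple[str, object]]:
    memory: list[tuple[str, object]] = []
    for command in commands:
        # Expose the tools plus the previous verified result to each command.
        namespace = dict(tools, previous=memory[-1][1] if memory else None)
        result = execute_command(command, namespace)
        if not verify(result):
            break  # a real system would re-plan here instead of stopping
        memory.append((command, result))
    return memory

def summarize(memory: list[tuple[str, object]]) -> str:
    # Stand-in for the solution summarizer: report the last verified result.
    if not memory:
        return "No verified result."
    return f"Answer after {len(memory)} verified step(s): {memory[-1][1]}"

# Stand-ins for the command generator's output, one command per sub-goal.
COMMANDS = [
    "result = sqrt_tool(144)",
    "result = previous * 2",
]

print(summarize(run_pipeline(COMMANDS, TOOLS)))
```

Running it prints `Answer after 2 verified step(s): 24.0`. Emptying `__builtins__` only limits accidental misuse and is not a security boundary, so executing untrusted generated code calls for a real sandbox such as a separate process or container.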

OctoTools also uses an optimization algorithm to select the best subset of tools for each task. This helps avoid overwhelming the model with irrelevant tools.
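The article does not detail the optimization algorithm. One plausible greedy scheme, shown here as an assumption rather than OctoTools' exact method, scores a base toolset on a validation set, then keeps each candidate tool whose addition on its own improves that score. `select_toolset`, the tool names, and the toy evaluator's per-tool effects are invented for illustration and are not measured results.

```python
from typing import Callable, Iterable

def select_toolset(
    base: set[str],
    candidates: Iterable[str],
    evaluate: Callable[[frozenset[str]], float],
) -> set[str]:
    """Greedy sketch: keep each candidate tool that, added alone to the base
    toolset, scores higher on validation than the base toolset does."""
    baseline = evaluate(frozenset(base))
    selected = set(base)
    for tool in candidates:
        if evaluate(frozenset(base | {tool})) > baseline:
            selected.add(tool)
    return selected

# Toy evaluator standing in for "run the agent on a validation split".
# The per-tool effects are invented for illustration only.
TOY_EFFECT = {"calculator": 0.05, "web_search": 0.02, "image_captioner": -0.03}

def toy_evaluate(toolset: frozenset[str]) -> float:
    return 0.60 + sum(TOY_EFFECT.get(tool, 0.0) for tool in toolset)

print(sorted(select_toolset({"generalist"}, TOY_EFFECT, toy_evaluate)))
# ['calculator', 'generalist', 'web_search']
```

With these toy effects the sketch keeps `calculator` and `web_search` and drops `image_captioner`, whose addition lowers the score. Testing each candidate independently keeps the cost linear in the number of tools, at the price of ignoring interactions between tools.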

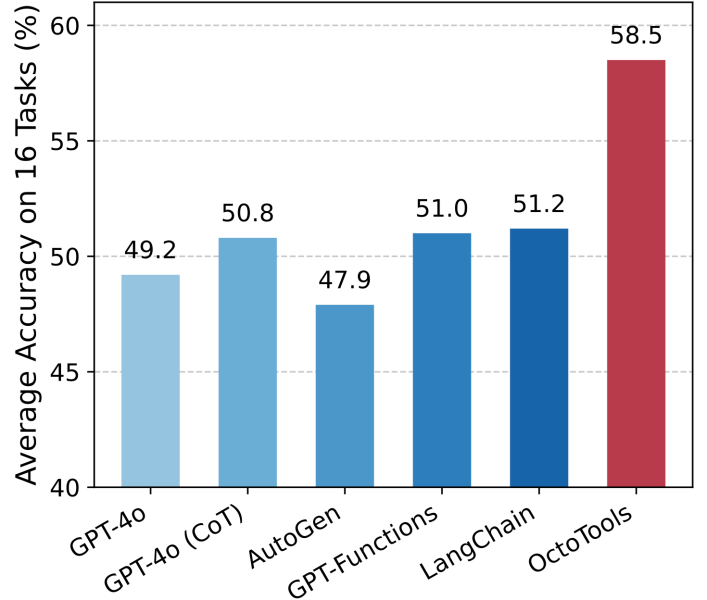

OctoTools achieved an average accuracy gain of 10.6% over AutoGen, 7.5% over GPT-Functions, and 7.3% over LangChain when using the same toolset, across benchmarks covering visual, mathematical, and scientific reasoning as well as agentic tasks and medical knowledge. According to the researchers, OctoTools performs better because it distributes tool usage more effectively and properly decomposes each query into sub-goals.

Frameworks like OctoTools can be an important part of building agentic AI systems that rely on orchestrating LLMs with external tools. The code for OctoTools is available on GitHub. There is also an online demo where you can try different prompts and see the trace of how the model reasons and uses different tools to generate the answer.