Testing long-context LLMs beyond retrieval tasks

Frontier models can retrieve information from millions of tokens, but can they reason over content structure?

LLMs with very long context windows have proven adept at retrieving individual pieces of information from among millions of tokens.

But retrieval doesn’t necessarily reflect a model’s capacity for reasoning over the entire context. A model might be able to find a specific fact without understanding the relationships between different parts of the text. In fact, a new study by researchers at DeepMind shows that frontier models struggle with reasoning tasks that require understanding the structure of long-context data.

Unfortunately, current long-context benchmarks mostly measure retrieval. Most are variations of the “needle in a haystack” test, which checks a model’s ability to find a specific piece of information in a very long document or set of documents. Reasoning benchmarks, on the other hand, are mostly designed for short contexts. Extending them to long contexts requires extensive manual labor and is prone to data leakage.
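
To make the retrieval setup concrete, a needle-in-a-haystack test can be sketched roughly as follows. This is a minimal illustration and not code from any particular benchmark: the function names, the filler sentences, and the passcode "needle" are all hypothetical.

```python
import random

def build_haystack(needle: str, filler: list[str], total_sentences: int,
                   depth: float, seed: int = 0) -> str:
    """Hide `needle` at a relative depth (0.0 = start, 1.0 = end) in filler text."""
    rng = random.Random(seed)
    sentences = [rng.choice(filler) for _ in range(total_sentences)]
    sentences.insert(int(depth * total_sentences), needle)
    return " ".join(sentences)

def is_retrieved(model_answer: str, expected: str) -> bool:
    """Crude scoring: the answer counts as correct if it contains the expected string."""
    return expected.lower() in model_answer.lower()

# Hypothetical filler and needle, chosen only for illustration.
FILLER = [
    "The weather was mild that afternoon.",
    "Traffic moved slowly along the river road.",
    "The library closed early on Sundays.",
]
NEEDLE = "The secret passcode is 7421."

prompt = build_haystack(NEEDLE, FILLER, total_sentences=1000, depth=0.5)
question = "What is the secret passcode?"
```

Answering this question only requires locating one sentence. Nothing in the filler text has to be understood or related to anything else, which is why success here says little about reasoning over the full context.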

To overcome this shortcoming, the DeepMind researchers have introduced Michelangelo, a “minimal, synthetic, and unleaked long-context reasoning evaluation for large language models.”

Michelangelo takes its name from a saying attributed to the famous Renaissance sculptor: “The sculpture is already complete within the marble block, before I start my work. It is already there, I just have to chisel away the superfluous material.”

Accordingly, the Michelangelo benchmark evaluates a model's ability to understand the relationships and structure of the information within its context window, rather than simply retrieving isolated facts (sculptures from marble rather than needles from haystacks).

The tasks in Michelangelo are based on Latent Structure Queries (LSQ), a novel framework for designing long-context reasoning evaluations that can be extended to arbitrary lengths. LSQ tasks can test a model's understanding of implicit information as opposed to retrieving simple facts.

LSQ has been designed to avoid flaws that would let a model take a shortcut to the answer of a reasoning task. It also uses synthetic data in a way that allows task complexity and context length to be controlled independently.

The researchers propose three tasks for Michelangelo based on the LSQ framework:

Latent list: The model is given a Python list and a long sequence of operations, some of which are redundant or irrelevant. It must be able to determine the final state of the list.

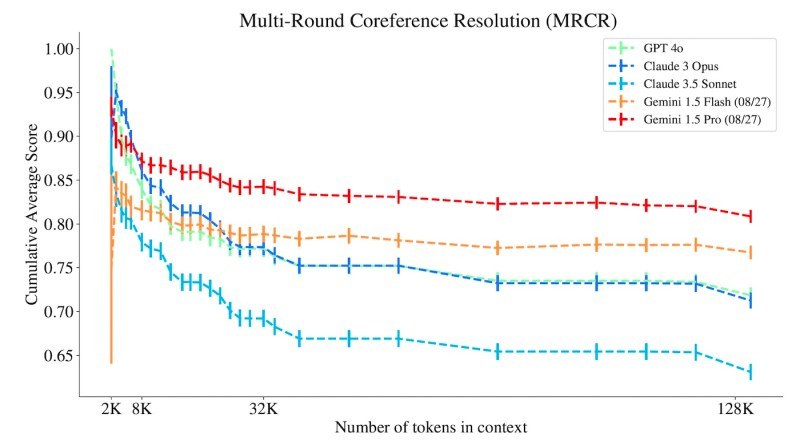

Multi-round co-reference resolution (MRCR): The model is given a long conversation between a user and an LLM. It must reproduce parts of the conversation and resolve references to previous turns, even when the conversation contains confusing or distracting elements.

“I don’t know” (IDK): The model is given a long story and several multiple-choice questions. The model must be able to recognize the limits of its knowledge and respond with “I don’t know” whenever it faces a question for which there is no answer in the context.
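
As a rough illustration of the first task, a latent-list-style example could be generated along these lines. This is a sketch under stated assumptions and not the benchmark's actual generator: the function name `make_latent_list_task`, the choice of operations, and the starting list are all hypothetical. The two parameters are meant to show how the number of state-changing operations (task complexity) and the number of irrelevant lines (context length) can be varied separately.

```python
import random

def make_latent_list_task(num_relevant: int, num_irrelevant: int, seed: int = 0):
    """Return (program_text, final_list) for a synthetic list-tracking task.

    num_relevant   -- operations that actually change the list (complexity)
    num_irrelevant -- lines that read the list without changing it (length)
    """
    rng = random.Random(seed)
    state = [1, 2, 3]              # hypothetical starting list
    lines = ["a = [1, 2, 3]"]

    # Interleave relevant and irrelevant operations in random order.
    kinds = ["relevant"] * num_relevant + ["irrelevant"] * num_irrelevant
    rng.shuffle(kinds)

    for kind in kinds:
        if kind == "relevant":
            op = rng.choice(["append", "pop", "reverse"])
            if op == "append":
                value = rng.randint(0, 9)
                state.append(value)
                lines.append(f"a.append({value})")
            elif op == "pop" and state:
                state.pop()
                lines.append("a.pop()")
            else:
                # Also used as a fallback when pop is drawn on an empty list.
                state.reverse()
                lines.append("a.reverse()")
        else:
            # These lines mention `a` but never mutate it.
            lines.append(rng.choice(["len(a)", "a.count(0)", "sorted(a)", "b = list(a)"]))

    return "\n".join(lines), state

program, final_state = make_latent_list_task(num_relevant=4, num_irrelevant=12, seed=42)
question = "What is the value of a after these operations?"
```

The ground truth (`final_state`) is tracked while the program text is built, so examples of any length can be scored automatically. Raising `num_irrelevant` lengthens the context without making the underlying reasoning harder, while raising `num_relevant` does the opposite.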

The researchers evaluated different variants of Gemini, GPT-4, and Claude on Michelangelo. All models exhibited a significant drop in performance as the complexity of the reasoning tasks increased. This shows that current LLMs have much room to improve in their ability to reason over large amounts of in-context information.

Michelangelo can become an important benchmark for evaluating the real capabilities of future generations of LLMs, especially as context windows continue to grow to millions of tokens, creating the possibility for new applications.

Read my interview with the coauthor of the Michelangelo paper on VentureBeat

Read the paper on arXiv
