The AI race is still wide open: Lessons from Dia 1.6B

The Dia 1.6B text-to-speech model shows what small teams can do, proving that creativity can still challenge established AI giants.

Dia 1.6B, an open-source text-to-speech model by Nari Labs, has taken the internet by storm in the past week. It runs on consumer-grade hardware with 10 GB of VRAM and generates natural dialogues that rival the quality of leading proprietary AI products such as ElevenLabs and NotebookLM.

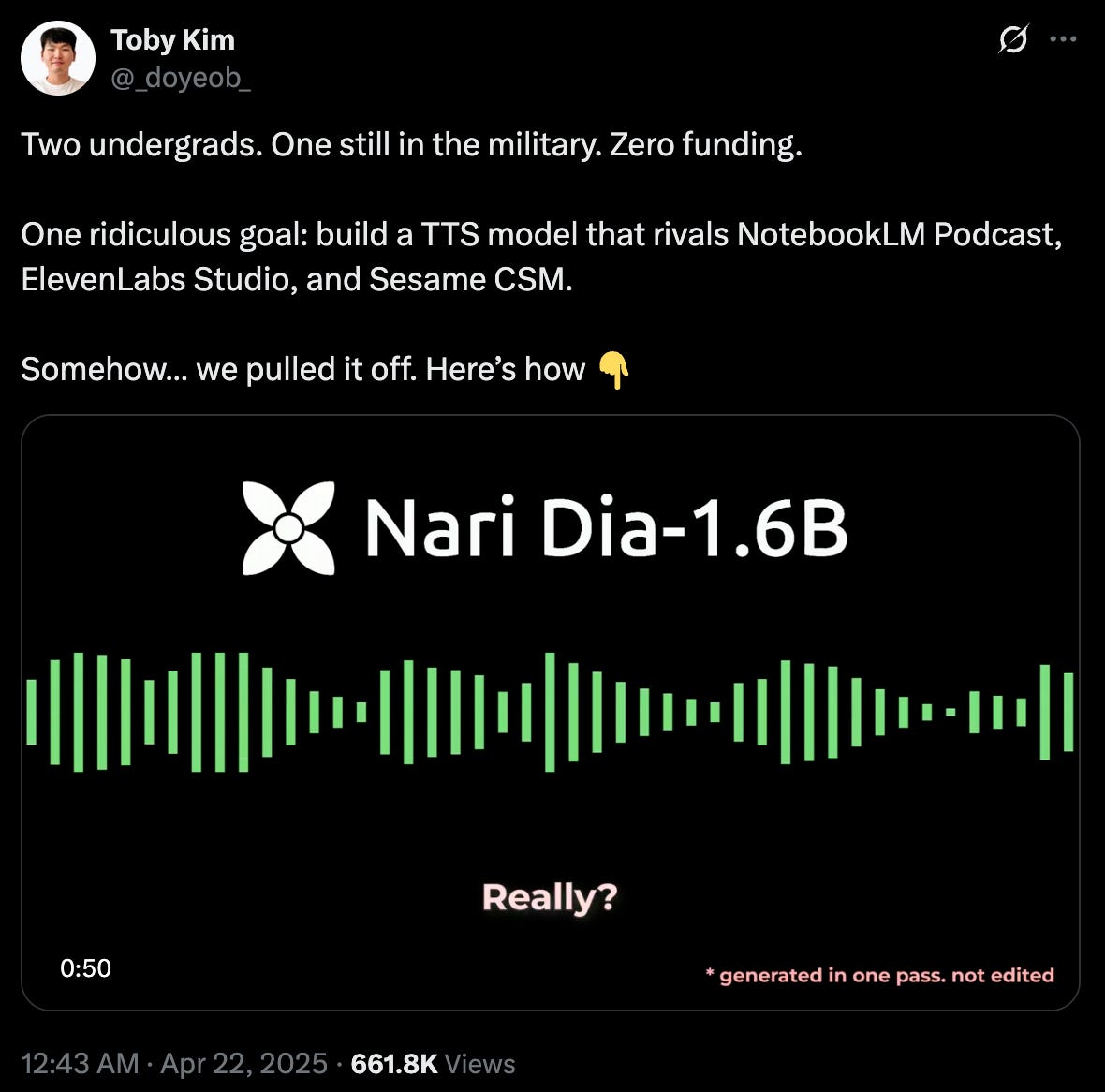

Nari Labs is run by two undergraduates with no venture funding. Dia 1.6B is currently trending on Hugging Face, ahead of models built by companies that spend millions of dollars on research and training.

It is a reminder of the exciting times we’re in. There is no clear winner in the AI race, AI moats are constantly being upended, and small players still have a real chance to take the lead, create new markets, or conquer existing ones.

What is Dia 1.6B?

Dia 1.6B is based on the transformer architecture. One of its standout features is the ability to generate an entire dialogue in a single pass. Traditional text-to-speech (TTS) models produce speech for each speaker separately and then stitch the outputs together; Dia instead creates a seamless, coherent conversation in one go.

This makes Dia well suited to applications that need fluid multi-speaker interaction, such as virtual assistants or interactive storytelling systems. The model also supports audio conditioning: users can influence the emotion and tone of the output by providing an audio prompt. It is also capable of zero-shot voice cloning, replicating a speaker’s voice from a brief audio sample without additional training. Finally, it can produce non-verbal sounds such as laughter, coughing, or throat clearing, a layer of realism rarely seen in TTS systems.
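Single-pass generation changes what the model receives as input: one script for the whole conversation instead of one request per line. The sketch below is a minimal illustration of that difference, not Nari Labs’ code, and it only prepares text without calling any model. It assumes the conventions described in Dia’s public README, where speaker turns are tagged `[S1]`/`[S2]` and non-verbal sounds are written in parentheses such as `(laughs)`. The names `Turn`, `build_single_pass_script`, and `split_for_stitching`, along with the cue list, are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical subset of non-verbal cue names; the model's real list may differ.
NONVERBAL_CUES = {"laughs", "coughs", "clears throat", "sighs"}


@dataclass
class Turn:
    speaker: int               # 1 or 2, rendered as [S1] / [S2] (assumed tag format)
    text: str
    cue: Optional[str] = None  # optional non-verbal sound, rendered as "(cue)"


def render_turn(turn: Turn) -> str:
    """Render one turn as '[S<n>] text (cue)', validating speaker and cue."""
    if turn.speaker not in (1, 2):
        raise ValueError(f"unsupported speaker: {turn.speaker}")
    line = f"[S{turn.speaker}] {turn.text.strip()}"
    if turn.cue is not None:
        if turn.cue not in NONVERBAL_CUES:
            raise ValueError(f"unknown non-verbal cue: {turn.cue}")
        line += f" ({turn.cue})"
    return line


def build_single_pass_script(turns: List[Turn]) -> str:
    """Single-pass style: the whole conversation becomes ONE input string."""
    return " ".join(render_turn(t) for t in turns)


def split_for_stitching(turns: List[Turn]) -> List[Tuple[int, str]]:
    """Stitching style: one (speaker, text) request per turn; each would be
    synthesized separately and the audio clips concatenated afterwards."""
    return [(t.speaker, t.text.strip()) for t in turns]


if __name__ == "__main__":
    conversation = [
        Turn(1, "Did you hear about Dia?"),
        Turn(2, "The model built by two undergrads?", cue="laughs"),
    ]
    print(build_single_pass_script(conversation))
    print(split_for_stitching(conversation))
```

In a stitched pipeline, each tuple becomes a separate synthesis call, so no single call ever sees the neighbouring turns. The single-pass script hands the model every turn, and the non-verbal cues, in one input.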

Released under the Apache 2.0 license, Dia is fully open-source and can be used in commercial applications.

Architecture and data

The team has not yet released a paper or technical report on how they designed Dia 1.6B, although the model’s open weights make its architecture visible. In a Hacker News thread, the founders of Nari Labs said, “We started this project after falling in love with NotebookLM’s podcast feature. But over time, the voices and content started to feel repetitive. We tried to replicate the podcast-feel with APIs but it did not sound like human conversations.”

They trained the model in around three months, with no prior experience with speech models and having “to learn everything from scratch — from large-scale training, to audio tokenization.”

They also added that their work was inspired by SoundStorm and Parakeet.

Parakeet is an open-source speech recognition and transcription model developed by Nvidia and Suno.ai. SoundStorm is an audio generation model developed by Google.

In a lengthy X thread, the user DFG has unpacked what is publicly known about the architecture of Dia 1.6B.

However, we still don’t know much about the data it was trained on. What we do know is that the team was not VC-funded; its only support was compute granted by the Google TPU Research Cloud program and Hugging Face.

Lots of low-hanging fruit

A notable aspect of Dia 1.6B is that it was built on top of existing open-source models and research. While commercial AI labs keep their research and models closed to protect their market advantage, there is still a lot of untapped potential in combining existing techniques and models in the right way and making small tweaks that make them more efficient.

We saw this play out with DeepSeek-R1, and we’re seeing it again with Dia 1.6B. Big AI labs will continue to battle it out by raising massive funding rounds, building huge compute clusters, spending billions of dollars on training, and raising walls around their share of the market. Meanwhile, cash-strapped, nimble, and talented teams like Nari Labs will keep proving that, with enough focus, you can beat the giants at their own game.