The power of embodied chain-of-thought reasoning for robotics

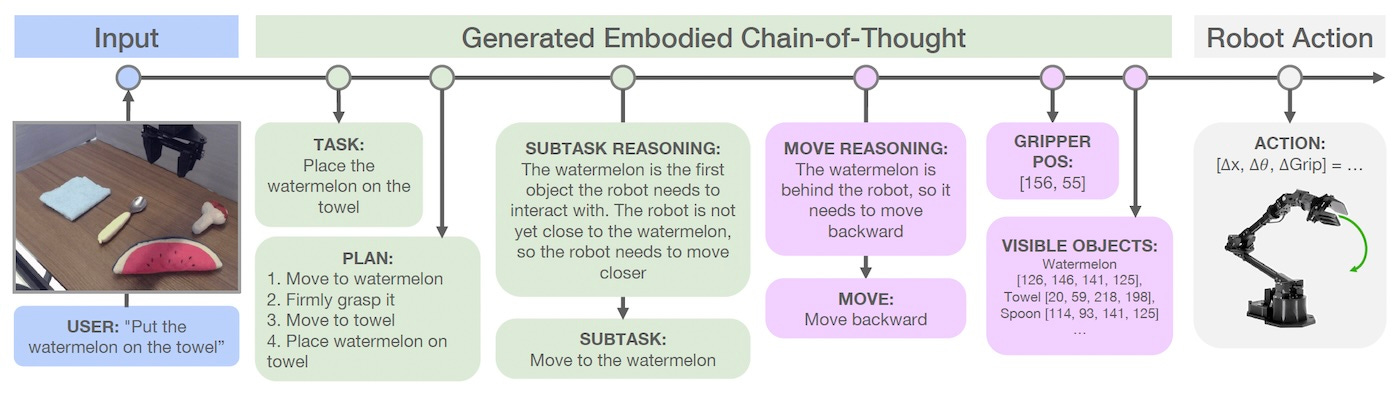

ECoT is a new technique that enables VLA models to reason about the task, the environment, and the robot's state to solve novel tasks in novel environments.

Chain-of-thought (CoT) is an effective technique that improves the performance of LLMs on reasoning tasks. But bringing CoT to robots that are powered by LLMs and VLMs presents unique challenges.

Embodied CoT (ECoT), a new technique developed by researchers at University of California, Berkeley, Stanford University, and University of Warsaw, addresses these challenges.

ECoT is designed for vision-language-action (VLA) models, foundation models that have been designed specifically for robotics tasks. VLAs are usually VLMs that have been modified and trained to directly map language instructions and visual input to robotic actions.

In LLMs, CoT works by adding intermediate steps that lead from the input prompt to the solution, sometimes referred to as “step-by-step thinking.” Adding CoT capabilities to VLAs poses several challenges.

In addition to reasoning about the logic of solving the task, VLAs must also take into account the environment and the robot’s own state.

“Put simply, we need VLAs to not only ‘think carefully’, but also ‘look carefully,’” the researchers write.

ECoT solves this challenge by combining semantic reasoning about tasks and sub-tasks with “embodied” reasoning about the environment and the robot’s state. This includes predicting object bounding boxes, understanding spatial relationships and reasoning about how the robot’s available actions, also called “primitives,” can help achieve the goal.
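To make the mix of semantic and embodied reasoning concrete, here is a minimal sketch of how one reasoning step could be represented and serialized as text ahead of the action. The class name, field names, and tag labels are hypothetical and chosen for illustration; they are not taken from the researchers' code.

```python
from dataclasses import dataclass, field


@dataclass
class EmbodiedReasoning:
    """One hypothetical reasoning step; all field names are illustrative."""
    task: str            # language instruction given to the robot
    plan: list[str]      # high-level sub-tasks (semantic reasoning)
    subtask: str         # sub-task currently being executed
    primitive: str       # low-level movement primitive, e.g. "move left"
    # object name -> (x_min, y_min, x_max, y_max) in pixels (embodied reasoning)
    boxes: dict[str, tuple[int, int, int, int]] = field(default_factory=dict)


def render(step: EmbodiedReasoning) -> str:
    """Serialize the reasoning as text that would precede the action output."""
    lines = [
        f"TASK: {step.task}",
        "PLAN: " + "; ".join(step.plan),
        f"SUBTASK: {step.subtask}",
        f"PRIMITIVE: {step.primitive}",
    ]
    # Sort by object name so the output is deterministic.
    for name, (x0, y0, x1, y1) in sorted(step.boxes.items()):
        lines.append(f"OBJECT: {name} [{x0}, {y0}, {x1}, {y1}]")
    return "\n".join(lines)


example = EmbodiedReasoning(
    task="put the carrot in the bowl",
    plan=["grasp the carrot", "move above the bowl", "release the carrot"],
    subtask="grasp the carrot",
    primitive="move left",
    boxes={"carrot": (40, 80, 90, 120), "bowl": (150, 60, 230, 140)},
)
print(render(example))
```

In a setup like this, the model would be trained to emit the rendered text first and the action afterwards, so the action is conditioned on both the plan and what the robot currently sees.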

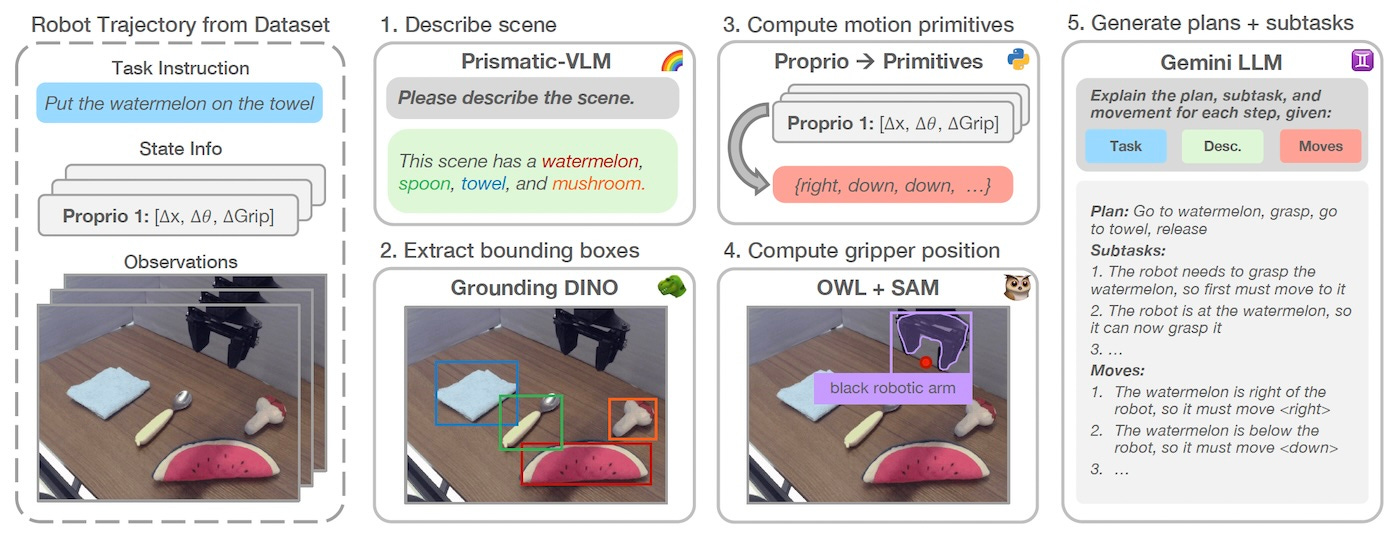

Another challenge is creating the training data for ECoT. The researchers addressed it with an automated pipeline that augments existing robot training datasets with ECoT annotations. The pipeline uses VLMs and frontier models such as Gemini to add scene descriptions and reasoning chains to robotic trajectories. They used the pipeline to generate ECoT examples from a dataset that contained tens of thousands of trajectories for the WidowX robotic arm.
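The annotation step can be sketched roughly as follows. This is a simplified illustration under stated assumptions: the trajectory layout (`instruction`, `steps`, `image`, `action`) and the two annotator callables are hypothetical stand-ins for the VLM and frontier-model calls, not the researchers' actual pipeline or any real API.

```python
from typing import Callable

# A trajectory here is a hypothetical dict with an instruction and per-step data.
Trajectory = dict


def annotate(
    trajectories: list[Trajectory],
    describe_scene: Callable[[str], str],
    write_reasoning: Callable[[str, str], str],
) -> list[Trajectory]:
    """Return copies of the trajectories with reasoning text added to each step.

    describe_scene stands in for a VLM call and write_reasoning for a
    frontier-model call; both are placeholders, not real APIs.
    """
    annotated = []
    for traj in trajectories:
        steps = []
        for step in traj["steps"]:
            scene = describe_scene(step["image"])
            reasoning = write_reasoning(traj["instruction"], scene)
            # Keep the original observation and action; only add annotations.
            steps.append({**step, "scene": scene, "reasoning": reasoning})
        annotated.append({**traj, "steps": steps})
    return annotated


# Stub annotators so the sketch runs without any model access.
def fake_describe(image: str) -> str:
    return f"scene for {image}"


def fake_reason(instruction: str, scene: str) -> str:
    return f"To '{instruction}', given {scene}, move toward the target."


data = [{"instruction": "pick up the cup",
         "steps": [{"image": "frame_0.png", "action": [0.1, 0.0, 0.0]}]}]
augmented = annotate(data, fake_describe, fake_reason)
```

The point of the design is that the recorded actions are left untouched: the existing dataset is reused as-is, and only the text annotations are new.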

For their experiments, the researchers trained the popular open-source OpenVLA model on the ECoT training examples. The resulting model outperforms both the base OpenVLA and the much larger Google RT-2-X on diverse robotics tasks in novel environments.

ECoT increased the absolute task success rate by 28% compared to the baseline OpenVLA model. Notably, these improvements were achieved without gathering additional robot training data, which can be expensive and time-consuming.

Read the paper on arXiv

Read more about ECoT on VentureBeat