What to know about custom evals for LLMs

Public benchmarks are misleading. For real-world applications, you need custom evals.

Before LLMs, creating machine learning applications required long development and training cycles. Now, thanks to LLMs, you can get a working prototype with nothing more than good prompt engineering techniques and a few API connections.

However, the benefits of LLM applications aren’t without tradeoffs. You still need a systematic approach to evaluate and improve the quality of your prompts and LLM pipelines. Without it, you’ll end up getting mixed results and making random changes without being able to measure their effect on the end result.

Currently, LLM providers measure and publicize the performance of their models through scores on public benchmarks such as MMLU, MATH, and GPQA. But most enterprises need to measure performance on the very specific tasks their applications handle.

For this, enterprises need to create custom evals for their applications. Custom evals present the model with a set of carefully crafted inputs or tasks and measure its outputs against predefined criteria or human-generated references. Unlike evals in classic ML applications, LLM evals don’t test the model per se but rather the prompt and scaffolding that are built around it.

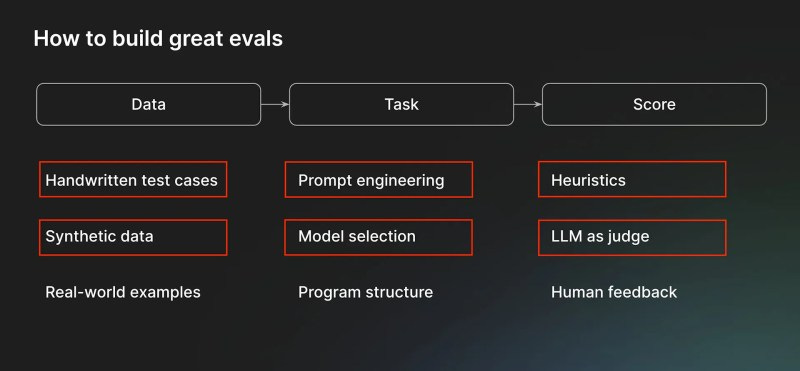

A custom eval consists of three key components: data, task, and score. The data can be handcrafted examples, synthetic data created with models or automation tools, or data collected from end users such as chat logs and tickets.

The task in enterprise applications is more than the simple question-answer sequence seen in public evals. It encompasses the entire framework in which the model or models are used. It might be composed of several steps, prompts, and models, and it might include non-LLM components and external tools.
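To make the idea of a multi-step task concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the customer-support scenario, the function names (`support_task`, `lookup_order`), and the stub models, which stand in for real LLM API calls so the example runs offline.

```python
from typing import Callable

# Hypothetical stand-in for a model call; a real app would call an LLM API here.
ModelFn = Callable[[str], str]

def lookup_order(order_id: str) -> dict:
    """Non-LLM component: a fake order database (illustrative data only)."""
    orders = {"A100": {"status": "shipped", "eta_days": 2}}
    return orders.get(order_id, {"status": "unknown", "eta_days": None})

def support_task(question: str, extract_model: ModelFn, answer_model: ModelFn) -> str:
    """The task under evaluation: two prompts plus one external tool."""
    # Step 1: one prompt extracts the order ID from the user's message.
    order_id = extract_model(f"Extract the order ID from: {question}").strip()
    # Step 2: a non-LLM tool fetches the matching record.
    record = lookup_order(order_id)
    # Step 3: a second prompt drafts the reply from the record.
    return answer_model(f"Order record: {record}. Answer the question: {question}")

# Stub models so the sketch runs without network access.
def fake_extractor(prompt: str) -> str:
    return "A100"

def fake_answerer(prompt: str) -> str:
    if "'shipped'" in prompt:
        return "Your order has shipped."
    return "I could not find that order."

reply = support_task("Where is my order A100?", fake_extractor, fake_answerer)
print(reply)
```

The eval treats `support_task` as a single unit, so a change to either prompt, either model, or the lookup tool shows up in the same score.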

The scoring function grades the results of your framework. It can be a rule-based function that checks well-defined criteria, such as whether the output is valid JSON or matches an expected label. For more complex tasks such as text generation and summarization, you can use LLM-as-a-judge methods, in which another model grades the output.
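The two kinds of scoring function might look like the sketch below. It is only an illustration under stated assumptions: the function names, the 1-to-5 rating scale, the judge prompt, and the sample outputs are all hypothetical, and `fake_judge` replaces a real judge-model call so the code runs as written.

```python
import json
from statistics import mean
from typing import Callable

def json_has_keys(output: str, required: set) -> float:
    """Rule-based scorer: 1.0 if the output is a JSON object with every required key."""
    try:
        parsed = json.loads(output)
    except json.JSONDecodeError:
        return 0.0
    if isinstance(parsed, dict) and required.issubset(parsed.keys()):
        return 1.0
    return 0.0

def judge_summary(source: str, summary: str, judge: Callable[[str], str]) -> float:
    """LLM-as-a-judge scorer: ask a judge model for a 1-5 rating, mapped to 0-1."""
    prompt = (
        "Rate how faithfully the summary reflects the source on a scale of 1 to 5. "
        "Reply with a single digit only.\n"
        f"Source: {source}\nSummary: {summary}"
    )
    reply = judge(prompt).strip()
    if reply not in {"1", "2", "3", "4", "5"}:
        return 0.0  # treat unparseable judge replies as failures
    return (int(reply) - 1) / 4

# Stub judge (assumption): a real one would send the prompt to an LLM.
def fake_judge(prompt: str) -> str:
    return "4"

outputs = ['{"name": "Ada", "email": "ada@example.com"}', "not json"]
rule_scores = [json_has_keys(o, {"name", "email"}) for o in outputs]
print(mean(rule_scores))
print(judge_summary("The meeting moved to Friday.", "Meeting is now on Friday.", fake_judge))
```

Returning a number between 0 and 1 from both scorers is a design choice that lets rule-based and judge-based scores be averaged and tracked the same way.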

Good evals are the foundation of strong and reliable LLM applications. With a good eval framework, you can easily upgrade or switch your models without fear of breaking the application.
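One way to picture a model switch guarded by an eval is the rough sketch below. The dataset, the ticket-routing scenario, and both task functions are made up for illustration; the two stubs stand in for the same pipeline running on a current and a candidate model.

```python
from statistics import mean
from typing import Callable

# Handcrafted examples (illustrative only): input text and expected label.
DATASET = [
    ("Refund my order please", "billing"),
    ("The app crashes on login", "technical"),
    ("How do I change my password?", "account"),
]

def exact_match(output: str, expected: str) -> float:
    """Rule-based score: 1.0 when the label matches exactly, else 0.0."""
    return 1.0 if output.strip().lower() == expected else 0.0

def run_eval(task: Callable[[str], str]) -> float:
    """Run the task on every example and return the average score."""
    return mean(exact_match(task(text), expected) for text, expected in DATASET)

# Stub for the pipeline on the current model (assumption, no real LLM call).
def current_task(text: str) -> str:
    t = text.lower()
    if "refund" in t:
        return "billing"
    if "crash" in t:
        return "technical"
    return "account"

# Stub for the same pipeline on a candidate model that mislabels account questions.
def candidate_task(text: str) -> str:
    return "billing" if "refund" in text.lower() else "technical"

baseline = run_eval(current_task)
candidate = run_eval(candidate_task)
safe_to_switch = candidate >= baseline
print(f"baseline={baseline:.2f} candidate={candidate:.2f} switch={safe_to_switch}")
```

Because both versions run against the same data and the same scorer, the comparison reflects the change in model rather than a change in how quality is measured.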

For more on evals, see my interview with BrainTrust CEO Ankur Goyal on VentureBeat.