Meta’s new memory layer can scale LLM knowledge without raising compute costs

Sparse lookup-style layers can help LLMs memorize more facts and knowledge.

Researchers at Meta have proposed “scalable memory layers,” a neural network layer architecture that can help LLMs store more factual knowledge without significantly increasing compute costs.

Traditional language models encode information in dense layers, where every parameter is activated during inference. As dense layers grow larger, they can learn more complex functions, but they also require more compute for inference.

In contrast, memory layers store information through sparse connections, which means very few neurons are activated at any one time during inference. This makes them cheaper to run than dense layers and well suited to simple information that can be stored in associative structures, such as lookup tables. The tradeoff is that memory layers use more memory in exchange for lower compute requirements.
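To make the lookup-table idea concrete, here is a minimal sketch of a sparse key-value memory in plain Python. It illustrates the general technique and is not Meta's implementation: the function name `memory_lookup`, the dot-product scoring, the softmax over the selected slots, and all sizes are assumptions chosen for the example.

```python
import math
import random

def memory_lookup(query, keys, values, k=2):
    """Return a weighted sum of the k values whose keys best match the query."""
    # Score every key against the query with a dot product.
    scores = [sum(q * x for q, x in zip(query, key)) for key in keys]
    # Keep only the k best-scoring slots; every other slot stays inactive.
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the selected scores only (shifted by the max for stability).
    peak = max(scores[i] for i in top)
    weights = [math.exp(scores[i] - peak) for i in top]
    total = sum(weights)
    weights = [w / total for w in weights]
    # The output mixes just k value vectors, however large the memory is.
    dim = len(values[0])
    output = [sum(w * values[i][d] for w, i in zip(weights, top)) for d in range(dim)]
    return output, top

# Hypothetical sizes, for illustration only.
rng = random.Random(0)
num_slots, key_dim, value_dim = 1000, 8, 4
keys = [[rng.gauss(0, 1) for _ in range(key_dim)] for _ in range(num_slots)]
values = [[rng.gauss(0, 1) for _ in range(value_dim)] for _ in range(num_slots)]
query = [rng.gauss(0, 1) for _ in range(key_dim)]

output, active = memory_lookup(query, keys, values, k=4)
print(len(active), "of", num_slots, "slots used")  # prints "4 of 1000 slots used"
```

Adding more slots increases the memory footprint, but the number of value vectors that contribute to each output stays fixed at `k`. Note that this naive version still scores every key, so a practical implementation would need a faster way to find the best-matching keys.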

Memory layers have existed for a long time, but in their traditional form they are poorly suited to current hardware accelerators. The Meta researchers propose several modifications that address these challenges and make it possible to use memory layers at scale:

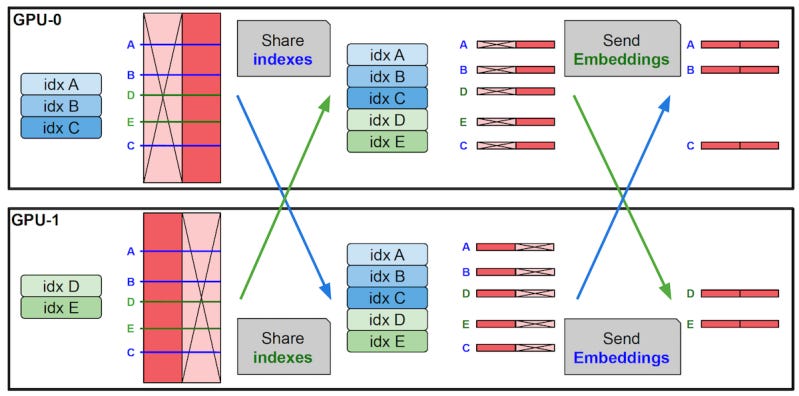

1- They parallelized memory layers across several GPUs, making it possible to store millions of key-value pairs without disrupting other layers in the model.

2- They developed a special CUDA implementation for high-memory bandwidth operations.

3- They developed a parameter-sharing mechanism that makes it possible to share a single set of memory parameters across multiple memory layers within a model.
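The parameter-sharing idea in the third item can be sketched in a few lines of Python. This is an illustrative assumption about how sharing might look, not the researchers' code: `MemoryPool`, `MemoryLayer`, and `count_unique_parameters` are hypothetical names, and the sizes are arbitrary.

```python
class MemoryPool:
    """Hypothetical container for one set of key/value parameters."""

    def __init__(self, num_slots, key_dim, value_dim):
        self.keys = [[0.0] * key_dim for _ in range(num_slots)]
        self.values = [[0.0] * value_dim for _ in range(num_slots)]

    def num_parameters(self):
        return sum(len(row) for row in self.keys) + sum(len(row) for row in self.values)


class MemoryLayer:
    """Hypothetical layer that reads from a pool it does not own."""

    def __init__(self, pool):
        self.pool = pool  # a reference to the pool, not a copy


def count_unique_parameters(layers):
    """Count parameters once per distinct pool, however many layers use it."""
    pools = {id(layer.pool): layer.pool for layer in layers}
    return sum(pool.num_parameters() for pool in pools.values())


# Three layers that share one pool versus three layers with their own pools.
shared_pool = MemoryPool(num_slots=1000, key_dim=8, value_dim=8)
shared_layers = [MemoryLayer(shared_pool) for _ in range(3)]
separate_layers = [MemoryLayer(MemoryPool(1000, 8, 8)) for _ in range(3)]

print(count_unique_parameters(shared_layers))    # prints 16000
print(count_unique_parameters(separate_layers))  # prints 48000
```

In this toy setup, sharing keeps the parameter count at that of a single memory no matter how many layers read from it, while separate memories grow linearly with the number of layers.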

Memory layers with their sparse activations are meant to complement dense networks. The dense layers perform the complex operations while memory layers store simple factual knowledge.

The researchers modified Llama models by replacing one or more dense layers with a shared memory layer. They compared the memory-enhanced models against the dense LLMs as well as mixture-of-experts (MoE) and PEER models on several tasks, including factual question answering, scientific and common-sense world knowledge, and coding.

According to their findings, memory models can compete with dense models that use 2-4x more compute and match the performance of MoE models that have the same compute budget and parameter count. Memory-enhanced models are especially good at tasks that require factual knowledge. The researchers also found that the benefits of memory models remained consistent as they scaled the models from 134 million to 8 billion parameters.

With proper hardware optimization, memory layers can become an important component of future LLMs, enabling less forgetting, fewer hallucinations, and continual learning.