The alternatives to o1 and o3

OpenAI o1 and o3 are very effective at math, coding, and reasoning tasks. But they are not the only models that can reason.

OpenAI’s o1 and the latest o3 model have generated a lot of excitement around the new inference-time scaling law. Basically, the premise is that by giving the model more time to “think,” you can improve its performance on difficult tasks that require planning and reasoning. And both models have made advances in reasoning, math, and coding benchmarks that were very difficult for large language models (LLMs).

When given a prompt, o1 and o3 use more compute cycles to generate extra tokens, generate multiple responses, review their answers, make corrections, and evaluate different solutions to come to the final answer. It has proven to be especially useful for tasks such as coding, math, and data analysis.

However, as has become the norm with OpenAI, both o1 and o3 are very secretive. They don’t reveal their reasoning chain, making it difficult for users to understand how they work. But this hasn’t stopped the AI community from trying to reverse engineer and reproduce the capabilities of these large reasoning models (LRMs). And there are a few papers and models that hint at what’s happening under the hood.

Qwen with Questions (QwQ)

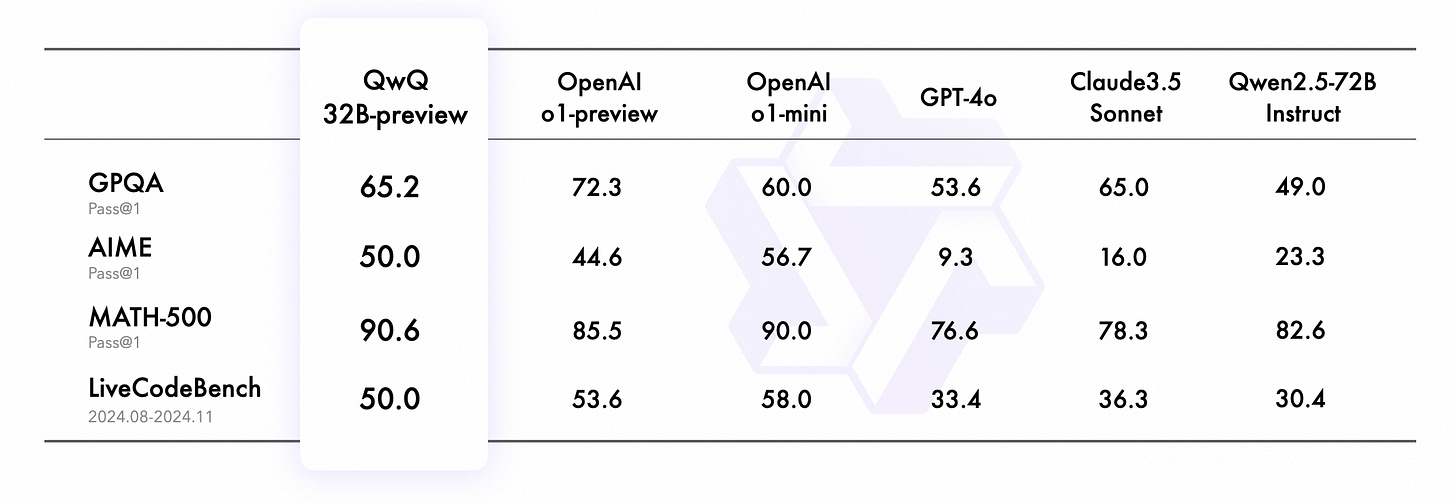

QwQ is an open model released by Alibaba. It has 32 billion parameters and a 32,000-token context window. According to Alibaba’s published tests, QwQ beats o1-preview on the AIME and MATH benchmarks. It also outperforms o1-mini on the GPQA benchmark for Google-proof scientific reasoning.

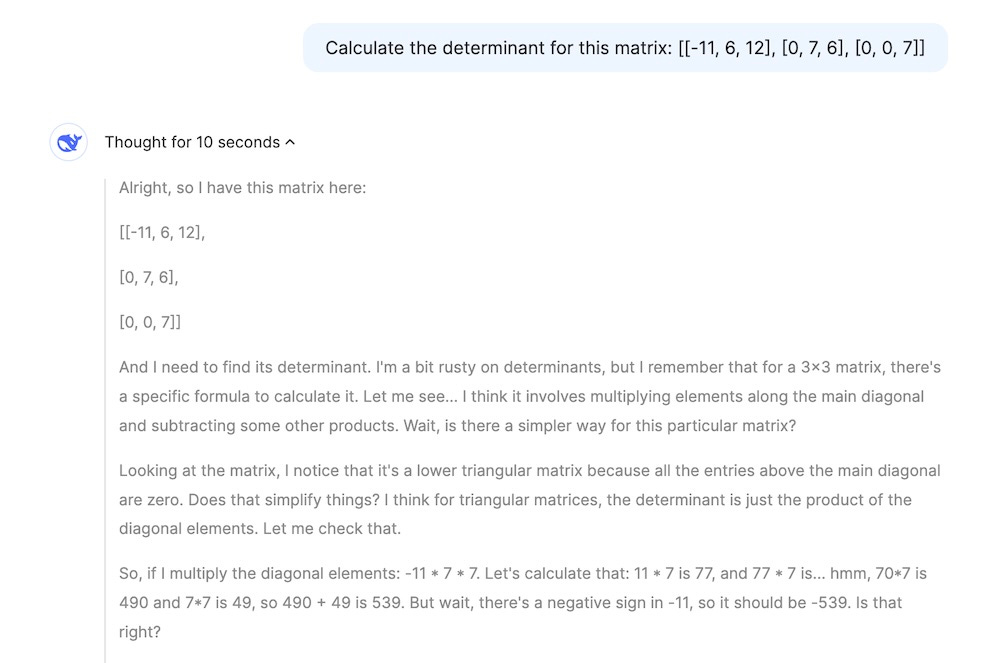

Unfortunately, the Qwen team has not released details about the process and data used to train the model. However, the model fully displays its reasoning chain, giving users better visibility into how the model processes requests and handles logical questions.

QwQ is available for download on Hugging Face and there is an online demo on Hugging Face Spaces.

Marco o1

Alibaba has released another model, Marco o1, another reasoning model that uses inference-time scaling to answer complex questions. Marco-o1 is a version of Qwen2-7B-Instruct that has been fine-tuned on the Open-O1 CoT dataset and custom chain-of-thought (CoT) and instruction-following datasets curated by the Alibaba team.

At inference time, Marco-o1 uses Monte-Carlo Tree Search (MTCS) to explore multiple reasoning paths as it generates response tokens. The model explores and evaluates the different paths based on the confidence score of the generated tokens in each tree branch. This enables the model to consider a wider range of possibilities and arrive at more informed and nuanced conclusions, especially in scenarios with open-ended solutions.

Marco-o1 also uses a reflection mechanism to review its answers and reasoning steps periodically to identify potential errors and correct course.

Like QwQ, Marco-o1 fully reveals its reasoning chain. Marco-o1 is better than classic LLMs at handling math and coding tasks. But it is especially good at handling open-ended problems that do not have a clear answer. For example, in the paper, the researchers show how the model’s reasoning capabilities enable it to improve its ability to translate colloquial terms from Chinese to English. Marco-o1 is available on Hugging Face.

DeepSeek R1-Lite-Preview

DeepSeek has released a reasoning model that rivals o1. It is not open source and is only available through its DeepSeek Chat web interface.

The model shows both its internal thoughts and a final summary of its reasoning process before displaying the answer, which is a nice advantage over o1 and o3.

According to DeepSeek, R1 outperforms o1-preview on AIME and MATH. There is little information on how the model was trained or the technique it uses to generate its reasoning tokens. However, the company has hinted at releasing open source models in the future.

More investigations on inference-time scaling

In August, DeepMind released an interesting study that explored the tradeoff between training- and test-time compute. The paper answered the question that, given a fixed compute budget, would you get better performance by spending it on training a larger model or using it at inference time to generate more tokens and revise the model’s answer (full paper here)? The study provided guidelines to dynamically allocate compute resources and get the best results.

Researchers at Hugging Face recently used this study to turbocharge small language models (SLMs) to the point that they outperformed models that were an order of magnitude larger. For example, the Llama-3.2 3B model was able to outperform the 70B version of the model on the difficult MATH benchmark.

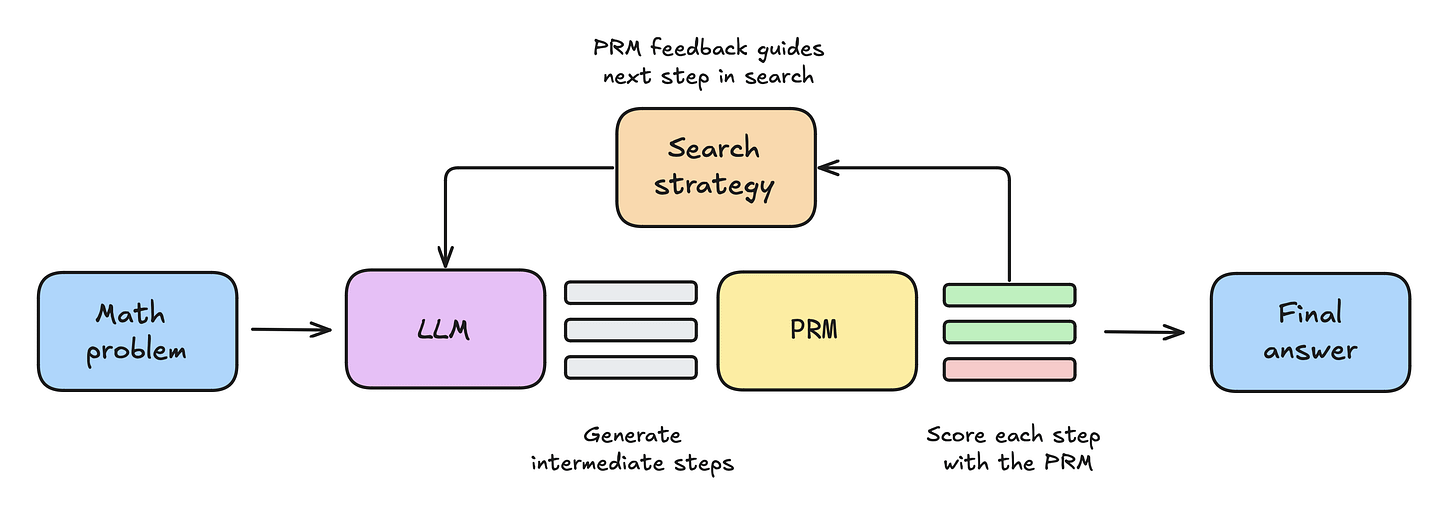

The key to its success was the clever use of inference-time compute resources. The system uses a reward model and a search algorithm to generate and revise multiple answers. For each query, the model produces several partial answers. Then a process reward model (PRM) reviews the answers and rates them based on their quality. A special tree search algorithm helps the model branch out on the promising answers and expand them into different paths. This process is repeated until the model reaches its final answer. This technique is substantially more efficient than the classic “majority vote” mechanism, in which the model generates multiple answers and chooses the one that shows up more frequently.

Does o3 make a difference?

OpenAI’s o3 is still fresh and we have only seen it through the demos and reports published by OpenAI. From what we know, it makes impressive advances on some key benchmarks, including the coveted ARC Challenge, which some consider a major stepping stone toward artificial general intelligence (AGI).

o3 proves that the test-time scaling law still has a lot of untapped potential. However, it is not clear whether it will take us to AGI. For the moment, we know that the combination of LLMs, reward models, and search algorithms (and possibly other symbolic structures) can help us solve complex problems for which the result can be clearly quantified. This is why models like o1 and o3 are very good at math and coding but inferior to GPT-4o in creative tasks.

It will be interesting to see how we can leverage these newfound abilities to solve new problems or revisit old ones.

It will be interesting to see how or if Meta’s new Coconut framework alters this even more. What do you think?