The application layer strikes back: Cursor's custom LLM

How co-designing an LLM with its environment created a specialized coding model 4x faster than its frontier-level peers.

The AI-assisted coding tool Cursor has released Composer, its first proprietary large language model (LLM), designed specifically for its integrated development environment (IDE). Composer is optimized for speed and trained for “agentic” workflows, where autonomous AI agents plan, write, and test code within a developer’s environment. The release signals a shift for a company that built its “vibe coding” platform on top of leading models from OpenAI, Anthropic, and Google. It could also have significant implications for one of the most important segments of the LLM market.

The architecture and training of Composer

Composer is not an open source model, so we don’t have exact details of its size and architecture aside from what Cursor has shared. According to the blog post that announced the model, Composer is a mixture-of-experts (MoE) language model specialized for software engineering. (MoE models are composed of several “expert” components that are specialized for different tasks. For every input, only the subset of experts relevant to that request is activated, which makes the model more resource-efficient than dense models, where all neurons are activated for every request.)

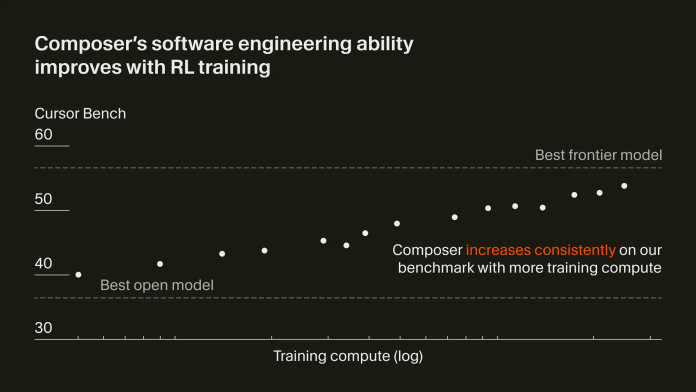
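To make the routing idea concrete, here is a minimal sketch of top-k expert selection in plain Python. It is not Composer's architecture, which Cursor has not published. The toy experts, the hard-coded gate scores, and the names `top_k_route` and `moe_forward` are all assumptions for illustration; in a real MoE layer the gate scores come from a learned router applied to each token.

```python
import math


def softmax(scores):
    """Convert raw gate scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    routes = top_k_route(gate_scores, k)
    output = sum(weight * experts[i](x) for i, weight in routes)
    active = [i for i, _ in routes]
    return output, active


# Hypothetical toy experts; real experts are feed-forward sub-networks.
experts = [
    lambda x: x + 1,
    lambda x: x * 2,
    lambda x: x - 3,
    lambda x: x ** 2,
]

# Hard-coded for illustration; a learned router would compute these per token.
gate_scores = [0.1, 2.0, -1.0, 1.5]

output, active = moe_forward(3.0, experts, gate_scores, k=2)
print(f"active experts: {active}, output: {output:.3f}")
```

Only two of the four experts run for this input, which is where the efficiency gain over a dense model comes from: compute scales with the number of active experts rather than the total.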

Composer was possibly built on top of a base open source model. It was fine-tuned through reinforcement learning (RL) within a diverse range of development environments (RL has become an important part of post-training of reasoning models such as DeepSeek-R1). During training, the model was given access to a set of production tools, including file editors, semantic search, and terminal commands (the basic set of tools software engineers use), and was tasked with solving real software engineering problems.

This training method incentivized the model to make efficient choices and avoid unnecessary responses. Over time, it developed useful emergent behaviors on its own, such as performing complex code searches, independently fixing linter errors, and writing and executing unit tests to validate its own work.

Built for speed and interaction

To measure the model’s performance, Cursor developed an internal evaluation suite called Cursor Bench. This benchmark uses real agent requests from its own engineers and measures not just the correctness of the code but also its adherence to existing software engineering practices and abstractions within a codebase.

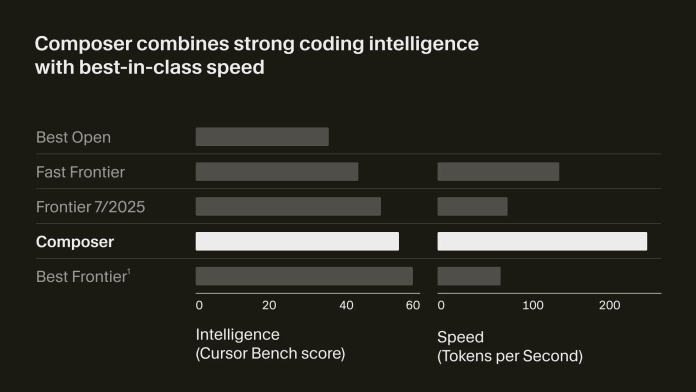

On this benchmark, Cursor reports that Composer achieves frontier-level intelligence while generating code at 250 tokens per second, approximately four times faster than models with comparable reasoning abilities. Top-tier models like GPT-5 and Claude Sonnet 4.5 still outperform Composer in raw intelligence, but Composer’s speed places it in a class of its own for interactive use.
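A quick back-of-the-envelope calculation shows what the speed difference means in wall-clock time. The 250 tokens per second and the roughly 4x ratio come from the figures above; the 2,000-token response length is an assumed example, and the sketch ignores time to first token, tool calls, and network latency.

```python
COMPOSER_TOKENS_PER_SECOND = 250.0  # figure cited in the article
SPEEDUP_OVER_PEERS = 4.0            # "approximately four times faster"

# Implied throughput of a comparable model, derived from the two figures above.
PEER_TOKENS_PER_SECOND = COMPOSER_TOKENS_PER_SECOND / SPEEDUP_OVER_PEERS


def generation_seconds(num_tokens, tokens_per_second):
    """Time to stream num_tokens at a constant decoding rate."""
    return num_tokens / tokens_per_second


response_tokens = 2000  # assumed response length, for illustration only

composer_time = generation_seconds(response_tokens, COMPOSER_TOKENS_PER_SECOND)
peer_time = generation_seconds(response_tokens, PEER_TOKENS_PER_SECOND)

print(f"Composer: {composer_time:.1f} s, comparable model: {peer_time:.1f} s")
```

Under these assumptions, the same response takes 8 seconds instead of 32, which is the difference between waiting on an agent and staying in the loop with it.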

This focus on speed is intentional. The company first explored low-latency coding with an earlier prototype model named Cheetah. The goal was to create a model fast enough to keep a developer in their creative flow. Composer is a smarter evolution of this concept, maintaining the same responsiveness while significantly improving its reasoning and problem-solving skills.

Once response speed passes a certain threshold, it unlocks new capabilities, such as a model that processes thousands of tokens in the background and gives you near-real-time feedback or suggestions on your code.

Cursor was able to develop Composer because its platform and the model were “co-designed.” According to Sasha Rush, a researcher at Cursor, the team built the model and the Cursor environment together, which allowed them to run the training agent at the scale needed for production-level tasks.

This required a significant investment in custom infrastructure. The team built its training system with PyTorch and Ray to manage asynchronous reinforcement learning across thousands of GPUs. To support the training process, Cursor adapted the virtual machine scheduler from its Background Agents feature to handle the massive scale of running hundreds of thousands of sandboxed coding environments concurrently.
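The "asynchronous" part of this setup can be illustrated with a toy sketch. It is not Cursor's training system: it uses Python's standard `asyncio` in place of Ray and PyTorch, and the worker, learner, and reward logic are hypothetical placeholders. The point it shows is that rollout workers finish episodes at different times and the learner consumes trajectories as they arrive instead of waiting for every environment to finish.

```python
import asyncio
import random


async def rollout_worker(worker_id, queue, episodes):
    """Simulate a sandboxed environment producing trajectories at its own pace."""
    for episode in range(episodes):
        await asyncio.sleep(random.uniform(0, 0.01))  # stand-in for environment time
        trajectory = {
            "worker": worker_id,
            "episode": episode,
            "reward": random.random(),  # placeholder reward, not a real signal
        }
        await queue.put(trajectory)


async def learner(queue, total_trajectories, batch_size):
    """Consume trajectories as they arrive and 'update' once per full batch."""
    updates = []
    batch = []
    for _ in range(total_trajectories):
        batch.append(await queue.get())
        if len(batch) == batch_size:
            # A real learner would take a gradient step here; we log mean reward.
            updates.append(sum(t["reward"] for t in batch) / batch_size)
            batch = []
    return updates


async def train(num_workers=4, episodes=5, batch_size=4):
    queue = asyncio.Queue()
    workers = [
        asyncio.create_task(rollout_worker(i, queue, episodes))
        for i in range(num_workers)
    ]
    updates = await learner(queue, num_workers * episodes, batch_size)
    await asyncio.gather(*workers)
    return updates


updates = asyncio.run(train())
print(f"performed {len(updates)} updates")
```

Decoupling the two sides this way keeps slow environments from stalling the learner, which matters more as the number of concurrent sandboxes grows.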

Composer is the technical core of Cursor 2.0, an updated development environment that supports a multi-agent interface. This allows developers to run up to eight AI agents in parallel, each working in an isolated workspace, to perform tasks independently or collaboratively on a single codebase.
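The isolation idea can be sketched in a few lines. This is an assumption-laden illustration, not how Cursor implements workspaces: each hypothetical agent here is a plain function that gets its own temporary directory, and the only detail taken from the article is the limit of eight parallel agents.

```python
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

MAX_AGENTS = 8  # parallel-agent limit cited for Cursor 2.0


def run_agent(agent_id, task):
    """Hypothetical agent: does its work inside a private temporary directory."""
    with tempfile.TemporaryDirectory(prefix=f"agent-{agent_id}-") as workspace:
        result_file = Path(workspace) / "result.txt"
        result_file.write_text(f"agent {agent_id} handled: {task}")
        return {
            "agent_id": agent_id,
            "workspace": workspace,
            "result": result_file.read_text(),
        }


def run_parallel(tasks):
    """Run one agent per task, each in its own workspace, up to MAX_AGENTS."""
    if len(tasks) > MAX_AGENTS:
        raise ValueError(f"at most {MAX_AGENTS} agents can run in parallel")
    with ThreadPoolExecutor(max_workers=MAX_AGENTS) as pool:
        return list(pool.map(run_agent, range(len(tasks)), tasks))


results = run_parallel(["fix linter errors", "write unit tests", "refactor module"])
for entry in results:
    print(entry["agent_id"], entry["result"])
```

Because no two agents share a working directory, one agent's edits cannot clobber another's; merging their results back into a single codebase is a separate step this sketch does not cover.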